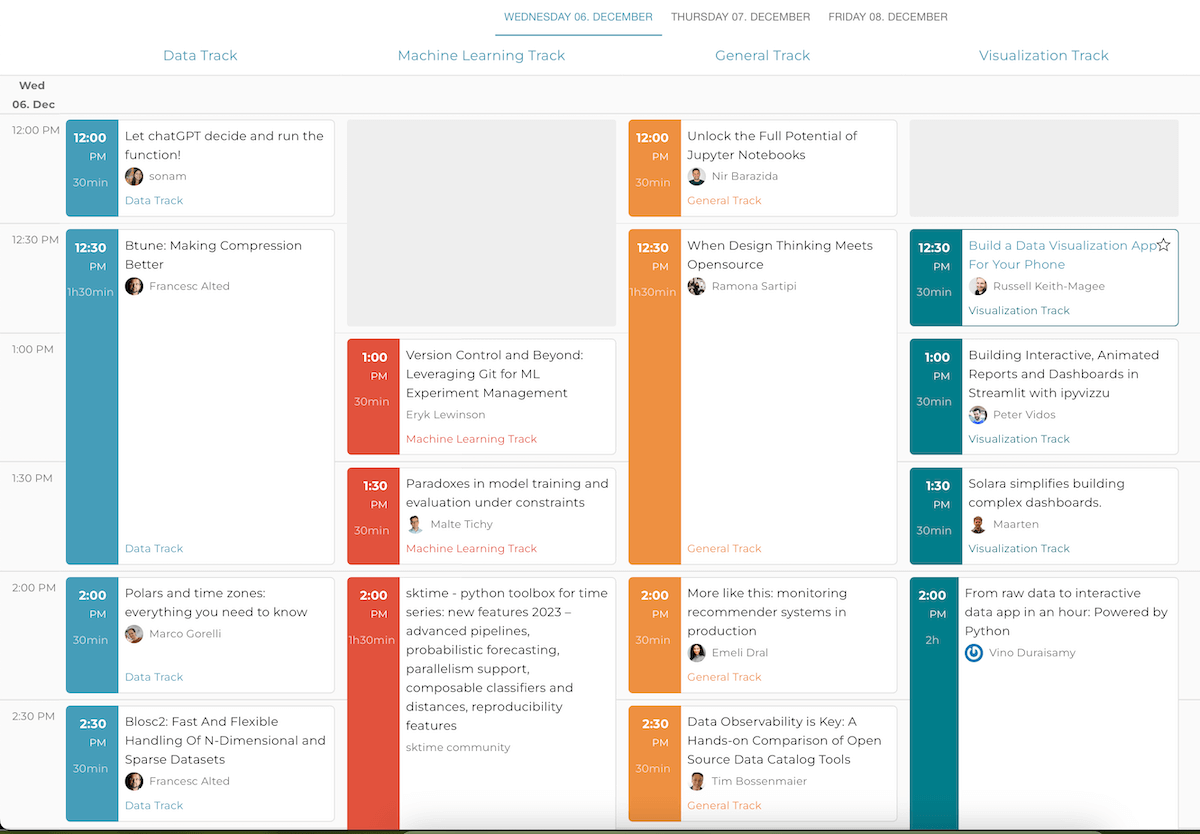

PyData Global will take place on December 6-8, 2023. The conference covers the most important data and AI topics in a hands-on way, using Python and many other open source technologies. Below, we provide a practical overview.

PyData Global brings together data engineers, scientists and practitioners

Key Insights / TL;DR


Overview of the most important data technologies and topics

Anyone working with data, Python, and AI, whether professionally or privately, needs an overview of many specializations. This is where PyData Global comes in handy, as it covers a very wide range of important fields. Its focus on open source technologies is particularly helpful, because it means there are no cost barriers to entry.

The main focuses of PyData are:

- Data Engineering

- Machine learning

- Data Visualization

- Large Language Models

- General data topics and application areas

We have grouped the sessions into practical subject areas and summarized the most important technologies. This way, you can see what the hot topics are even if you did not attend the conference.

Topic 1: Data

Data processing and performance optimization

These sessions are all about efficient data processing and performance optimization. They highlight the latest developments in popular frameworks such as pandas, Dask, and Polars, and present solutions for very large datasets, GPU acceleration, and Python data-processing workflows.

Sessions:

- All Them Data Engines: Pandas, Spark, Dask, Polars and more – Data Munging with Python circa 2023

- An Introduction to Pandas 2, Polars, and DuckDB

- Pandas 2, Dask or Polars? Quickly tackling larger data on a single machine

- Data of an Unusual Size: A practical guide to analysis and interactive visualization of massive datasets

- Optimize first, parallelize second: a better path to faster data processing

- cudf.pandas: The Zero Code Change GPU Accelerator for Pandas

- We rewrote tsfresh in Polars and why you should too
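A recurring idea in these sessions is processing data that does not fit in memory by reading it in chunks and combining partial aggregates. The following is a minimal plain-Python sketch of that idea, not the API of pandas, Dask, or Polars: the CSV columns `key` and `value` and the in-memory sample data are hypothetical stand-ins for a large file, and the real frameworks do this far faster with columnar, vectorized, and parallel execution.

```python
import csv
import io
from collections import defaultdict
from itertools import islice


def chunked_group_mean(lines, chunk_size=2):
    """Per-key mean of 'value', holding only one chunk of rows in memory at a time."""
    reader = csv.DictReader(lines)
    sums = defaultdict(float)  # running sum per key
    counts = defaultdict(int)  # running row count per key
    while True:
        chunk = list(islice(reader, chunk_size))  # read the next chunk of rows
        if not chunk:
            break
        for row in chunk:
            sums[row["key"]] += float(row["value"])
            counts[row["key"]] += 1
    # Combine the partial aggregates into the final per-key means.
    return {key: sums[key] / counts[key] for key in sums}


# Hypothetical sample data standing in for a file too large to load at once.
data = io.StringIO("key,value\na,1\nb,2\na,3\nb,4\nc,5\n")
print(chunked_group_mean(data))
```

This only works directly for aggregates that can be merged from partial results, such as sums, counts, or minima; an exact median, for example, cannot be combined this way.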

Innovative applications and data handling techniques

From better data compression with Btune and Blosc2 to the latest developments in FastAPI for data engineers and scientists, these sessions offer insights into exciting new tools and methods for data processing. In addition, LanceDB, a vector database for text, image, audio, and multimodal AI, will be presented.

Sessions:

- Btune: Making Compression Better

- Blosc2: Fast And Flexible Handling Of N-Dimensional and Sparse Datasets

- How I used Polars to build functime, a next gen ML forecasting library

- Arrow revolution in pandas and Dask

- API development for data analysts/scientists with FastApi

- LanceDB: lightweight billion-scale vector search for multimodal AI

- Build AI-powered data pipeline without vector databases
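The core operation of a vector database such as LanceDB is answering "which stored items are most similar to this query?" over embedding vectors. A minimal sketch of that operation as an exact, brute-force cosine-similarity search in plain Python; it does not reflect LanceDB's API, the tiny vectors and document IDs are hypothetical, and real systems use learned embeddings plus approximate indexes to reach billion-scale collections.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k (doc_id, score) pairs most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical 3-dimensional "embeddings"; real embeddings have hundreds of dimensions.
index = {
    "cat_photo": [0.9, 0.1, 0.0],
    "dog_photo": [0.8, 0.3, 0.1],
    "invoice_pdf": [0.0, 0.2, 0.9],
}
print(top_k([1.0, 0.2, 0.0], index))
```

Brute force compares the query with every stored vector, so its cost grows linearly with the collection; that cost is what approximate nearest-neighbor indexes are designed to avoid.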

Data analysis in special contexts

This section deals with applying data analysis in specific and sometimes unconventional contexts. Topics range from time zone handling with Polars to climate data analysis with Xclim. Of particular interest are the sessions on streaming data and serverless systems, which present new approaches to data persistence and processing.

Sessions:

- Polars and time zones: everything you need to know

- How to build a data pipeline without data: Synthetic data generation and testing with Python

- Data Tales from an Open Source Research Team

- Real-Time Revolution: Kickstarting Your Journey in Streaming Data

- Blazing fast I/O of data in the cloud with Daft Dataframes

- High speed data from the Lakehouse to DataFrames with Apache Arrow

- Production Data to the Model: “Are You Getting My Drift?”

- Unified batch and stream processing in python

- Data Harvest: Unlocking Insights with Python Web Scraping

- Data persistence with consistency and performance in a truly serverless system

- IID Got You Down? Resample Time Series Like A Pro

- Kùzu: A Graph Database Management System for Python Graph Data Science

- Xclim: Climate Data Processing and Analysis for Everyone
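Stream processing often comes down to grouping events into time windows. A minimal plain-Python sketch of a tumbling (fixed-size, non-overlapping) window count, assuming events arrive as `(timestamp_in_seconds, key)` pairs in timestamp order; the event data and window size are hypothetical, and production streaming engines additionally deal with late or out-of-order events and fault-tolerant state.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds=60):
    """Yield (window_start, Counter) for each window of time-ordered events."""
    current_start = None
    counts = Counter()
    for timestamp, key in events:
        window_start = timestamp - (timestamp % window_seconds)  # align to window boundary
        if current_start is not None and window_start != current_start:
            yield current_start, counts  # previous window is complete: emit it
            counts = Counter()
        current_start = window_start
        counts[key] += 1
    if current_start is not None:
        yield current_start, counts  # flush the last window when the input ends


# Hypothetical click events: (seconds since start, page)
events = [(5, "home"), (42, "cart"), (59, "home"), (61, "home"), (130, "cart")]
for start, counts in tumbling_window_counts(events):
    print(start, dict(counts))
```

Because the function is a generator that consumes any iterable, the same code processes a finite list (batch) or an unbounded iterator (stream), which is the intuition behind unified batch and stream processing.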

Topic 2: Machine Learning

Experiment management and evaluation in machine learning

The focus here is on managing and evaluating ML experiments. Sessions cover using version control tools such as Git for ML experiment management, paradoxes in model training and evaluation under constraints, and ML toolboxes such as sktime. The common thread is balancing technical sophistication with pragmatic applicability in ML.

Sessions:

- Version Control and Beyond: Leveraging Git for ML Experiment Management

- Paradoxes in model training and evaluation under constraints

- sktime – python toolbox for time series: new features 2023 – advanced pipelines, probabilistic forecasting, parallelism support, composable classifiers and distances, reproducibility features
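One ingredient of reproducible experiment management, whether built on Git or a dedicated tool, is tying each run to exactly the code, data, and hyperparameters that produced it. A minimal sketch under our own assumptions: the hypothetical helper `run_id` receives a Git commit hash and a data fingerprint as plain strings and hashes a canonical JSON record, so identical configurations always map to the same ID. This is not taken from any of the sessions.

```python
import hashlib
import json


def run_id(params, code_version, data_fingerprint):
    """Deterministic short ID for an experiment configuration (hypothetical helper)."""
    record = {"params": params, "code": code_version, "data": data_fingerprint}
    # sort_keys makes the JSON canonical, so dict key order does not change the hash
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Hypothetical values: a Git commit hash and a label for the training data version
a = run_id({"lr": 0.01, "depth": 6}, "3f2c1ab", "data-v1")
b = run_id({"depth": 6, "lr": 0.01}, "3f2c1ab", "data-v1")
c = run_id({"lr": 0.02, "depth": 6}, "3f2c1ab", "data-v1")
print(a == b, a == c)
```

Storing metrics under such an ID makes it easy to spot duplicate runs and to trace any result back to its exact configuration.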

Innovative approaches and tools in machine learning

This category presents innovative methods and tools in ML. Topics include improving open data quality, applying Gaussian processes, getting trustworthy probabilities and optimal decisions from scikit-learn classifiers, and designing ML systems for the real-time world. Of particular interest is the integration of local LLMs and code snippets into JupyterLab.

Sessions:

- Improving Open Data Quality using Python

- But what is a Gaussian process? Regression while knowing how certain you are

- Enhancing your JupyterLab Developer Experience with Local LLMs and Code Snippets

- Get the best from your scikit-learn classifier: trusted probabilities and optimal binary decision

- Unraveling Hidden Technical Debt in ML: A Pythonic Approach to Robust Systems

- DDataflow: An open-source end to end testing from machine learning pipelines

- Event-Driven Data Science: Reconceptualizing Machine Learning for the Real-time World
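The idea behind trusted probabilities and optimal binary decisions can be illustrated with a classic result: once a classifier's probabilities are well calibrated, the best threshold follows from the costs of errors rather than defaulting to 0.5. If a false positive costs c_FP, a false negative costs c_FN, and correct decisions cost nothing, predicting positive is cheaper in expectation exactly when p * c_FN > (1 - p) * c_FP, that is, when p > c_FP / (c_FP + c_FN). A minimal sketch with hypothetical costs; in practice, p would come from a calibrated model's `predict_proba`, which is not shown here.

```python
def optimal_threshold(cost_fp, cost_fn):
    """Probability above which predicting 'positive' minimizes expected cost.

    Assumes calibrated probabilities and zero cost for correct decisions.
    """
    return cost_fp / (cost_fp + cost_fn)


def decide(probabilities, cost_fp, cost_fn):
    """Turn calibrated positive-class probabilities into 0/1 decisions."""
    threshold = optimal_threshold(cost_fp, cost_fn)
    return [1 if p > threshold else 0 for p in probabilities]


# Hypothetical fraud-screening costs: missing fraud is 9x worse than a false alarm
print(optimal_threshold(cost_fp=1.0, cost_fn=9.0))  # 0.1
print(decide([0.05, 0.15, 0.6], cost_fp=1.0, cost_fn=9.0))  # [0, 1, 1]
```

With equal costs, the threshold reduces to the familiar 0.5; this rule is only as good as the calibration of the probabilities fed into it.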

Challenges in Machine Learning

Advanced application areas and challenges in ML are examined here. Topics range from building robust AI pipelines with Hugging Face and Kedro to frameworks for machine unlearning. Other highlights include maximizing GPU utilization during model training and bridging classic ML pipelines with the world of LLMs.

Sessions:

- Who needs ChatGPT? Rock solid AI pipelines with Hugging Face and Kedro

- Customizing and Evaluating LLMs, an Ops Perspective

- How can a learnt ML model unlearn something: Framework for “Machine Unlearning”

- Maximize GPU Utilization for Model Training

- Real Time Machine Learning

- Tricking Neural Networks : Explore Adversarial Attacks

- Bridging Classic ML Pipelines with the World of LLMs

- Compute anything with Metaflow

- Full-stack Machine Learning and Generative AI for Data Scientists

- Predictive survival analysis with scikit-learn, scikit-survival and lifelines

- sktime – the saga. Trials and tribulations of a charitable, openly governed open source project

- Modeling Extreme Events with PyMC

- Introduction to Machine Learning Pipelines: How to Prevent Data Leakage and Build Efficient Workflows
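A frequent source of data leakage is fitting preprocessing, such as feature scaling, on the full dataset before splitting, so statistics from the test data leak into training. A minimal plain-Python sketch of the leak-free pattern that scikit-learn's `Pipeline` automates: the scaling statistics are learned from the training split only and then reused unchanged on the test split. `MiniScaler` is a hypothetical stand-in, not scikit-learn's `StandardScaler`, and the numbers are made up.

```python
import statistics


class MiniScaler:
    """Hypothetical minimal standardizer: learns mean/std in fit, applies them in transform."""

    def fit(self, values):
        self.mean = statistics.fmean(values)
        self.std = statistics.pstdev(values)
        return self

    def transform(self, values):
        return [(v - self.mean) / self.std for v in values]


train = [10.0, 12.0, 14.0]
test = [30.0]  # hypothetical unseen value, far outside the training range

# Correct: statistics come from the training split only
fitted = MiniScaler().fit(train)
print(fitted.transform(test))

# Leaky: statistics computed on train + test, so test data shapes the features
leaky = MiniScaler().fit(train + test)
print(leaky.transform(test))
```

The leaky scaler makes the test point look far less unusual (about 1.7 standard deviations instead of about 11), which is the kind of optimistic bias leakage introduces into evaluation.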

Topic 3: General Track

Extension and optimization of tools and methods

In this area, the focus is on extending and optimizing existing tools and methods. From unlocking the full potential of Jupyter notebooks to building on-demand logistics apps with Python, it’s all about innovative applications and better ways of handling data and software. Topics such as fighting money laundering with Python and life in a lognormal world offer exciting insights into specialized domains.

Sessions:

- Unlock the Full Potential of Jupyter Notebooks

- When Design Thinking Meets Opensource

- More like this: monitoring recommender systems in production

- Data Observability is Key: A Hands-on Comparison of Open Source Data Catalog Tools

- Fighting Money Laundering with Python and Open Source Software

- Cloud UX for Data People

- Extremes, outliers, and GOATS: on life in a lognormal world

- Map of Open-Source Science (MOSS)

- VocalPy: a core Python package for acoustic communication research

- Intake 2

- The Hell, According to a Data Scientist

- Order up! How do I deliver it? Build on-demand logistics apps with Python, OR-Tools, and DecisionOps

- Getting better at Pokémon using data, Python, and ChatGPT.
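The lognormal session deals with distributions in which extremes dominate; a lognormal variable arises, for example, when many independent positive factors multiply. A quick standard-library simulation shows a typical consequence: a few very large values pull the mean well above the median. The parameters (mu = 0, sigma = 1), seed, and sample size are arbitrary choices for illustration.

```python
import random
import statistics

rng = random.Random(42)  # fixed seed so the run is repeatable
samples = [rng.lognormvariate(0.0, 1.0) for _ in range(100_000)]

mean = statistics.fmean(samples)
median = statistics.median(samples)
top_decile = sorted(samples)[-len(samples) // 10:]  # largest 10% of values
top_share = sum(top_decile) / sum(samples)

print(f"mean={mean:.2f} median={median:.2f} top-10% share={top_share:.0%}")
# Theory for mu=0, sigma=1: median = e^0 = 1, mean = e^0.5 (about 1.65)
```

In such a world, averages describe the "typical" case poorly, and the largest tenth of observations carries a disproportionate share of the total.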

Integration and application of new technologies

This category shows how new technologies can be integrated into existing systems and used efficiently. It covers bridging theory and practice in investment portfolios, improving runtime reproducibility in the Python ecosystem, and using Julia for decentralization. Also of interest are Python as a hackable language for interactive data science and workflows for scaling acoustic fisheries survey pipelines.

Sessions:

- Python-Driven Portfolios: Bridging Theory and Practice for Efficient Investments

- FawltyDeps: Finding undeclared and unused dependencies in your notebooks and projects

- The Internet’s Best Experiment Yet

- Xorbits Inference: Model Serving Made Easy

- Introduction to Using Julia for Decentralization by a Quant

- HPC in the cloud

- Architecting Data Tools: A Roadmap for Turning Theory and Data Projects into Python Packages

- Ensuring Runtime Reproducibility in the Python Ecosystem

- Prefect Workflows for Scaling Acoustic Fisheries Survey Pipelines

- Collaborate with your team using data science notebooks

- Python as a Hackable Language for Interactive Data Science

- Quarto dashboards

- Keras (3) for the Curious and Creative

- Hands-On Network Science

- NonlinearSolve.jl: how compiler smarts can help improve the performance of numerical methods

Topic 4: Visualization

Development of interactive data visualization apps

The focus is on building interactive data visualization apps with good usability. The sessions cover everything from mobile apps to complex dashboards and interactive network graphs, with an emphasis on technologies such as Streamlit and Shiny, which simplify creating animated reports and dashboards. Aesthetics and functionality must work together so that data can be presented understandably, no matter how complex the relationships are.

Sessions:

- Build a Data Visualization App For Your Phone

- Building Interactive, Animated Reports and Dashboards in Streamlit with ipyvizzu

- Solara simplifies building complex dashboards.

- From raw data to interactive data app in an hour: Powered by Python

- Building an Interactive Network Graph to Understand Communities

Simplify data exploration

This area is about making data exploration easier through visual means. New approaches show how complex datasets can be explored intuitively and interactively, which requires visualizing complex data relationships in an understandable way. Sessions such as understanding reactive execution in Shiny or building interactive network graphs offer practical insights into advanced applications of visualization technologies.

Sessions:

- Empowering Data Exploration: Creating Interactive, Animated Reports in Streamlit with ipyvizzu

- Understanding reactive execution in Shiny
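Reactive execution, in the spirit of Shiny's reactive model, means that derived values remember which inputs they read and are recomputed only after one of those inputs changes. A toy plain-Python sketch of that idea; the classes `Value` and `Computed` are hypothetical, this is not Shiny's API, and it deliberately omits features such as chains of derived values and cleaning up old dependencies.

```python
class Value:
    """Hypothetical reactive input: reading it inside a Computed records a dependency."""

    _active = None  # the Computed currently being evaluated, if any

    def __init__(self, value):
        self._value = value
        self._dependents = set()

    def get(self):
        if Value._active is not None:
            self._dependents.add(Value._active)  # remember who read this value
        return self._value

    def set(self, value):
        self._value = value
        for dependent in list(self._dependents):
            dependent.invalidate()  # mark readers stale; they recompute on next read


class Computed:
    """Hypothetical derived value that caches its result until an input it read changes."""

    def __init__(self, func):
        self._func = func
        self._stale = True
        self._cache = None
        self.runs = 0  # how often func actually executed, for demonstration

    def invalidate(self):
        self._stale = True

    def get(self):
        if self._stale:
            previous, Value._active = Value._active, self
            try:
                self._cache = self._func()
                self.runs += 1
            finally:
                Value._active = previous
            self._stale = False
        return self._cache


bins = Value(10)
data = Value([1, 2, 3])
summary = Computed(lambda: f"{len(data.get())} rows, {bins.get()} bins")

print(summary.get())  # computed on first read
print(summary.get())  # served from cache, no recomputation
bins.set(20)
print(summary.get())  # an input changed, so it recomputes
print(summary.runs)   # 2
```

The payoff in a dashboard is that changing one widget reruns only the outputs that depend on it, instead of the whole script.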

Topic 5: Large Language Models

This is what we’ve been waiting for. Of course, there is no Data/AI conference without LLMs.

Development and use of Large Language Models (LLMs)

This track focuses on the practical application and development of Large Language Models (LLMs). From creating context-aware chatbots to training large-scale models with PyTorch, these sessions cover a broad spectrum. The aim is to push the boundaries of LLMs and use them effectively in practice. Topics such as accelerating document deduplication for training LLMs and the productionization of open-source LLMs offer deep insights into the current challenges and solutions in this fast-growing field.

Sessions:

- Building Contextual ChatBot using LLMs, Vector Databases and Python

- Accelerating fuzzy document deduplication to improve LLM training with RAPIDS and Dask

- LLMs: Beyond the Hype – A Practical Journey to Scale

- Producing Open Source LLMs

- Leveraging open-source LLMs for production

- From RAGs to riches: Build an AI document interrogation app in 30 mins

- Training large scale models using PyTorch
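Fuzzy deduplication of training documents is commonly based on MinHash, which estimates the Jaccard similarity of two documents' sets of shingles (here, word 3-grams) from short signatures instead of comparing the full sets. A minimal single-machine sketch of that estimate; it does not reproduce the GPU-accelerated RAPIDS and Dask implementation from the session, and the salted BLAKE2 hash family and sample texts are assumptions chosen for simplicity. Large-scale pipelines generally add locality-sensitive hashing on top to avoid comparing all pairs.

```python
import hashlib


def shingles(text, n=3):
    """Set of word n-grams; assumes the document has at least n words."""
    words = text.lower().split()
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def minhash_signature(items, num_hashes=128):
    """For each of num_hashes salted hash functions, keep the minimum hash over the set."""
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(4, "little")  # a different salt acts as a different hash function
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(item.encode("utf-8"), digest_size=8, salt=salt).digest(), "big"
            )
            for item in items
        ))
    return signature


def estimated_jaccard(sig_a, sig_b):
    """Fraction of agreeing signature positions estimates the Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def jaccard(a, b):
    """Exact Jaccard similarity of two sets, for comparison."""
    return len(a & b) / len(a | b)


doc1 = "the quick brown fox jumps over the lazy dog near the river bank"
doc2 = "the quick brown fox jumps over the lazy dog near the river"
doc3 = "large language models are trained on deduplicated web text"

s1, s2, s3 = shingles(doc1), shingles(doc2), shingles(doc3)
sig1, sig2, sig3 = (minhash_signature(s) for s in (s1, s2, s3))
print(round(jaccard(s1, s2), 3), estimated_jaccard(sig1, sig2))
print(jaccard(s1, s3), estimated_jaccard(sig1, sig3))
```

Near-duplicates such as `doc1` and `doc2` get an estimate close to their true similarity, so pairs above a chosen threshold can be flagged and all but one copy dropped.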

Advanced applications and business benefits of LLMs

In this category, the focus is on advanced applications of LLMs and their potential business benefits. The sessions present approaches that use LLMs for specific tasks such as improving search engines and building learning-to-rank models. In addition, they show how orchestrating generative AI workflows can deliver business value.

Sessions:

- Building Learning to Rank models for search using Large Language Models

- Using Large Language Models to improve your Search Engine

- Orchestrating Generative AI Workflows to Deliver Business Value
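Learning-to-rank models are usually evaluated with ranking metrics such as NDCG (normalized discounted cumulative gain), whether the relevance labels come from human judges or from an LLM. A plain-Python sketch of the standard formula DCG@k = sum over ranks i = 1..k of (2^rel_i - 1) / log2(i + 1), normalized by the DCG of the ideal ordering; the relevance grades are hypothetical.

```python
import math


def dcg(relevances, k):
    """Discounted cumulative gain of the top-k results (rank 1 = first element)."""
    return sum(
        (2 ** rel - 1) / math.log2(rank + 1)
        for rank, rel in enumerate(relevances[:k], start=1)
    )


def ndcg(relevances, k):
    """DCG divided by the DCG of the ideal (descending) ordering; 1.0 is perfect."""
    ideal = dcg(sorted(relevances, reverse=True), k)
    return dcg(relevances, k) / ideal if ideal > 0 else 0.0


# Hypothetical graded relevance (0-3) of returned documents, in ranked order
ranking = [3, 2, 0, 1]
print(round(ndcg(ranking, k=4), 3))
```

As a simplification, the ideal ordering here is computed from the returned list only; full evaluations compute it over all judged documents for the query.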

Conclusion: The data topics of the future

With this impressive selection of sessions, ranging from the intricacies of data processing to novel applications of Large Language Models, this conference is a veritable treasure trove of knowledge. The sessions offer insights into the cutting edge of technological development, and the conference also brings together an inspiring community of like-minded people who are shaping the future of data and artificial intelligence together.