The breakthrough for open source AI has arrived: OLMo 2 32B sets new standards in performance and efficiency.

The Allen Institute for AI (AI2) has unveiled OLMo 2 32B, a new large language model that marks a significant advance for open AI models. It is the first fully open model to outperform GPT-3.5-Turbo and GPT-4o mini on a suite of academic benchmarks, and its training required only a fraction of the compute used for comparable models. This is a clear sign that open source AI is entering a new phase.

The importance of this development can hardly be overestimated: OLMo 2 32B narrows the gap between open and closed AI systems to around 18 months. The project is also completely transparent, with all data, code, weights and training details freely available, which encourages scientific collaboration and innovation across the industry.

Technical superiority through innovative training methods

OLMo 2 32B has 32 billion parameters and was trained on up to 6 trillion tokens. Training consisted of pre-training on OLMo-Mix-1124 (3.9 trillion tokens) followed by mid-training on Dolmino-Mix-1124. According to AI2, this approach allowed the model to match the performance of Qwen 2.5 32B at only about a third of its training cost.
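To give a feel for where a "one third" figure can come from, the sketch below applies the widely used back-of-envelope rule that training a dense transformer costs roughly 6 × N × D FLOPs (N = parameters, D = training tokens). The OLMo 2 figures are the ones stated above; the Qwen 2.5 32B figures (about 32.5B parameters, up to 18T pre-training tokens) are assumptions taken from Qwen's public report, not from this article. This is an illustrative estimate, not AI2's own compute accounting.

```python
import math


def training_flops(params: float, tokens: float) -> float:
    """Approximate training FLOPs for a dense transformer: C ~ 6 * N * D."""
    return 6.0 * params * tokens


# OLMo 2 32B: figures from the article (32B parameters, up to 6T tokens).
olmo_flops = training_flops(32e9, 6e12)

# Qwen 2.5 32B: ASSUMED figures from Qwen's public report
# (roughly 32.5B parameters, pre-trained on up to 18T tokens).
qwen_flops = training_flops(32.5e9, 18e12)

ratio = olmo_flops / qwen_flops

print(f"OLMo 2 32B:   {olmo_flops:.2e} FLOPs")
print(f"Qwen 2.5 32B: {qwen_flops:.2e} FLOPs")
print(f"Ratio:        {ratio:.2f}")
```

Under these assumptions the ratio comes out at roughly 0.33. The approximation ignores attention overhead, data-mixing details and hardware efficiency, so it only indicates the order of magnitude.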

The training infrastructure is particularly impressive: the model was trained on Augusta, a 160-node AI hypercomputer from Google Cloud Engine, which reached a training throughput of over 1,800 tokens per second per GPU. This efficiency makes OLMo 2 32B not only a powerful model but also an economically attractive one for researchers and developers.
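The per-GPU number is easier to interpret at cluster scale. The sketch below multiplies it out under one loudly stated assumption: 8 GPUs per node, which is typical for Google Cloud's H100-based nodes but is not given in the article. The resulting wall-clock figure is an idealized illustration (full cluster, constant throughput, no restarts or evaluation pauses), not AI2's reported training time.

```python
NODES = 160                      # from the article
GPUS_PER_NODE = 8                # ASSUMPTION: not stated in the article
TOKENS_PER_SEC_PER_GPU = 1_800   # article says "over 1,800"
TOTAL_TOKENS = 6e12              # "up to 6 trillion tokens"
SECONDS_PER_DAY = 86_400

gpus = NODES * GPUS_PER_NODE
cluster_tokens_per_sec = gpus * TOKENS_PER_SEC_PER_GPU

# Idealized duration: assumes every GPU runs at the stated rate the whole time.
ideal_days = TOTAL_TOKENS / cluster_tokens_per_sec / SECONDS_PER_DAY

print(f"GPUs: {gpus}")
print(f"Cluster throughput: {cluster_tokens_per_sec:,} tokens/s")
print(f"Idealized time for 6T tokens: {ideal_days:.1f} days")
```

With these inputs the cluster processes about 2.3 million tokens per second, which works out to roughly a month of uninterrupted compute for 6 trillion tokens; real runs include downtime and other overheads.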

Democratization of advanced AI technology

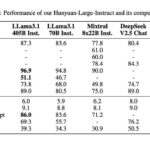

OLMo 2 32B represents a paradigm shift in AI research. The model is integrated into the Hugging Face Transformers library and the main branch of vLLM, ensuring wide availability. It surpasses or matches models such as GPT-3.5 Turbo, GPT-4o mini, Qwen 2.5 32B and Mistral 24B, while approaching the performance of larger models such as Qwen 2.5 72B and Llama 3.1 and 3.3 70B.

The team plans to improve the model's reasoning and extend its ability to process longer texts. This suggests that OLMo 2 32B is just the beginning of a new generation of open AI models that could fundamentally change the landscape of artificial intelligence.

Summary

- OLMo 2 32B is the first fully open model to outperform GPT-3.5-Turbo and GPT-4o mini in academic benchmark tests

- The model achieves its performance with only one third of the training cost of comparable models

- With 32 billion parameters, it has been trained on up to 6 trillion tokens

- Full transparency (data, code, weights, training details) encourages collaboration in AI research

- The gap between open and closed AI systems is reduced to around 18 months

- OLMo 2 32B is integrated into Hugging Face Transformers and vLLM

- Future developments will focus on reasoning and processing longer texts

Source: Ai2