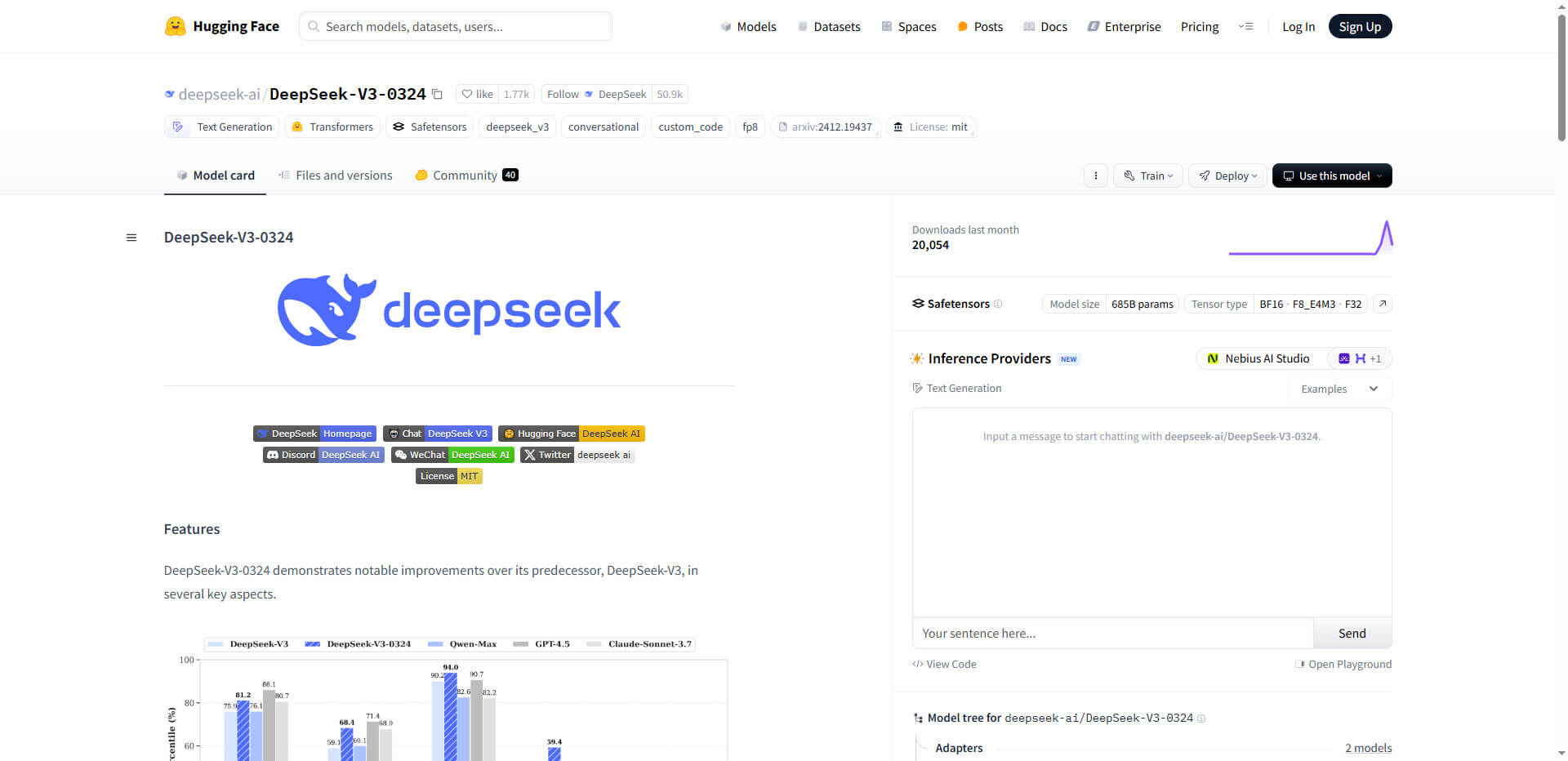

DeepSeek-V3-0324 marks a significant step forward for open source artificial intelligence. With 685 billion parameters in total, the language model clearly outperforms its predecessor, DeepSeek-V3, and raises the bar for open source AI performance.

DeepSeek AI's model uses a Mixture-of-Experts (MoE) architecture that activates only 37 billion of its parameters for each token, so complex queries are processed with far less compute than a dense model of the same size would need. DeepSeek-V3, on which the new version builds, was trained on 14.8 trillion tokens, and its full training run required 2.78 million H800 GPU hours. That investment underlines the company's determination to compete with proprietary solutions from large tech companies.
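To illustrate how sparse activation works, here is a minimal toy sketch of top-k expert routing, not DeepSeek's implementation. DeepSeek-V3's technical report describes sigmoid affinity scores, top-k expert selection, and gate weights renormalized over the chosen experts; the sketch follows that idea but omits the shared expert and the load-balancing bias. The expert count, `TOP_K`, the scalar "experts" and the function names `route` and `moe_layer` are all hypothetical.

```python
import math
import random

NUM_EXPERTS = 16  # hypothetical toy size; the real model has far more experts
TOP_K = 2         # hypothetical number of experts evaluated per token


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def route(affinities: list[float], k: int) -> dict[int, float]:
    """Return {expert_index: gate_weight} for the k best-scoring experts."""
    scores = [sigmoid(a) for a in affinities]
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    total = sum(scores[i] for i in chosen)
    # Renormalize so the selected gate weights sum to 1.
    return {i: scores[i] / total for i in chosen}


def moe_layer(x: float, experts, affinities: list[float], k: int) -> float:
    """Weighted sum of the selected experts' outputs for one token."""
    gates = route(affinities, k)
    # Only the k selected experts run; all others stay idle for this token.
    return sum(w * experts[i](x) for i, w in gates.items())


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy "experts": expert i simply scales its input by i + 1.
    experts = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]
    affinities = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    print("selected experts:", sorted(route(affinities, TOP_K)))
    print("output:", moe_layer(1.0, experts, affinities, TOP_K))
    # Share of all parameters that is active per token, using the article's figures.
    print(f"active share: {37 / 685:.1%}")
```

The last line shows the practical effect: 37 of 685 billion parameters means only about 5.4 percent of the weights take part in processing any single token.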

Technical innovations and performance improvements

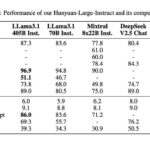

The benchmark results back this up: compared with its predecessor, DeepSeek-V3-0324 gains 5.3 points on MMLU-Pro (to 81.2) and 9.3 points on GPQA (to 68.4). The jump on AIME, the American Invitational Mathematics Examination, is particularly noteworthy: the score rises by 19.8 points to 59.4, pointing to markedly stronger mathematical reasoning.
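The reported gains can be turned around to recover the predecessor's scores and to express each gain relative to its starting point. This is a small arithmetic sketch that uses only the figures quoted in this article; the helper names `previous_scores` and `relative_gains` are hypothetical.

```python
# New scores for DeepSeek-V3-0324 and the reported gains over its predecessor.
results = {
    "MMLU-Pro": (81.2, 5.3),
    "GPQA": (68.4, 9.3),
    "AIME": (59.4, 19.8),
}


def previous_scores(res: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Predecessor's score = new score minus reported gain."""
    return {name: round(new - gain, 1) for name, (new, gain) in res.items()}


def relative_gains(res: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Gain as a percentage of the predecessor's score."""
    return {name: round(100 * gain / (new - gain), 1) for name, (new, gain) in res.items()}


if __name__ == "__main__":
    prev = previous_scores(results)
    rel = relative_gains(results)
    for name, (new, gain) in results.items():
        print(f"{name}: {prev[name]} -> {new} (+{gain}, +{rel[name]}%)")
```

Run as a script, it shows the predecessor at 75.9 (MMLU-Pro), 59.1 (GPQA) and 39.6 (AIME); in relative terms the AIME score improved by about 50 percent, far more than the roughly 7 percent on MMLU-Pro.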

Code generation is one of the model’s standout capabilities. In reported tests, DeepSeek-V3-0324 generated error-free code of up to 700 lines, a result that rivals expensive proprietary solutions. Because it produces readable, stylistically consistent code, the model also suits so-called “vibe coding”, in which developers describe what they want in natural language and let the model write the code. This makes it particularly valuable for development teams.

Practical applications

DeepSeek-V3-0324 can be used in numerous industries:

- Financial sector: Complex analysis and risk assessment

- Healthcare: Medical research support and diagnostic aids

- Software development: Automated code generation and error analysis

- Telecommunications: Optimization of network architectures

The model can be served with inference frameworks such as SGLang (on NVIDIA and AMD GPUs), LMDeploy and TensorRT-LLM. Quantized GGUF versions ranging from 1.78 to 4.5 bits per weight have also been published, making it possible to run the model locally on hardware with far less memory than the full-precision weights require.
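To get a feel for why quantization matters at this scale, a rough estimate of weight storage is parameters × bits per weight ÷ 8. The sketch below applies it to the 685 billion total parameters. It assumes the quoted bit widths are averages across all weights and ignores file metadata, tensors kept at higher precision and runtime memory such as the KV cache, so actual GGUF files and memory use will differ. The function name `approx_weight_gb` is hypothetical.

```python
TOTAL_PARAMS = 685e9  # total parameter count quoted in the article


def approx_weight_gb(params: float, bits_per_weight: float) -> float:
    """Rough weight size in GB (1 GB = 1e9 bytes): params * bits / 8.

    Assumes a uniform average bit width; ignores metadata, higher-precision
    tensors and runtime memory, so real file sizes will differ.
    """
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # 16 bits as a full-precision reference, then the published quantization range.
    for bits in (16, 4.5, 1.78):
        print(f"{bits:>5} bits/weight: ~{approx_weight_gb(TOTAL_PARAMS, bits):,.0f} GB")
```

Under these assumptions the weights shrink from roughly 1,370 GB at 16 bits to about 385 GB at 4.5 bits and about 152 GB at 1.78 bits.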

Summary

- DeepSeek-V3-0324 is an open source AI model with 685 billion parameters under MIT license

- Mixture-of-Experts architecture activates only 37 billion parameters per token for efficient processing

- Significant performance improvements in benchmark tests such as MMLU-Pro (+5.3 points) and GPQA (+9.3 points)

- Multi-head latent attention and improved load-balancing strategies keep inference efficient at this scale

- Support for multiple inference frameworks (SGLang, LMDeploy, TRT-LLM) for flexible deployment options

- Strong code generation, reportedly producing up to 700 lines of error-free code

- Open availability via the Hugging Face platform without commercial restrictions

Source: Hugging Face