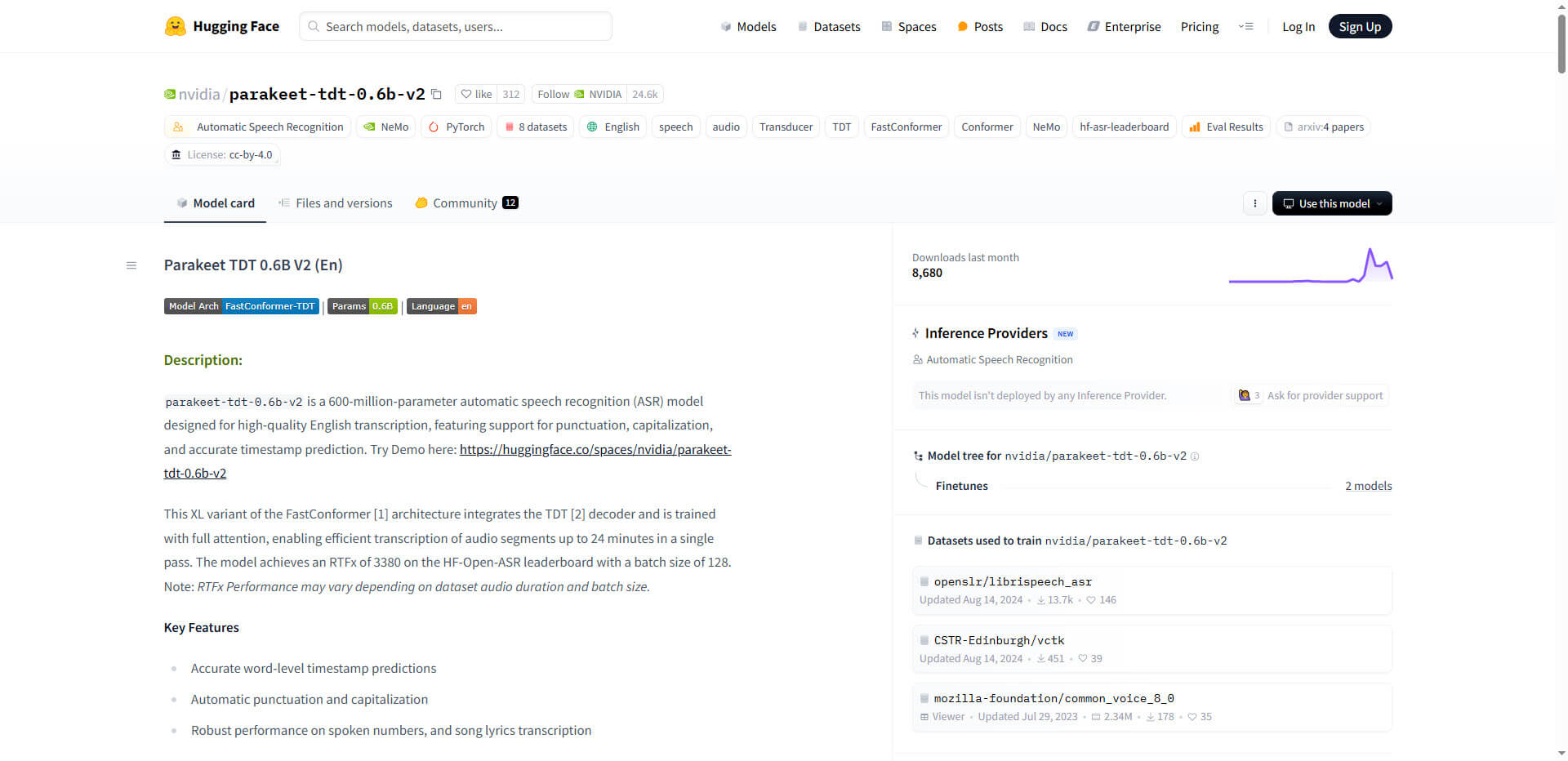

With only 600 million parameters, the new NVIDIA Parakeet-TDT-0.6B-V2 model outperforms larger competitors and sets new standards for automatic speech recognition.

Automatic Speech Recognition (ASR) has taken a significant step forward with NVIDIA's latest model. Parakeet-TDT-0.6B-V2 pairs a new architecture with high computational efficiency: despite having only 600 million parameters, it achieves higher accuracy on standard benchmarks than larger models such as Whisper-large-v3 (1.6 billion parameters). This is particularly noteworthy because it makes automatic speech recognition both more accurate and less resource-intensive.

The core of the innovation is the hybrid FastConformer-TDT architecture, which pairs an optimized FastConformer encoder with a Token-and-Duration Transducer (TDT) decoder. Whereas a conventional transducer must step through the encoder output one frame at a time, the TDT decoder predicts both the next token and how many frames that token spans, so it can skip frames during inference. This reduces decoding latency by 64% compared to conventional methods while also improving accuracy. The model can also transcribe up to 24 minutes of audio in a single pass while accurately predicting punctuation, capitalization and timestamps.
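To make the frame-skipping idea concrete, here is a toy sketch of greedy TDT-style decoding in Python. It is not NeMo's implementation: the trained joint network is replaced by a hypothetical lookup table (`TOY_TABLE`) that returns, for each encoder frame, either a token or a blank (`None`) together with a predicted duration in frames. The names `tdt_greedy_decode`, `toy_joint` and `max_symbols_per_frame` are illustrative assumptions.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical joint function: (frame index, tokens emitted so far)
# -> (token, or None for blank; predicted duration in frames).
JointFn = Callable[[int, List[str]], Tuple[Optional[str], int]]


def tdt_greedy_decode(
    num_frames: int, joint: JointFn, max_symbols_per_frame: int = 3
) -> Tuple[List[str], int]:
    """Toy greedy TDT-style decoding; returns (tokens, number of joint calls)."""
    tokens: List[str] = []
    t, calls, emitted_here = 0, 0, 0
    while t < num_frames:
        token, duration = joint(t, tokens)
        calls += 1
        if token is not None:  # None stands for the blank symbol
            tokens.append(token)
            emitted_here += 1
        # A blank, or too many tokens on one frame, must move time forward.
        if duration == 0 and (token is None or emitted_here >= max_symbols_per_frame):
            duration = 1
        if duration > 0:
            emitted_here = 0
        t += duration  # jump ahead by the predicted duration, not just one frame
    return tokens, calls


# Hypothetical joint-network outputs for a 12-frame utterance.
TOY_TABLE: Dict[int, Tuple[Optional[str], int]] = {
    0: (None, 2),
    2: ("hel", 1),
    3: ("lo", 3),
    6: (None, 2),
    8: ("world", 4),
}


def toy_joint(t: int, history: List[str]) -> Tuple[Optional[str], int]:
    # Frames missing from the table default to a blank that advances one frame.
    return TOY_TABLE.get(t, (None, 1))


tokens, calls = tdt_greedy_decode(12, toy_joint)
print(tokens, calls)  # ['hel', 'lo', 'world'] 5
```

A conventional transducer would have to evaluate its joint network at least once on each of the 12 frames; in this toy run, five calls cover the whole utterance because each prediction jumps ahead by its duration.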

Impressive performance through innovative training

The Parakeet model was trained on the Granary dataset, roughly 120,000 hours of English audio that combines manually transcribed and pseudo-labeled data from various sources. This diversity makes the model robust to different speaking styles, accents and background noise.

In benchmark comparisons, the model performs strongly. On the LibriSpeech test-clean set, it achieves a word error rate (WER) of just 1.69%, an improvement of 38% over Whisper-large-v3. Even under difficult conditions such as telephone-quality audio (6.32% WER) or noisy environments (8.39% WER at 5 dB SNR), its performance remains stable and clearly ahead of competing models.
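WER counts the word-level substitutions (S), deletions (D) and insertions (I) needed to turn a transcript into the reference, divided by the number of reference words (N): WER = (S + D + I) / N. The minimal Python sketch below computes it with a word-level Levenshtein distance. The function name `word_error_rate` is illustrative, and published benchmark numbers are normally computed after text normalization (casing, punctuation), which this sketch leaves out.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (S + D + I) / N using word-level edit distance."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference prefix
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[-1] / len(ref)


wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(f"{wer:.2%}")  # one substitution + one deletion over 6 words: 33.33%
```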

The model's computational efficiency is particularly remarkable: with an inverse real-time factor (RTFx) of 3380 on NVIDIA A100 GPUs, meaning it processes audio about 3,380 times faster than real time, 10 minutes of audio can be transcribed in less than 0.2 seconds. Its low memory requirement of just 2.1 GB of VRAM also makes deployment on edge devices such as the NVIDIA Jetson Orin platform feasible.
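Because RTFx measures seconds of audio transcribed per second of compute, dividing the audio duration by RTFx gives the processing time. The small Python sketch below checks the 10-minute figure; the helper name `processing_seconds` is illustrative. Throughput figures like this are typically measured with batched inference, so the latency of a single short request can differ.

```python
def processing_seconds(audio_seconds: float, rtfx: float) -> float:
    """Estimated wall-clock transcription time at a given inverse real-time factor."""
    if rtfx <= 0:
        raise ValueError("rtfx must be positive")
    return audio_seconds / rtfx


ten_minutes = 10 * 60
print(f"{processing_seconds(ten_minutes, 3380):.3f} s")  # 600 / 3380 -> 0.178 s
```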

Practical applications and future prospects

The Parakeet-TDT-0.6B-V2 model is well suited to real-time applications such as live subtitling, transcription of meetings and telephone calls, and voice control of devices. Integration into existing systems is eased by the optimized inference pipeline, which works with NVIDIA Triton Inference Server and with TensorRT optimizations for specific GPU architectures.

The model's architecture also opens up promising research directions. Initial experiments on cross-lingual transfer learning show a 12% WER reduction when adapting the model to German with only 50 hours of target-language data. Multimodal approaches that integrate visual speech recognition (lip reading) could reduce the WER in noisy video by a further 19%.
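Percentages like these are usually quoted as relative reductions. Assuming that reading, they compare the new WER with the baseline WER:

$$
\Delta_{\text{rel}} = \frac{\mathrm{WER}_{\text{baseline}} - \mathrm{WER}_{\text{adapted}}}{\mathrm{WER}_{\text{baseline}}}
$$

For instance, with a hypothetical baseline of 20.0% WER, a 12% relative reduction gives $20.0\% \times (1 - 0.12) = 17.6\%$ WER, an absolute gain of 2.4 percentage points.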

Summary:

- NVIDIA Parakeet-TDT-0.6B-V2 outperforms larger models such as Whisper-large-v3 (1.6B) with only 600 million parameters

- Innovative FastConformer-TDT architecture reduces decoding latency by 64% and improves accuracy

- Training on the 120,000-hour Granary dataset ensures robustness across different speaking styles and environments

- Exceptional efficiency: processes audio 3,380 times faster than real time (RTFx) on A100 GPUs and requires only 2.1 GB of VRAM

- Promising applications in real-time transcription, multilingual adaptation and multimodal speech recognition

Source: Hugging Face