With M2, MiniMax has released an open source language model that delivers performance comparable to Claude Sonnet at only 8 percent of its price and runs nearly twice as fast.

The Chinese AI start-up MiniMax is positioning itself as a serious competitor in the global AI market with the release of MiniMax M2. The new language model ranked 5th worldwide in the Artificial Analysis benchmarks, ahead of every other available open source model. Its defining feature is a Mixture-of-Experts (MoE) architecture with 230 billion total parameters, of which only 10 billion are active per token.

The API pricing of 0.30 dollars per million input tokens and 1.20 dollars per million output tokens drastically undercuts Claude Sonnet, which costs 3.00 dollars and 15.00 dollars respectively. At the same time, MiniMax M2 achieves an inference speed of around 1,500 tokens per second, while Claude Sonnet only manages 900 tokens per second. This combination of low cost and high performance makes the model particularly attractive for agent workflows and code generation.
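The headline price comparison is simple arithmetic on the per-million-token rates above. The Python sketch below plugs them into a hypothetical agent workload of 20 million input tokens and 5 million output tokens (an assumed mix, not a figure from MiniMax) and also computes the speed ratio from the quoted throughput numbers.

```python
# Published API prices in US dollars per million tokens (from the article).
PRICES = {
    "MiniMax M2": {"input": 0.30, "output": 1.20},
    "Claude Sonnet": {"input": 3.00, "output": 15.00},
}

def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the API cost in dollars for the given token counts."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000

# Per-token price ratios: M2's price as a share of Sonnet's.
for kind in ("input", "output"):
    share = PRICES["MiniMax M2"][kind] / PRICES["Claude Sonnet"][kind]
    print(f"{kind} price: {share:.0%} of Claude Sonnet")

# Hypothetical workload (assumption): 20M input tokens, 5M output tokens.
m2 = workload_cost("MiniMax M2", 20_000_000, 5_000_000)
sonnet = workload_cost("Claude Sonnet", 20_000_000, 5_000_000)
print(f"MiniMax M2: ${m2:.2f}, Claude Sonnet: ${sonnet:.2f} ({m2 / sonnet:.1%})")

# Throughput ratio from the quoted speeds in tokens per second.
print(f"speed ratio: {1500 / 900:.2f}x")
```

With this assumed mix, M2 comes out at roughly 9 percent of Sonnet's cost. The output price alone is exactly 8 percent of Sonnet's and the input price 10 percent, so output-heavy workloads approach the 8 percent figure. Likewise, 1,500 versus 900 tokens per second is a factor of about 1.7.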

The company was founded in 2021 by former SenseTime employees and has already made a name for itself with successful consumer products such as the Talkie app, which reaches 11 million monthly active users worldwide. NVIDIA CEO Jensen Huang recently praised MiniMax as one of the world’s leading AI innovators and spent two hours in direct conversation with founder Yan Junjie.

Technical superiority through efficient architecture

MiniMax M2 is built on a highly optimized Mixture-of-Experts implementation that is significantly more efficient than comparable models. For example, while DeepSeek V3.2 uses 37 billion active parameters, MiniMax M2 uses only 10 billion and still achieves comparable or better performance. This efficiency allows more responsive agent loops and more parallel requests on the same compute budget.
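The core idea of a Mixture-of-Experts layer is that a small router picks a few experts for each token, so only a fraction of the total parameters does any work for that token. The following is a minimal, generic Python sketch of top-k routing, not MiniMax's implementation: the source does not say how many experts M2 has or how many it activates, so the expert count, `k=2`, the linear router and the toy experts are all hypothetical. It ends with a back-of-envelope compute comparison based on the common rule of thumb of about 2 FLOPs per active parameter per token.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, router_weights, experts, k=2):
    """Route vector x to the top-k experts and mix their outputs.

    router_weights: one weight vector per expert (hypothetical linear router).
    experts: list of callables, each mapping a vector to a vector.
    Only the k selected experts are evaluated; the others stay idle.
    """
    # Router score per expert: dot product of x with that expert's weights.
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Indices of the k highest-scoring experts.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights are renormalized over the selected experts only.
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # only these k experts do any computation
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, top

# Toy setup (hypothetical): 8 experts, each scaling its input by a constant.
random.seed(0)
dim, n_experts = 4, 8
router = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_experts)]
experts = [lambda v, s=s: [s * vi for vi in v] for s in range(1, n_experts + 1)]
out, used = moe_forward([0.5, -1.0, 2.0, 0.1], router, experts, k=2)
print("experts used:", used)

# Rough per-token compute with the ~2 FLOPs per active parameter rule of
# thumb for a forward pass (ignores attention cost over long contexts).
ACTIVE = {"MiniMax M2": 10e9, "DeepSeek V3.2": 37e9}
for name, n in ACTIVE.items():
    print(f"{name}: ~{2 * n / 1e9:.0f} GFLOPs per token")
print(f"M2 active share: {10 / 230:.1%} of its 230B parameters")
```

Because per-token compute scales with active rather than total parameters, 10 billion active parameters imply roughly a quarter of the per-token compute of a model with 37 billion active, which is what lets more agent loops run in parallel on the same hardware.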

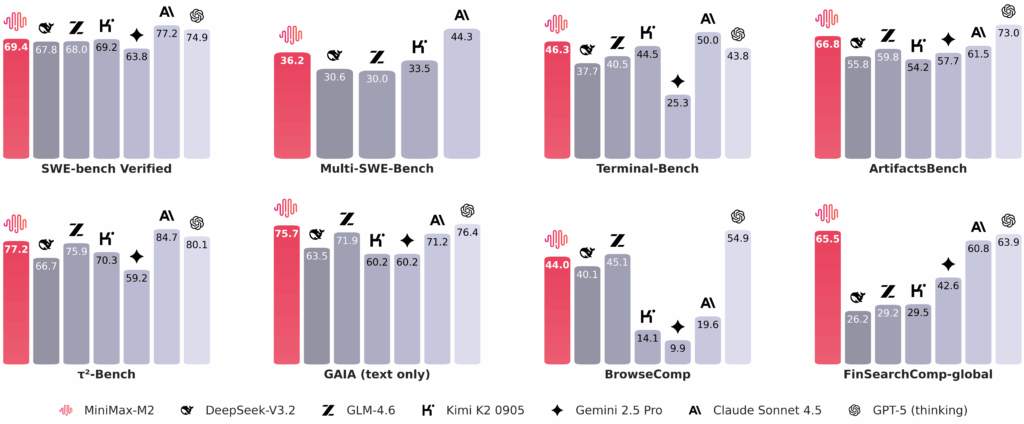

The model shows its strengths particularly in code-specific benchmarks. On SWE-Bench Verified, MiniMax M2 achieves a score of 69.4 percent, outperforming GLM-4.6 with 68 percent. On Terminal-Bench, which tests the ability to execute command line tasks, the model scores 46.3 percent and outperforms both GPT-5 and Claude Sonnet 4.5. This performance makes MiniMax M2 particularly valuable for software development and automated programming tasks.


Strategic impact on the global AI market

The model's full open-source release under the MIT license on Hugging Face democratizes access to frontier-class AI capabilities. Organizations can run it locally and meet privacy requirements without relying on external APIs. This approach contrasts sharply with the proprietary strategies of OpenAI and Anthropic and could fundamentally challenge their business models.

China invested around 132.7 billion dollars in AI development between 2019 and 2023, which explains the simultaneous emergence of several frontier-class models such as DeepSeek R1, Qwen 2.5-Max and GLM-4.5. This focus on efficiency was partly a consequence of US export restrictions on advanced semiconductors, which pushed Chinese developers to optimize algorithms and architectures rather than simply scaling up parameters and training data.

The most important facts about the update

- MiniMax M2 ranks 5th worldwide in the Artificial Analysis benchmarks, the best result of any open source model

- 90 percent lower input price and 92 percent lower output price than Claude Sonnet, with comparable performance

- Nearly double the inference speed: about 1,500 tokens/second vs. 900 for Claude Sonnet

- Mixture-of-Experts architecture with 230B total parameters, only 10B active

- Fully open source under the MIT license, available via Hugging Face

- Specialization in agent workflows and code generation

- IPO planned for 2025, which would make MiniMax the first of China's “AI Tiger” startups to go public

- Recognized by NVIDIA CEO Jensen Huang as a global AI innovation leader

Executive Summary

MiniMax has developed M2, an open-source language model that achieves frontier-level performance at significantly reduced cost and higher speed. The Mixture-of-Experts architecture with only 10 billion active parameters enables exceptional efficiency for agent workflows and code generation. Full open-source availability democratizes access to advanced AI capabilities and challenges established proprietary models. China’s efficiency-driven development approach has led to the simultaneous emergence of several competitive models that are gaining international recognition and reshaping the global AI landscape.

Source: MiniMax