The AI hardware start-up Groq has developed special chips that dramatically accelerate popular language models. Anyone can now try this out with the popular Mixtral, Mistral and Llama 2 models on the Groq website.

Groq put to the test: GenAI models 10 to 20 times faster

In our test, we asked the Mixtral 8x7B 32K model to draft a marketing concept. The model produced 500 words (3,700 characters) with almost no waiting time: the complete concept was ready after just 4 seconds, and the text was streamed live as it was generated.

You can try Groq live on its website without registering (tip: do it!).

Result: The marketing concept generated by Mixtral

Groq’s performance with the Mixtral model: 1.7 seconds for 500 words

Although the whole request took 4 seconds, that figure also includes waiting time caused by other users accessing the service in parallel, as well as network latency. The model itself needed only 1.7 seconds to generate the 500 words (see "Inference Time", which is broken down into the time spent processing the input prompt and the time spent generating the output). This corresponds to an impressive throughput of 840 tokens per second (roughly 20 times faster than ChatGPT).
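The throughput figure is simply the number of generated tokens divided by the pure inference time, and a speedup is the ratio of two such rates. The following minimal Python sketch illustrates that arithmetic. The function names are illustrative. The 42 tokens-per-second baseline is a hypothetical placeholder, chosen only so that 840 / 42 reproduces the roughly 20x factor quoted above. It is not a measured ChatGPT value.

```python
# Minimal sketch of the throughput arithmetic; not Groq's own measurement method.


def tokens_per_second(output_tokens: int, inference_seconds: float) -> float:
    """Throughput = generated tokens / pure inference time.

    Queueing and network latency should be excluded, which is why the
    article uses the 1.7 s inference time rather than the 4 s total.
    """
    if inference_seconds <= 0:
        raise ValueError("inference time must be positive")
    return output_tokens / inference_seconds


def speedup(fast_rate: float, baseline_rate: float) -> float:
    """How many times faster one generation rate is than another."""
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return fast_rate / baseline_rate


def generation_seconds(output_tokens: int, rate: float) -> float:
    """Time needed to generate a given number of tokens at a fixed rate."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return output_tokens / rate


if __name__ == "__main__":
    GROQ_RATE = 840.0     # tokens/s, as measured in the test above
    BASELINE_RATE = 42.0  # tokens/s, HYPOTHETICAL placeholder for ChatGPT

    print(f"speedup: {speedup(GROQ_RATE, BASELINE_RATE):.0f}x")  # prints: speedup: 20x
    for rate in (GROQ_RATE, BASELINE_RATE):
        print(f"1,000 tokens at {rate:.0f} tok/s: {generation_seconds(1000, rate):.1f} s")
```

At the hypothetical baseline, 1,000 tokens would take about 23.8 seconds instead of about 1.2 seconds. That gap is what makes the difference noticeable in everyday use.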

The advantages of accelerated GenAI models

The advantages are obvious: a fast LLM enables significantly faster text creation, translation, code generation or simply research. We estimate that productivity on typical tasks can increase by a factor of 2 to 3. The gains are even greater when LLMs are used programmatically via the API, because there the full throughput of 500 tokens per second can be exploited.

Which language models does Groq support?

The following open source AI models can currently be tested directly on the Groq website free of charge and without registration:

- Mixtral 8x7B 32K (considered “almost as good” as GPT-4)

- Mistral 7B 8K (roughly comparable to GPT-3.5-Turbo)

- Llama 2 70B 4K (Meta’s open-source model)

Groq has thus made some of the most promising open-source LLMs currently available even better: they now far exceed the speed of GPT-4, currently the leading language model.

Groq is not limited to these models, however. Thanks to the high throughput and low latency of its chip design, it can in principle accelerate other models and even games.

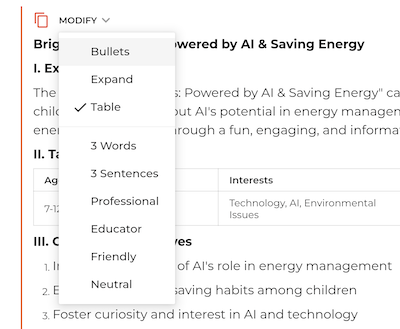

Another feature on the Groq website: Output results as a table

Anyone using these powerful, accelerated text AIs free of charge on the Groq website will also find another practical feature: you can quickly adjust the tone of the output, have it summarized in three sentences or, particularly handy, reformat it as a table.

Example: Output of the generated content as tables

Conclusion and outlook: AI will be extremely fast in 2024

Groq makes working with AI even better. Who hasn’t experienced it: the AI doesn’t return the desired result. With Groq’s acceleration this is no longer a problem, because the generated text can be corrected and refined within a few seconds.

In parallel, OpenAI is working to accelerate its popular language model with chips of its own, and NVIDIA is continuously increasing the performance of its leading AI chips. We expect the popular ChatGPT and Microsoft’s Copilot to become just as fast over the course of 2024.

The AI race has literally picked up speed once again thanks to technologies such as Groq, which will significantly drive the adoption and use of AI in everyday life.
