Another week has passed and once again there was exciting news in the field of AI. This week's highlights include Google Lumiere, the Midjourney V6 update and new AI features in Google Chrome.

OpenAI’s ChatGPT beta: A leap towards multifunctional AI assistants

OpenAI has introduced a beta feature for ChatGPT that allows multiple GPTs to be integrated into a single chat window. By typing the “@” sign, users can call on a specific GPT, which then responds within the ongoing conversation. The feature reflects OpenAI’s push to develop ChatGPT into a versatile personal assistant. It also allows different GPT personas, such as chatbots imitating Donald Trump and Joe Biden, to interact with each other.

| Key point | Details |
|---|---|
| Function | Beta feature in ChatGPT to integrate multiple GPTs in one chat window |
| Mechanism | Activation of specific GPTs using the “@” sign |
| Example | Interaction between simulated chatbots (e.g. Donald Trump and Joe Biden) |
| Goal of OpenAI | Development of ChatGPT into a universal, personalized assistant |

Link: https://the-decoder.de/chatgpts-neueste-funktion-ist-openais-naechster-schritt-hin-zum-allzweck-assistenten/
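
Conceptually, the “@” mechanism is a form of message routing: the mention decides which GPT handles the text. The following minimal sketch illustrates that idea under stated assumptions; it is not OpenAI’s implementation. The GPT names, the `route` function and the canned replies are hypothetical, and a real GPT would call a language model instead of returning a fixed string.

```python
import re

# Hypothetical GPTs: each name maps to a function that produces a reply.
# These stand-ins are illustrative only; they are not OpenAI's API.
GPTS = {
    "translator": lambda text: f"[translator] French version of: {text}",
    "summarizer": lambda text: f"[summarizer] Summary of: {text}",
}

MENTION = re.compile(r"@(\w+)")


def route(message: str, default: str = "chatgpt") -> tuple[str, str]:
    """Send the message to the first mentioned GPT that exists, else to the default assistant."""
    for name in MENTION.findall(message):
        if name in GPTS:
            # Remove the mention itself before passing the text on.
            text = message.replace(f"@{name}", "", 1).strip()
            return name, GPTS[name](text)
    # No known GPT was mentioned: the main assistant answers.
    return default, f"[{default}] {message}"


print(route("@summarizer Meeting notes from Monday"))
# ('summarizer', '[summarizer] Summary of: Meeting notes from Monday')
```

Unknown mentions such as `@someone` simply fall through to the default assistant, which keeps the sketch predictable.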

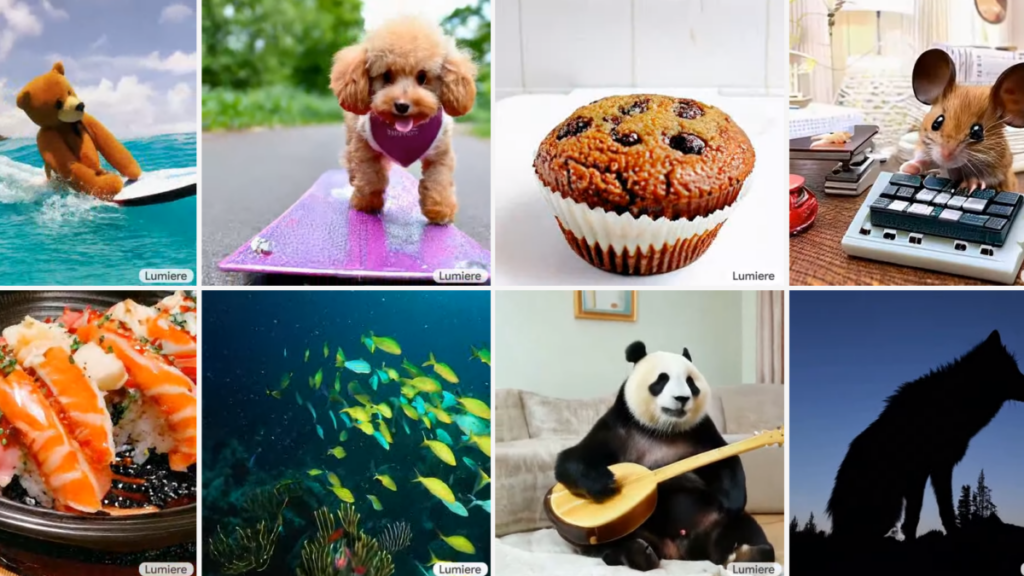

Lumiere by Google: Breakthrough in generative AI for realistic videos

Google has presented Lumiere, a text-to-video (T2V) diffusion model designed to raise the bar for AI video generation. Its Space-Time U-Net (STUNet) architecture enables Lumiere to create high-quality videos with coherent motion. Unlike previous models, which rely on a cascade that first generates distant keyframes and then fills in the frames between them, Lumiere generates the entire video sequence in a single pass, resulting in more realistic movement. The model was trained on 30 million videos with text captions and delivers strong results in comparisons with other methods.

| Key point | Details |
|---|---|
| Project | Lumiere from Google |
| Model type | Text-to-video (T2V) diffusion model |
| Special feature | Space-Time U-Net (STUNet) architecture for coherent motion and high quality |
| Training data | 30 million videos with associated text captions |
| Video properties | 80 frames at 16 frames per second (5-second videos) |

Link: https://the-decoder.de/lumiere-google-zeigt-neue-generative-ki-fuer-realistische-videos/
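
The video properties above fit together: clip length is the frame count divided by the frame rate. A minimal check in Python (the helper name is our own, for illustration only):

```python
def clip_duration_seconds(num_frames: int, fps: float) -> float:
    """Length of a clip in seconds when num_frames frames are played at fps frames per second."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


# Lumiere's reported output: 80 frames at 16 frames per second.
print(clip_duration_seconds(80, 16))  # 5.0
```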

Midjourney’s V6 update: New dimensions in AI-driven image processing

Midjourney has released an update for V6 that introduces the Pan, Zoom and Vary (Region) functions. These functions enable more coherent image editing with less repetition. The Pan function combines panning and zooming and is compatible with Upscale, Vary (Region) and Remix. The update also opens Midjourney’s Alpha website, where images can be generated directly, to more users: anyone who has created at least 5,000 images. In addition, a new feedback feature is intended to help guide development work.

| Key point | Details |
|---|---|
| Update | Midjourney’s V6 update with new image editing functions |
| New functions | Pan, Zoom and Vary (Region) for improved image composition |
| Pan function | Combination of pan and zoom, compatible with Upscale, Vary (Region) and Remix |
| Website access | Alpha website opened to more active users (at least 5,000 images created) |
| Feedback feature | New feature to improve development work based on user feedback |

Link: https://the-decoder.de/midjourneys-v6-update-bringt-pan-zoom-vary-und-breiteren-website-zugang/

Meta-prompting: Boosting the logical performance of large language models

Meta-prompting, developed by researchers at Stanford University and OpenAI, is a technique that improves the performance of large language models on logical tasks. A central “conductor” model breaks complex tasks down into smaller, more manageable parts and hands them to specialized “expert” models, which are instances of the same language model given tailored instructions. In experiments with GPT-4, this approach achieved better results than conventional prompting methods, especially on logic-heavy challenges.

| Key point | Details |
|---|---|
| Development | By researchers at Stanford University and OpenAI |
| Method | Meta-prompting to improve the performance of large language models |
| Function | Decomposition of complex tasks into smaller parts for expert models |
| Area of application | Particularly effective for logical tasks |
| Results | Outperforms conventional prompting methods in experiments with GPT-4 |

Link: https://the-decoder.de/meta-prompting-kann-die-logik-leistung-grosser-sprachmodelle-verbessern/
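
In meta-prompting, a single language model plays two roles: a “conductor” that plans and delegates, and “experts” that are fresh instances of the same model with their own instructions. The sketch below shows that control loop under clear assumptions: the prompt wording, the `EXPERT`/`FINAL` reply format and the `scripted_llm` stub are hypothetical stand-ins, not the researchers’ actual prompts. In practice `llm` would wrap a call to a model such as GPT-4.

```python
from typing import Callable

# Any function mapping a prompt string to a completion string can act as the model.
LLM = Callable[[str], str]


def meta_prompt(task: str, llm: LLM, max_rounds: int = 5) -> str:
    """Conductor loop: the model plans, consults 'experts' (itself, freshly prompted), then answers."""
    history = [f"Task: {task}"]
    for _ in range(max_rounds):
        # The conductor sees the whole history and either consults an expert or finishes.
        decision = llm(
            "You are the conductor. Reply 'EXPERT <role>: <instruction>' to consult "
            "an expert, or 'FINAL: <answer>' when done.\n" + "\n".join(history)
        )
        if decision.startswith("FINAL:"):
            return decision.removeprefix("FINAL:").strip()
        role, _, instruction = decision.removeprefix("EXPERT ").partition(":")
        # Each expert is the same model with a fresh context: it sees only its instruction.
        answer = llm(f"You are {role.strip()}. {instruction.strip()}")
        history.append(f"{role.strip()} said: {answer}")
    return "No final answer within the round limit."


def scripted_llm(prompt: str) -> str:
    """Toy stand-in for a real model so the loop can run offline (hypothetical)."""
    if prompt.startswith("You are the conductor"):
        if "Expert Mathematician said" in prompt:
            return "FINAL: 42"
        return "EXPERT Expert Mathematician: compute 6 * 7"
    return "6 * 7 = 42"


print(meta_prompt("What is 6 * 7?", scripted_llm))  # 42
```

Keeping expert contexts separate from the conductor’s history is the design choice that lets each expert focus on one narrow subtask.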

Longer thought processes: A key to improving language models

A study shows that chain-of-thought (CoT) prompts with longer reasoning chains can significantly improve the performance of large language models such as GPT-4, even when those chains contain incorrect information. Chain-of-thought prompting improves the reasoning of models by breaking complex problems down into explicit, more detailed intermediate steps. Surprisingly, the results indicate that the length of the reasoning chain matters more than the correctness of each individual step.

| Key point | Details |
|---|---|
| Study finding | Longer chains of thought improve language model performance |
| Influence | Length of the reasoning chain matters more than the accuracy of individual steps |
| Application | Particularly effective for complex problem solving |
| Models | Effective with large language models such as GPT-4 |

Link: https://the-decoder.de/prompt-engineering-laengere-chain-of-thoughts-verbessern-die-leistung-von-sprachmodellen/
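
The study’s exact prompts are not reproduced here. As a hedged illustration, the hypothetical helper below builds a few-shot chain-of-thought prompt whose worked example contains either a short or a longer reasoning chain; according to the finding summarized above, the longer variant tends to help. The worked example is the well-known tennis-ball problem from the original chain-of-thought paper; the muffin question is made up.

```python
def build_cot_prompt(question: str, example_question: str,
                     example_steps: list[str], example_answer: str) -> str:
    """Few-shot chain-of-thought prompt: one worked example, then the new question."""
    lines = [f"Q: {example_question}", "A: Let's think step by step."]
    # Each reasoning step gets its own numbered line; more steps = a longer chain.
    lines += [f"Step {i}: {step}" for i, step in enumerate(example_steps, start=1)]
    lines += [f"So the answer is {example_answer}.", "", f"Q: {question}", "A: Let's think step by step."]
    return "\n".join(lines)


example_q = ("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
             "Each can has 3 tennis balls. How many tennis balls does he have now?")
short_chain = ["5 + 2 * 3 = 11."]
long_chain = [
    "Roger starts with 5 tennis balls.",
    "He buys 2 cans with 3 balls each, which is 2 * 3 = 6 new balls.",
    "Adding them to what he had gives 5 + 6 = 11 balls.",
]
new_q = "A baker has 4 trays of 6 muffins and sells 9. How many muffins are left?"
print(build_cot_prompt(new_q, example_q, long_chain, "11"))
```

The resulting string would be sent to the model as-is; only the number and detail of the example steps differ between the short and long variants.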

Google’s Gemini Pro: A new era for Bard at GPT-4 level

Google has unveiled a new, more powerful Gemini Pro model for its chatbot Bard, which performs on par with GPT-4 in human evaluations. The model immediately took second place on the leaderboard of the independent Chatbot Arena benchmark, just behind GPT-4 Turbo. Google also plans to release Gemini Ultra soon, which is expected to outperform this Gemini Pro-scale model.

| Key point | Details |
|---|---|
| Model | Gemini Pro for Google’s Bard |
| Performance | Comparable with GPT-4 |
| Ranking | Second place in the Chatbot Arena |
| Future update | Introduction of Gemini Ultra planned |

Link: https://the-decoder.de/google-veroeffentlicht-neues-bard-gemini-modell-das-auf-gpt-4-niveau-liegen-koennte/
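
Chatbot Arena ranks models from anonymous head-to-head votes by users, and its leaderboard has reported Elo-style ratings. The following minimal sketch shows a standard online Elo update for a single vote. The K-factor of 32 and the starting ratings are assumptions, and the Arena’s actual computation may differ (it has also fitted a Bradley-Terry model over all votes at once).

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, a_won: bool, k: float = 32.0) -> tuple[float, float]:
    """Return the new (rating_a, rating_b) after one head-to-head vote."""
    score_a = 1.0 if a_won else 0.0
    # A gains exactly what B loses; an upset moves ratings more than an expected win.
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


# Two models start level; a user prefers model A's answer.
print(elo_update(1000, 1000, a_won=True))  # (1016.0, 984.0)
```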

OpenAI’s GPT-4: More powerful and less expensive

OpenAI has released an improved GPT-4 model (gpt-4-0125-preview) that works more efficiently and reduces the so-called “laziness”, in which the model gave incomplete answers. OpenAI is also lowering prices for GPT-3.5 Turbo and introducing two new embedding models: text-embedding-3-small and text-embedding-3-large. New API key management tools give developers more control over and insight into their API usage.

| Key point | Details |
|---|---|
| Model improvement | GPT-4 (gpt-4-0125-preview), more efficient and with reduced “laziness” |
| Price reduction | Lower prices for GPT-3.5 Turbo |
| New embedding models | text-embedding-3-small and text-embedding-3-large |
| API management tools | New tools for better control and overview of API usage |

Link: https://the-decoder.de/openai-stellt-verbessertes-gpt-4-modell-vor-und-senkt-die-api-preise/
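
Embedding models such as text-embedding-3-small turn text into vectors of numbers (with OpenAI’s Python SDK, via `client.embeddings.create(model="text-embedding-3-small", input=...)`). A common way to use these vectors is to compare texts by cosine similarity. The minimal sketch below assumes the vectors have already been fetched; the toy three-dimensional vectors are made up for illustration, and real embeddings have far more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up toy vectors standing in for the embeddings of three short texts.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
invoice = [0.0, 0.1, 0.9]
print(cosine_similarity(cat, kitten) > cosine_similarity(cat, invoice))  # True
```

Texts with related meanings should point in similar directions, so their cosine similarity is higher than that of unrelated texts.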

Nvidia’s RTX Video HDR: AI-powered SDR-to-HDR video conversion

Nvidia has introduced RTX Video HDR, an AI feature that converts Standard Dynamic Range (SDR) video to High Dynamic Range (HDR) video. It works in combination with RTX Video Super Resolution and requires an HDR10-compatible monitor. The feature is available in Chromium-based browsers and requires the January Studio driver as well as Windows HDR to be enabled.

| Key point | Details |
|---|---|
| Product | Nvidia RTX Video HDR |
| Feature | Converts SDR videos into HDR videos |
| Compatibility | Requires an HDR10-compatible monitor |
| Availability | In Chromium-based browsers |
| Additional requirements | January Studio driver and Windows HDR enabled |

Link: https://the-decoder.de/nvidia-rtx-video-hdr-wandelt-sdr-videos-mit-ki-in-hdr-videos-um/

Google Ads and Gemini chatbot: Revolutionizing the creation of search campaigns

Google Ads has integrated its Gemini AI model to streamline the creation of search campaigns through a chat-based workflow. The feature, currently available to English-speaking advertisers in the US and UK, helps them create campaign content such as ad copy and keywords more efficiently. Small businesses in particular benefit: according to Google, those using the feature are 42% more likely to publish search campaigns rated “Good” or “Excellent” for ad strength. In the near future, the feature will also suggest AI-generated images, marked with watermarks and metadata.

| Key point | Details |
|---|---|
| Integration | Gemini AI model in Google Ads |
| Feature | Chat-based creation of search campaigns |
| Availability | Currently for English-speaking users in the US and UK |
| Benefit | Small businesses 42% more likely to publish campaigns with “Good” or “Excellent” ad strength |
| Future feature | AI-generated images with watermarks and metadata |

Link: https://the-decoder.de/mit-google-ads-kann-man-jetzt-suchkampagnen-mit-einem-gemini-chatbot-erstellen/

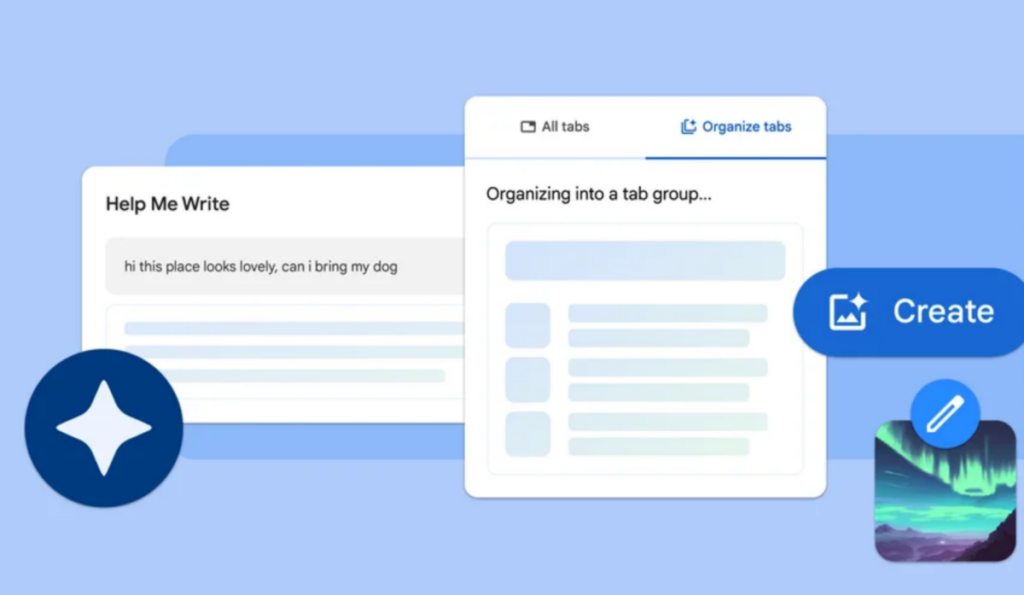

Chrome’s AI revolution: Tab auto-sorting and writing help for more efficient browsing

With the latest update (M121), Google Chrome introduces three new AI features: the Tab Organizer, AI-generated themes and a writing assistant. The Tab Organizer simplifies tab management by automatically grouping tabs based on their content, while AI-generated themes let users create personalized browser themes. The “Help me write” function assists users in composing text by providing AI-generated suggestions. With these features, Google aims to make browsing the web more convenient and efficient.

| Key point | Details |
|---|---|
| Chrome update | M121 with new AI functions |
| Tab Organizer | Automatic grouping of tabs based on content |
| AI-generated themes | Customized browser themes |
| Writing assistant | “Help me write” function for AI-generated text suggestions |

Link: https://the-decoder.de/ki-updates-fuer-chrome-bringen-auto-sortierung-fuer-tabs-und-schreibhilfen/

Google aims to lead AI development in 2024 – but there is still a long way to go

Google has set itself the goal of delivering the world’s most advanced, safe and responsible AI in 2024. This includes integrating AI into existing products such as business applications, Pixel smartphones and generative search, but the company has yet to launch a successful standalone AI product like ChatGPT. Challenges along the way include losing cloud business to Microsoft, whose cloud is growing faster thanks to its partnership with OpenAI, and the pressure that AI-generated spam is putting on the quality of Google Search.

| Key point | Details |
|---|---|
| Objective | Delivering the world’s most advanced AI in 2024 |
| Product integration | AI in existing products such as business applications and Pixel smartphones |
| Cloud business | Losing ground to Microsoft, boosted by its OpenAI partnership |
| Search quality | Under pressure from AI spam |

Link: https://the-decoder.com/google-aims-to-deliver-worlds-most-advanced-ai-in-2024-and-it-certainly-has-a-long-way-to-go/

RunwayML’s innovation: From images to videos with the Multi-Motion Brush

RunwayML has introduced the Multi-Motion Brush, a video editing tool that turns static images into animated videos. Users can animate up to five objects in an image individually, giving them finer creative control. The tool is designed to be easy to use and broadens the options for creating visual content.

| Key point | Details |
|---|---|
| Tool | Multi-Motion Brush from RunwayML |
| Function | Transforms static images into animated videos |
| Object animation | Up to five objects per image can be animated |
| Ease of use | Intuitive operation for a wide range of applications |

Link: https://www.analyticsvidhya.com/blog/2024/01/runwayml-introduces-a-multi-motion-brush-to-turn-images-into-videos/