Meta’s Llama 4 sets new standards in artificial intelligence with its innovative mixture-of-experts architecture, native multimodality and extended context windows.

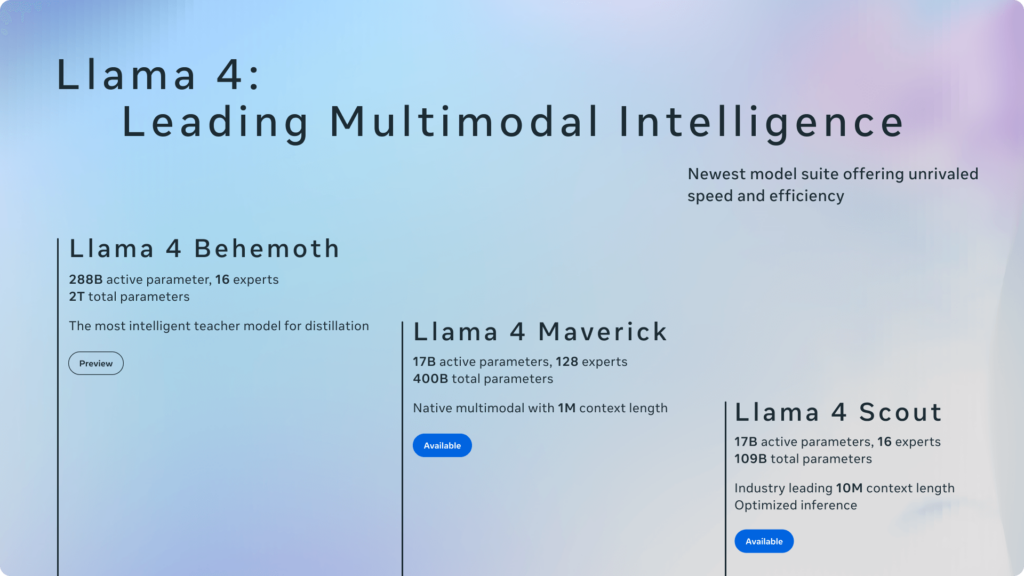

Meta’s latest AI family – consisting of the Scout and Maverick models and the as yet unreleased Behemoth – addresses three key challenges of modern AI systems: computational efficiency, multimodal processing and context limitations. With a Mixture-of-Experts (MoE) architecture, Llama 4 activates only a fraction of its parameters for each token (about 4% in Maverick, about 16% in the smaller Scout), drastically reducing computational costs while keeping pace with significantly larger models in performance.
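The routing idea behind this sparsity can be illustrated with a toy sketch in plain Python. It is loosely modeled on Meta's description of Maverick, where each token is sent to one shared expert plus a single routed expert picked by a learned router. The function names, expert count, router weights and vector sizes are hypothetical toy values, not Llama 4's actual implementation.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Vector) -> Vector:
    # Numerically stable softmax over the router's scores.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(token: Vector, router: List[Vector],
              routed_experts: List[Expert], shared_expert: Expert) -> Tuple[Vector, int]:
    # Router: one score per routed expert (dot product with that expert's router row).
    logits = [sum(w * x for w, x in zip(row, token)) for row in router]
    gates = softmax(logits)
    # Top-1 routing: only the highest-scoring routed expert runs for this token.
    chosen = max(range(len(gates)), key=lambda i: gates[i])
    routed_out = routed_experts[chosen](token)
    shared_out = shared_expert(token)  # the shared expert runs for every token
    # Output = shared expert + gate-weighted output of the chosen routed expert.
    return [s + gates[chosen] * r for s, r in zip(shared_out, routed_out)], chosen


# --- Toy usage (hypothetical sizes and weights) ---
calls = [0, 0, 0, 0]  # how often each routed expert actually runs


def make_expert(idx: int) -> Expert:
    def expert(x: Vector) -> Vector:
        calls[idx] += 1
        return [(idx + 1) * v for v in x]  # stand-in for a feed-forward block
    return expert


experts = [make_expert(i) for i in range(4)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
output, chosen = moe_layer([2.0, 0.5], router, experts, shared_expert=lambda x: list(x))
print(chosen, calls)  # 0 [1, 0, 0, 0]: only one of four routed experts did any work
```

Only the chosen expert's weights are touched for this token, which is where the compute savings of an MoE model come from; a real model applies this per token, per MoE layer, with far larger experts.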

The Maverick variant activates only 17 billion of its 400 billion parameters per token, which cuts per-token computation by roughly 95% compared with a dense model of the same size.
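The roughly 95% figure follows from simple arithmetic. A minimal sketch, assuming the common rule of thumb that a forward pass costs about 2 FLOPs per participating parameter per token, and ignoring attention and routing overhead:

```python
# Rule-of-thumb estimate of per-token forward-pass compute, MoE versus dense.
# Assumption: ~2 FLOPs (one multiply, one add) per parameter that participates in
# a token's computation; attention and routing overhead are ignored.

TOTAL_PARAMS = 400e9   # Maverick's total parameter count (from the article)
ACTIVE_PARAMS = 17e9   # parameters active per token (from the article)


def forward_flops_per_token(params: float) -> float:
    return 2.0 * params


dense_flops = forward_flops_per_token(TOTAL_PARAMS)   # hypothetical dense 400B model
moe_flops = forward_flops_per_token(ACTIVE_PARAMS)    # MoE: only active experts run

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
compute_saving = 1.0 - moe_flops / dense_flops

print(f"active fraction: {active_fraction:.2%}")           # active fraction: 4.25%
print(f"per-token compute saving: {compute_saving:.2%}")   # per-token compute saving: 95.75%
```

Under these assumptions about 4% of Maverick's weights take part in each token, a saving of just under 96% relative to a hypothetical dense 400-billion-parameter model. Note that all 400 billion parameters still have to be held in memory; the saving is in compute, not storage.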

Particularly noteworthy is Llama 4’s approach to multimodal processing. Unlike earlier models that process text and image data in separate components, Llama 4 uses early fusion: the different modalities are integrated at the input level, so text and image tokens pass through the same model backbone. Combined with a MetaCLIP-based vision encoder and attention that spans both text and image tokens, this lets the model handle complex vision-language tasks with remarkable accuracy.
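To make "early fusion" concrete, here is a toy sketch in plain Python: image patch features are linearly projected to the text embedding width and placed in the same token sequence as the text embeddings, so a single transformer backbone processes both. The function names, dimensions and projection matrix are hypothetical; the real MetaCLIP-based encoder and projection layers are far larger and are not reproduced here.

```python
from typing import List, Tuple

Vector = List[float]


def project(vec: Vector, weight: List[Vector]) -> Vector:
    # Linear map: one row of `weight` per output dimension.
    return [sum(w * v for w, v in zip(row, vec)) for row in weight]


def early_fusion_sequence(text_ids: List[int], embedding_table: List[Vector],
                          image_patches: List[Vector],
                          vision_projection: List[Vector]) -> List[Tuple[str, Vector]]:
    # Image patch features (as a vision encoder would produce) are projected to the
    # text embedding width, so both modalities become tokens of the same size.
    image_tokens = [("image", project(p, vision_projection)) for p in image_patches]
    # Text token ids are looked up in the language model's embedding table.
    text_tokens = [("text", embedding_table[i]) for i in text_ids]
    # Early fusion: one combined sequence enters the shared transformer backbone,
    # so every layer can attend across image and text tokens.
    return image_tokens + text_tokens


# --- Toy usage (hypothetical dimensions) ---
D_MODEL = 3
embedding_table = [[0.1 * i] * D_MODEL for i in range(10)]
patches = [[1.0, 0.0], [0.0, 1.0]]             # two 2-dim patch features
proj = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]    # maps 2 dims -> D_MODEL dims
sequence = early_fusion_sequence([4, 7], embedding_table, patches, proj)
print([kind for kind, _ in sequence])  # ['image', 'image', 'text', 'text']
```

In a late-fusion design, by contrast, the image features would be processed by a separate branch and only merged into the language model at selected layers.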

The models were pre-trained on more than 30 trillion tokens of text, image and video data and saw up to 48 images per input during pre-training; Meta reports good results with up to eight images after post-training. This is particularly relevant for applications in image analysis and document-based queries.

The Scout model also stands out with a 10-million-token context window, made possible by the iRoPE architecture: it interleaves attention layers that use rotary position embeddings (RoPE) with layers that use no positional embeddings at all. This lets the model process very large documents while capturing both local and global context. Benchmarks show 98% retrieval accuracy on codebases of 10 million tokens.
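A minimal sketch of the two ingredients behind iRoPE, under stated assumptions: standard rotary position embeddings, which rotate pairs of query/key dimensions by position-dependent angles, and an interleaving rule in which some attention layers skip positional encoding. The every-fourth-layer pattern, the `nope_interval` parameter and the function names are illustrative assumptions, not Meta's actual configuration, and other long-context techniques are omitted.

```python
import math
from typing import List

Vector = List[float]


def rope(vec: Vector, position: int, base: float = 10000.0) -> Vector:
    # Rotary position embedding: rotate each (even, odd) pair of dimensions by an
    # angle equal to position * frequency, with lower frequencies for later pairs.
    d = len(vec)
    if d % 2:
        raise ValueError("RoPE needs an even vector dimension")
    out = list(vec)
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out[i] = vec[i] * c - vec[i + 1] * s
        out[i + 1] = vec[i] * s + vec[i + 1] * c
    return out


def uses_rope(layer_index: int, nope_interval: int = 4) -> bool:
    # Hypothetical interleaving rule: every `nope_interval`-th layer is a "NoPE"
    # layer that applies no positional encoding; all other layers apply RoPE.
    return (layer_index + 1) % nope_interval != 0


def encode(vec: Vector, position: int, layer_index: int) -> Vector:
    # Apply RoPE to a query/key vector only in layers that use it.
    return rope(vec, position) if uses_rope(layer_index) else list(vec)


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


# --- Toy usage ---
q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]
near = dot(rope(q, 5), rope(k, 3))        # query at 5, key at 3 (distance 2)
far = dot(rope(q, 102), rope(k, 100))     # same distance, much later in the text
print(abs(near - far) < 1e-9)             # True: score depends only on distance
print([uses_rope(i) for i in range(8)])   # [True, True, True, False, True, True, True, False]
```

The usage check shows why RoPE suits long inputs: the attention score between a query and a key depends only on their relative distance, not on where the pair sits in the sequence. The NoPE layers, which see no position signal at all, are the part of the design intended to help the model generalize to lengths beyond those seen in training.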

Summary:

- Llama 4 uses a mixture-of-experts architecture that activates only a small fraction of its parameters per token (17 billion of 400 billion in Maverick), cutting per-token compute by roughly 95% compared with a dense model of the same size

- Native multimodal processing through early fusion; the models were pre-trained with up to 48 images per input

- Scout's 10-million-token context window is roughly 80 times larger than GPT-4 Turbo's 128,000 tokens and enables the analysis of large documents

- Benchmark comparisons show Maverick ahead of GPT-4o and Gemini 2.0 Flash on a broad range of tests, with energy consumption reportedly reduced by 40%

- Early enterprise integrations with Snowflake and Cloudflare demonstrate practical use cases in document analysis and real-time image processing

Source: Meta