OpenAI’s new GPT-4.1 model family sets new standards in the AI world with a 1 million token context window and significant improvements in coding and instruction following.

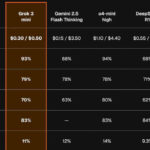

OpenAI’s latest release marks a significant step forward in AI development. The GPT-4.1 model family consists of the flagship GPT-4.1, the optimized GPT-4.1 mini and the ultra-efficient GPT-4.1 nano, and offers a context window roughly eight times the size of that of its predecessor, GPT-4o. The models can process up to 1,047,576 tokens, which corresponds to around 700,000 words or 3,000 pages of text. This positions OpenAI in direct competition with Google’s Gemini 2.5 Pro, which also supports a 1 million token context.
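
The figures in this paragraph can be sanity-checked with a few lines of arithmetic. The sketch below is only a rough estimate: the 128,000-token window for GPT-4o is an assumption not stated in this article, and the helper `fits_in_context` is a hypothetical name introduced for illustration. Real token counts depend on the tokenizer and the text, so the derived tokens-per-word ratio is an approximation.

```python
# Figures quoted in the article.
CONTEXT_TOKENS = 1_047_576
APPROX_WORDS = 700_000
APPROX_PAGES = 3_000

# Assumption (not from the article): GPT-4o's context window is 128,000 tokens.
GPT_4O_CONTEXT_TOKENS = 128_000

# Ratios implied by the article's own numbers.
tokens_per_word = CONTEXT_TOKENS / APPROX_WORDS
words_per_page = APPROX_WORDS / APPROX_PAGES
growth_factor = CONTEXT_TOKENS / GPT_4O_CONTEXT_TOKENS


def fits_in_context(word_count: int, ratio: float = tokens_per_word) -> bool:
    """Roughly estimate whether a text of `word_count` words fits in the window.

    Hypothetical helper: it multiplies words by an average tokens-per-word
    ratio instead of running a real tokenizer.
    """
    return word_count * ratio <= CONTEXT_TOKENS


if __name__ == "__main__":
    print(f"Implied tokens per word: {tokens_per_word:.2f}")
    print(f"Implied words per page:  {words_per_page:.0f}")
    print(f"Growth over GPT-4o:      {growth_factor:.2f}x")
    print(f"500,000-word corpus fits: {fits_in_context(500_000)}")
```

Under these assumptions the ratio comes out slightly above eight, which is consistent with the "eightfold" description.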

Internal testing shows 72% accuracy on the Video-MME benchmark for long-context understanding without subtitles, an absolute improvement of 6.7 percentage points over previous models. The enhanced context window architecture uses a novel attention mechanism that dynamically prioritizes relevant information and suppresses noise, which is particularly effective in scenarios that require cross-referencing distant sections of content.

Improved coding capabilities and instruction following

GPT-4.1 achieves breakthrough results in software engineering benchmarks with 54.6% on the SWE-bench Verified test suite, an absolute improvement of 21.4 percentage points over GPT-4o and 26.6 percentage points over GPT-4.5. In real-world testing, the model successfully solves 68% of GitHub issues requiring cross-file changes, compared to 47% in previous iterations.

Instruction following has been improved through a redesigned training paradigm that emphasizes literal adherence to instructions while retaining creative problem-solving capabilities. GPT-4.1 achieves 38.3% accuracy on Scale’s MultiChallenge benchmark, an absolute improvement of 10.5 percentage points over GPT-4o in interpreting and executing complex instructions.
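
Because the benchmark gains above are absolute differences in score, the scores of the older models can be derived by subtraction. The following sketch does that arithmetic. The baseline values it prints are derived from the numbers quoted here rather than stated in the article, and the function names are illustrative.

```python
def implied_baseline(score: float, absolute_gain: float) -> float:
    """Score of the older model, given the new score and the absolute gain."""
    return round(score - absolute_gain, 1)


def relative_gain(score: float, absolute_gain: float) -> float:
    """Gain expressed as a percentage of the older model's score."""
    baseline = score - absolute_gain
    return round(absolute_gain / baseline * 100, 1)


# (benchmark, compared model, GPT-4.1 score, absolute gain) as quoted above.
RESULTS = [
    ("SWE-bench Verified", "GPT-4o", 54.6, 21.4),
    ("SWE-bench Verified", "GPT-4.5", 54.6, 26.6),
    ("MultiChallenge", "GPT-4o", 38.3, 10.5),
]

if __name__ == "__main__":
    for benchmark, model, score, gain in RESULTS:
        print(
            f"{benchmark}: {model} implied score {implied_baseline(score, gain)}%, "
            f"relative gain {relative_gain(score, gain)}%"
        )
```

The distinction matters when reading the summary: a gain of 21.4 points on SWE-bench Verified is a much larger relative improvement than "21.4% better" would suggest.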

Application areas and industry impact

The GPT-4.1 model family affects multiple stages of the software lifecycle. These include automated code migration with 85% accuracy when porting legacy systems to modern frameworks, CI/CD integration with real-time pipeline optimization suggestions that reduce build times by 40%, security audits with a 93% detection rate for OWASP Top 10 vulnerabilities, and context-aware API documentation generation with 89% usability ratings.

In enterprise knowledge management, the 1 million token context window enables novel applications such as legal review with 92% accuracy in detecting contract anomalies, automated literature searches covering over 10,000 papers, regulatory compliance with real-time audit trail generation, and meeting intelligence with 95% accuracy in extracting action items from hours of recordings.

Executive Summary

- GPT-4.1 provides a context window about eight times larger than GPT-4o's, at 1,047,576 tokens (approx. 700,000 words)

- Coding performance on SWE-bench Verified is 21.4 percentage points higher than GPT-4o and 26.6 percentage points higher than GPT-4.5

- Improved instruction following with 38.3% accuracy on the MultiChallenge benchmark for complex instructions

- Three model variants: GPT-4.1, GPT-4.1 mini and GPT-4.1 nano for different use cases

- 26% lower computing costs despite extended capabilities thanks to architectural optimizations

- Future-oriented development with a focus on multimodal extension and domain-specific models

Source: OpenAI