DeepSeek AI has released FlashMLA, an efficient decoding kernel for Multi-head Latent Attention (MLA), as a further step in optimizing how AI models run in practice. The open-source kernel was developed specifically for NVIDIA’s Hopper GPU architecture and is designed to speed up the serving of variable-length sequences, which is the typical situation when many requests of different lengths are decoded together.

Outstanding technical specifications

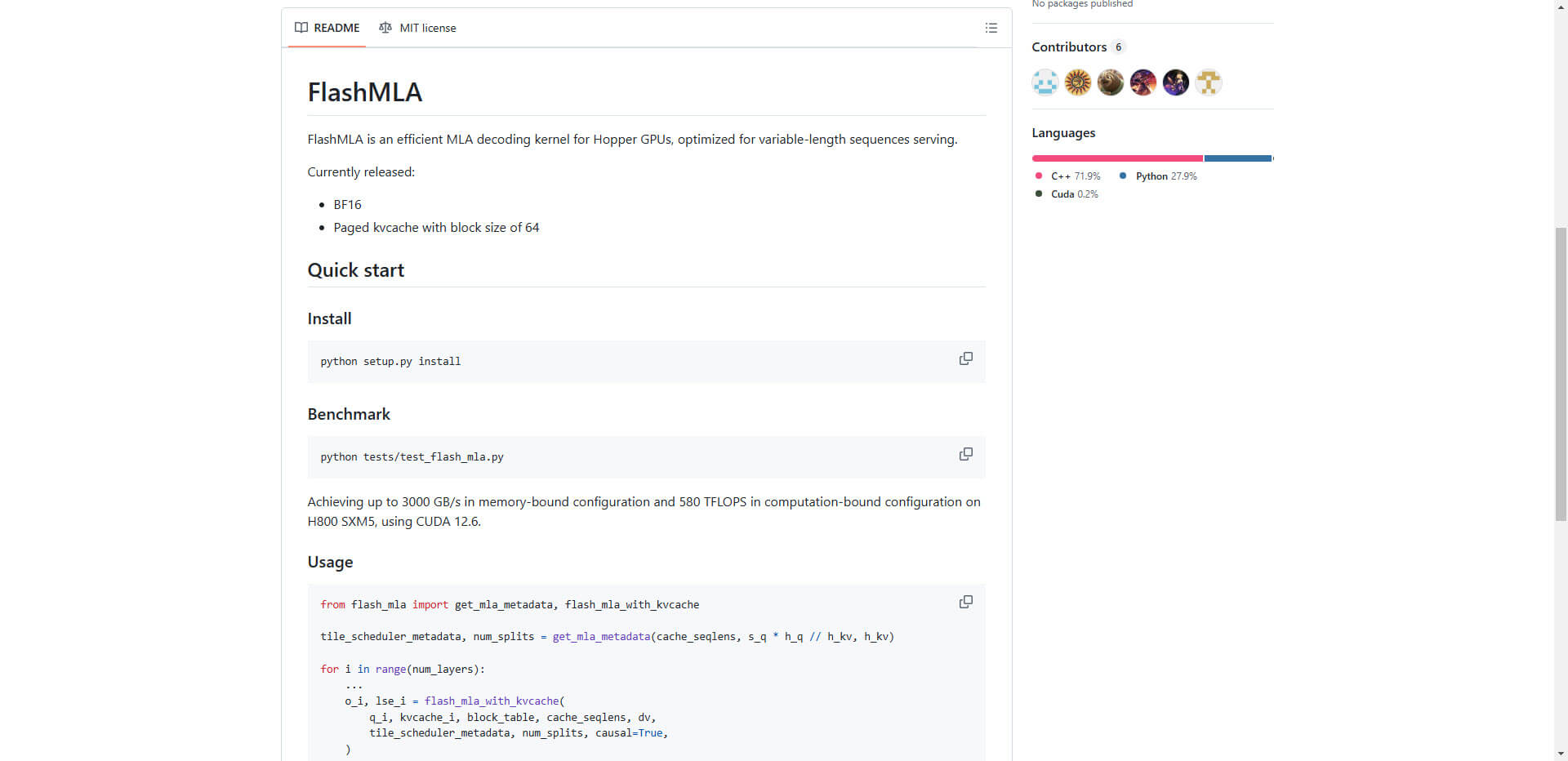

DeepSeek reports its benchmark figures on the NVIDIA H800 SXM5, a Hopper-generation GPU. In memory-bound configurations FlashMLA reaches up to 3000 GB/s of memory bandwidth, and in compute-bound configurations up to 580 TFLOPS. Its core features are support for BF16 precision and a paged key-value (KV) cache with a block size of 64. Instead of reserving one large contiguous buffer per sequence, a paged cache stores keys and values in fixed-size blocks of 64 tokens that are allocated on demand, so memory use tracks the actual length of each sequence.
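To make the block-based layout more concrete, here is a minimal pure-Python sketch of a paged KV cache with 64-token blocks. It assumes the common design popularized by vLLM's PagedAttention, in which a per-sequence block table maps logical block indices to physical blocks drawn from a shared pool. The class and method names (`PagedKVCache`, `append`, `lookup`, `free`) are hypothetical and are not FlashMLA's API; the real kernel operates on GPU tensors, not Python lists.

```python
# Minimal sketch of a paged KV cache (hypothetical names, not FlashMLA's API).
# Assumption: vLLM-style paging with a shared block pool and per-sequence block tables.

BLOCK_SIZE = 64  # tokens per block, the block size FlashMLA supports


class PagedKVCache:
    """Toy paged KV cache: fixed-size physical blocks drawn from a shared pool,
    plus a block table per sequence mapping logical blocks to physical ones."""

    def __init__(self, num_blocks, block_size=BLOCK_SIZE):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # physical blocks not in use
        # storage[physical_block][slot] holds the KV entry of one token
        self.storage = [[None] * block_size for _ in range(num_blocks)]
        self.block_tables = {}  # seq_id -> list of physical block ids
        self.seq_lens = {}      # seq_id -> number of cached tokens

    def append(self, seq_id, kv_entry):
        """Cache the KV entry of the next token of `seq_id`."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.seq_lens.get(seq_id, 0)
        if length % self.block_size == 0:
            # No block yet, or the last block is full: allocate one on demand.
            if not self.free_blocks:
                raise MemoryError("KV cache pool exhausted")
            table.append(self.free_blocks.pop(0))
        physical = table[length // self.block_size]
        self.storage[physical][length % self.block_size] = kv_entry
        self.seq_lens[seq_id] = length + 1

    def lookup(self, seq_id, pos):
        """Return the KV entry for token `pos`, translating logical -> physical."""
        if not 0 <= pos < self.seq_lens.get(seq_id, 0):
            raise IndexError(f"position {pos} not cached for {seq_id!r}")
        physical = self.block_tables[seq_id][pos // self.block_size]
        return self.storage[physical][pos % self.block_size]

    def free(self, seq_id):
        """Return all blocks of a finished sequence to the pool for reuse."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


if __name__ == "__main__":
    cache = PagedKVCache(num_blocks=8)
    # Decode two sequences of different lengths in an interleaved fashion.
    for t in range(100):
        cache.append("seq-a", f"a{t}")
        if t < 70:
            cache.append("seq-b", f"b{t}")
    print(cache.block_tables)         # {'seq-a': [0, 2], 'seq-b': [1, 3]}
    print(cache.lookup("seq-a", 70))  # a70
```

Because the two sequences grow in lockstep, their physical blocks end up interleaved in the pool, yet each block table still resolves positions correctly. At most one block per sequence is partially filled, so the wasted space per sequence stays below 64 token slots no matter how long the sequence grows.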

The kernel draws on ideas from FlashAttention 2 and 3 and from NVIDIA’s CUTLASS library, and it is intended for production use. Developers can install it and run the included tests from DeepSeek AI’s GitHub repository.

Linking hardware and software for optimized AI capabilities

By targeting NVIDIA Hopper GPUs specifically, FlashMLA is an example of close integration between hardware and software. Pairing specialized GPU architectures with software tailored to them is increasingly seen as a key strategy for keeping up with the pace and complexity of modern AI workloads. Projects like FlashMLA show that such tailored kernels can make the difference between marginal gains and large jumps in throughput.

Releasing the kernel openly promotes transparency and collaboration in AI research and reflects a broader move toward open-source development. In particular, it gives smaller teams and companies access to optimizations that would otherwise be available mainly to large technology providers.

Potential beyond raw performance

The significance of FlashMLA goes beyond benchmark numbers. Faster, more efficient inference lays the groundwork for applications in which real-time AI processing is critical, such as healthcare technology, autonomous driving and financial technology.

In addition, releasing the kernel as part of DeepSeek AI’s Open Source Week can be read as a commitment to open, ethical AI development. Making high-performance tools freely available reaches broader and more diverse developer communities, which encourages wider adoption and further development of AI software.

The most important facts about the release

- Optimized for NVIDIA Hopper GPUs: Up to 3000 GB/s memory bandwidth (memory-bound) and 580 TFLOPS (compute-bound) on the H800 SXM5.

- BF16 and paged KV cache support (block size 64): Shorter processing times for variable-length sequences.

- Open source on GitHub: Accessible to developers of all levels, promoting transparency and collaboration.

- Relevant applications: Real-time AI in areas such as healthcare, finance and autonomous systems.

- Improved hardware and software synergy: Tailored optimization for specialized hardware.

Source: GitHub