Google’s Gemini Live brings natural conversations, multimodal input and deep system integration to AI-based interaction on Android devices.

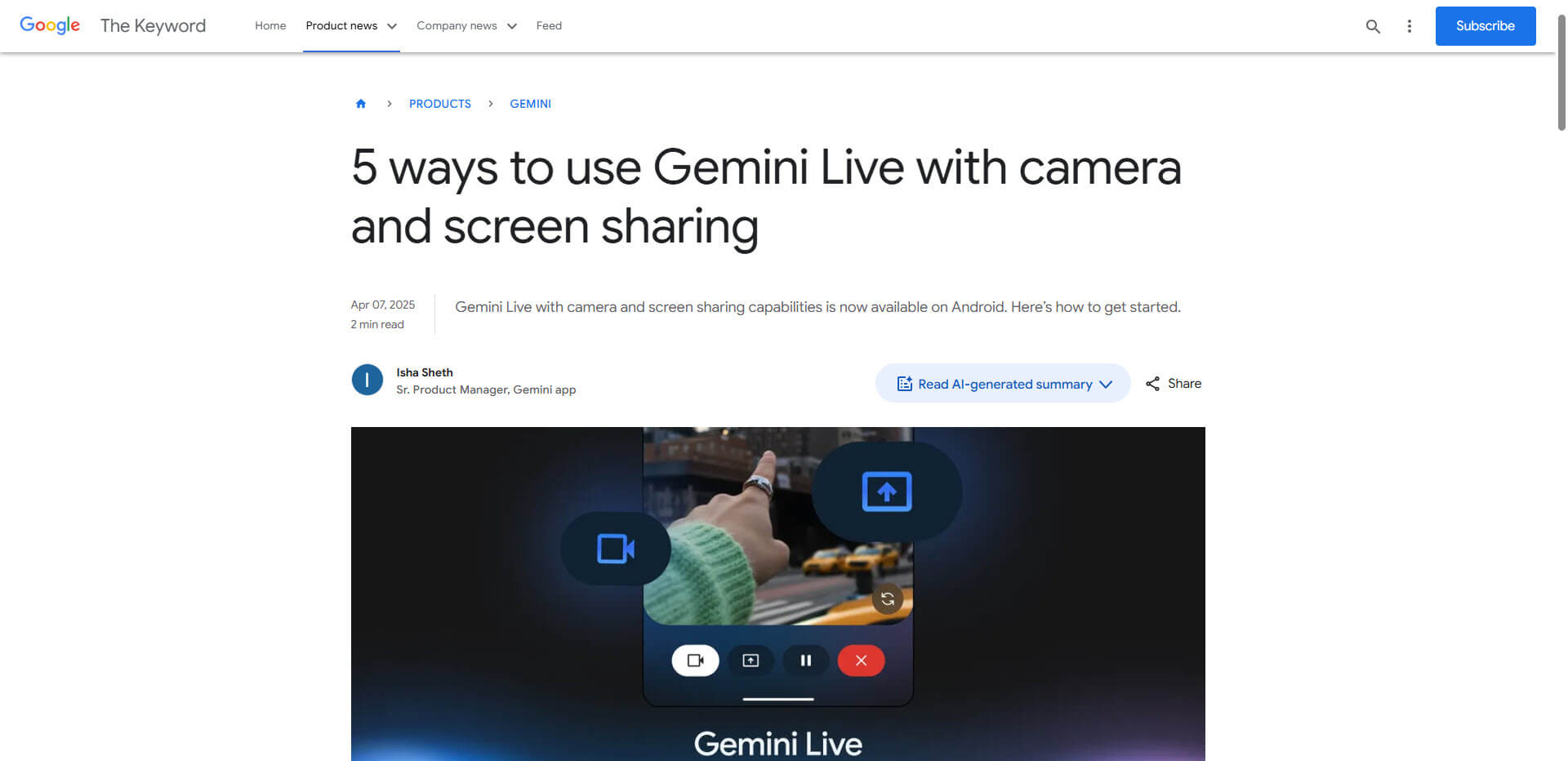

Gemini Live, Google’s latest addition to the Gemini ecosystem, changes how users interact with their Android devices. Unlike traditional voice assistants, it supports fluid conversations with natural pauses and contextual responses. It not only analyzes voice commands but also understands visual content through camera and screen sharing, which opens up new application scenarios.

Deep integration into the Android operating system allows Gemini Live to communicate with over 40 apps and automate complex workflows that span several tasks. Particularly impressive is its ability to coordinate actions across multiple applications with a single voice command, such as extracting a recipe from an email, adding the ingredients to a shopping list and playing matching music at the same time.

The first devices to exploit the full potential of Gemini Live are the Samsung Galaxy S25 series and Google Pixel 9 models. On these flagship smartphones, the AI uses dedicated Neural Processing Units for accelerated response times. Exclusive functions such as the analysis of screen content by holding down the side button or the real-time analysis of objects via the camera demonstrate the hardware-optimized implementation.

Of particular interest to creative professionals: Gemini Live acts as a digital brainstorming partner that supports designers in sketching, helps writers overcome writer’s block and even serves as a code assistant in Android Studio. It recognizes from context whether to make creative suggestions or solve technical problems. According to a recent survey, 86% of developers report a significant reduction in debugging effort thanks to context-sensitive error analysis.

Summary

- Gemini Live provides natural conversations with interruptibility and contextual understanding across multiple requests

- The technology handles multimodal input such as speech, images and screen content simultaneously

- Deep Android integration enables seamless collaboration with over 40 apps

- Special optimizations for Samsung Galaxy S25 and Google Pixel devices use dedicated NPU hardware

- Data protection is ensured through on-device processing and clear transparency indicators

- Future developments such as Project Astra will enable AR annotations and group collaboration

Source: Google Blog