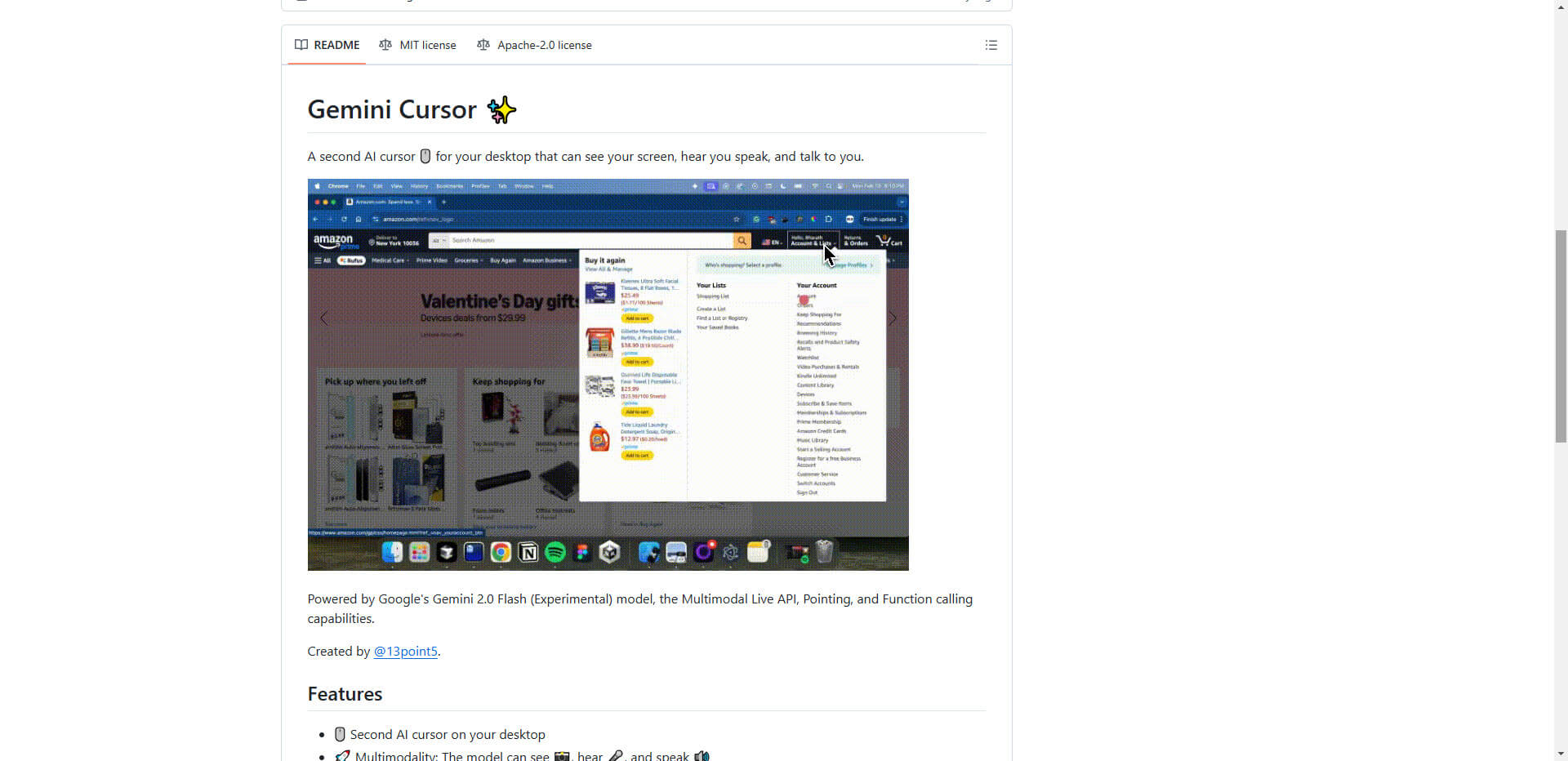

The integration of artificial intelligence into everyday workflows reaches a new level with the “Gemini Cursor”. This project, published on GitHub by @13point5, uses the Google Gemini 2.0 Flash API to add a second, AI-driven cursor to the desktop. The application can analyze content in real time and support users in complex scenarios, combining interactivity with efficiency.

Multimodality as the key to interaction

The Gemini Cursor is defined by its multimodal capabilities. It can analyze visual content, respond to voice commands and communicate via both text and voice. This combination makes digital content easier to access: users can ask for detailed explanations of complex diagrams, research documents or architectural plans. Particularly impressive is its usefulness for practical tasks such as navigating complex websites, which lets it help with everyday challenges such as e-commerce processes.

This is more than a technical feature: multimodal AI systems reflect the current industry-wide trend of pushing the boundaries of AI interaction. Models such as Google Gemini or OpenAI’s multimodal GPT-4 combine voice, image and, increasingly, video or other sensory input to give users practical solutions. The education sector in particular holds great potential, where the Gemini Cursor could serve as a real-time tutor, among other things.

Progress through innovation: applications of the Gemini API

In addition to the Gemini Cursor, the Google Gemini API ecosystem shows how far multimodal AI can be integrated into existing workflows. The “Gemini Project Assistant” illustrates how AI can be integrated into programming-based applications. Here, Electron, React and TypeScript form the basis of the software, which keeps it as customizable and flexible as possible.
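To make the idea of a multimodal request more concrete, here is a minimal TypeScript sketch of how a desktop app could combine a text question with a screenshot in one Gemini `generateContent` request body. It is not taken from the Gemini Cursor repository, which may well use a streaming interface instead. The helper names `buildScreenQuestion` and `endpointFor`, the model string and the placeholder image data are assumptions made for illustration.

```typescript
// Illustrative sketch only: these helpers are hypothetical and do not come
// from the Gemini Cursor project. Nothing is sent over the network here.

interface TextPart { text: string }
interface InlineDataPart { inline_data: { mime_type: string; data: string } }
type Part = TextPart | InlineDataPart;

interface GenerateContentRequest {
  contents: { role: "user"; parts: Part[] }[];
}

// Assumed model name, chosen because the article mentions Gemini 2.0 Flash.
const MODEL = "gemini-2.0-flash";

// Builds a request body that pairs a question with a base64-encoded image.
function buildScreenQuestion(
  question: string,
  screenshotBase64: string,
  mimeType: string = "image/png",
): GenerateContentRequest {
  if (question.trim() === "") {
    throw new Error("question must not be empty");
  }
  return {
    contents: [
      {
        role: "user",
        parts: [
          { text: question },
          { inline_data: { mime_type: mimeType, data: screenshotBase64 } },
        ],
      },
    ],
  };
}

// Returns the REST endpoint for a given model. The API key would be passed
// separately, for example in an `x-goog-api-key` request header.
function endpointFor(model: string): string {
  return `https://generativelanguage.googleapis.com/v1beta/models/${model}:generateContent`;
}

// Usage: the base64 string below is a placeholder, not a real screenshot.
const body = buildScreenQuestion("What does this diagram show?", "iVBORw0KGgo=");
console.log(endpointFor(MODEL));
console.log(JSON.stringify(body, null, 2));
```

In an Electron app, the screenshot would typically come from a screen capture step, and the body would be sent with an HTTP POST from the main process so that the API key never reaches the renderer.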

Another technical highlight is the low latency of the Gemini Cursor, which enables near-fluid real-time interactions. This not only improves the user experience, but could also change many professional areas, from research and communication to agile working models in companies. It remains open, however, how these models will be developed further to operate in even more data-rich and dynamic environments.

Challenges and the way forward

Despite the impressive progress, the use of multimodal AI also raises questions, particularly about data security and user feedback. Projects such as Gemini Cursor rely on intensive data processing, which can include sensitive information while real-time analysis is running. It remains crucial that security measures and transparent data protection policies are built in.

Another aspect is accessibility: users with a technical background can handle installing and using the cursor, but intuitive installation processes and user-friendly elements are essential to reach a broader audience. In areas such as education and industry, AI-driven assistants such as Gemini Cursor could lastingly increase the acceptance of such solutions.

Summary of the key points

- The Gemini Cursor is multimodal (it can see, hear and speak) and enables low-latency, real-time interactions.

- Main applications include the analysis of diagrams, website navigation support and use as a real-time tutor in education.

- The technical foundation consists of current technologies such as the Google Gemini API, Electron and React.

- Areas of application range from research and education to e-commerce assistance.

- Data protection, accessibility and user-friendliness remain key challenges.

Source: GitHub