With the recent release of Janus-Pro-7B, a state-of-the-art multimodal understanding and generation model from DeepSeek, the AI industry is entering a new phase of technological advancement. The China-based AI startup combines innovative architectural approaches with extensive use of training data. This sets new standards, especially in a market dominated by established players such as OpenAI and Stability AI.

Unified architecture and flexibility in multimodality

Janus-Pro-7B is distinguished by a unified transformer architecture that is used for both understanding and generating multimodal content. The special feature of this architecture is the decoupling of visual encoding into separate processing paths, one for understanding and one for generation. This reduces the conflict that arises when a single visual encoder has to serve both roles. For understanding, the model uses SigLIP-L as its vision encoder; for image generation, it uses a tokenizer with a downsampling factor of 16.
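To make the downsampling factor concrete, the following minimal sketch computes how many image tokens a tokenizer with a factor of 16 would produce. The function names are hypothetical, and the 384 x 384 resolution is an assumption chosen for illustration, not a figure from this article.

```python
def image_token_grid(height: int, width: int, downsample: int = 16) -> tuple[int, int]:
    """Return the (rows, cols) of the token grid for an image of the given size."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be multiples of the downsampling factor")
    return height // downsample, width // downsample


def image_token_count(height: int, width: int, downsample: int = 16) -> int:
    """Return the total number of tokens in the grid."""
    rows, cols = image_token_grid(height, width, downsample)
    return rows * cols


# Assumed example resolution (not stated in the article): 384 x 384 pixels.
print(image_token_grid(384, 384))   # (24, 24)
print(image_token_count(384, 384))  # 576
```

Each side shrinks by a factor of 16, so the token count falls by a factor of 256 relative to the pixel count. This is what keeps the sequence short enough for a transformer to generate autoregressively.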

This structured approach not only gives the model more flexibility and precision, but also sets Janus-Pro-7B apart from earlier approaches, which often relied on separate systems to achieve similar results. It thus shows one way to address the increasingly complex requirements of multimodal AI research.

Impressive performance and practical applications

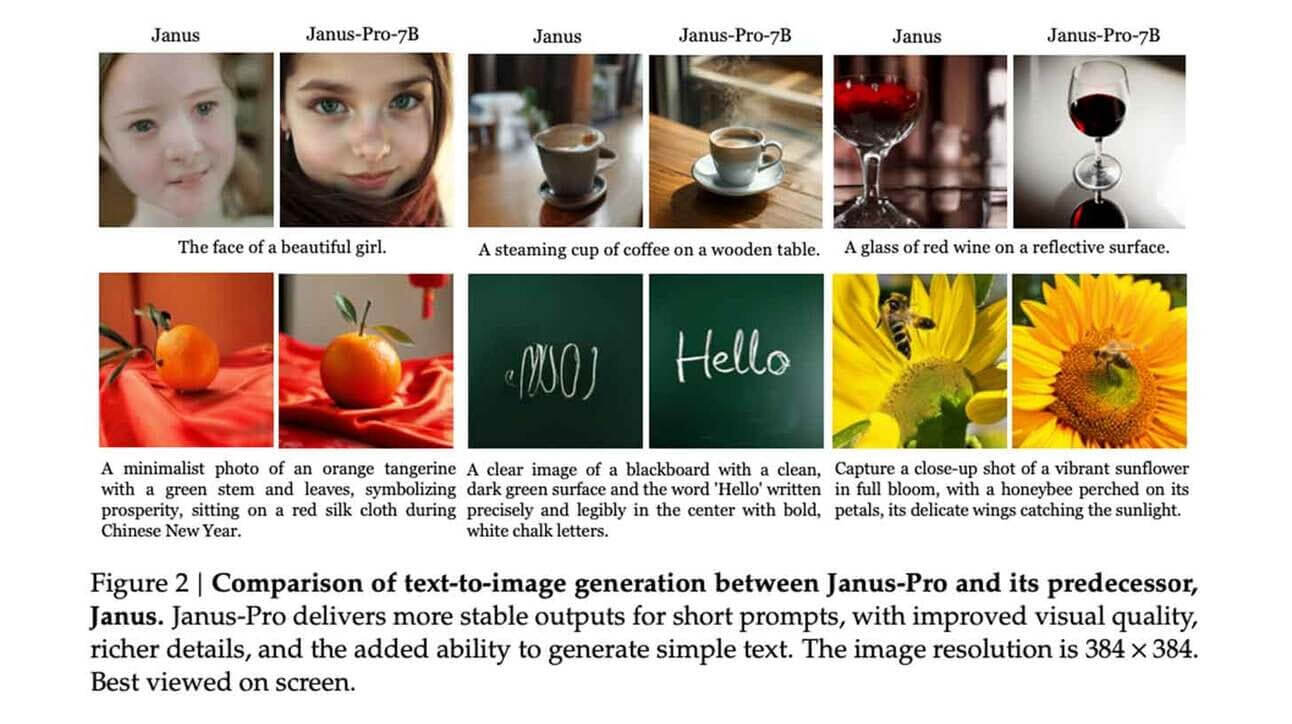

The performance of Janus-Pro-7B is particularly evident in its versatility. The model delivers strong results in image description, text recognition (OCR) and visual question answering, as well as in more complex tasks such as landmark recognition and text-to-image generation. In image synthesis benchmarks in particular, it is reported to outperform other state-of-the-art systems such as OpenAI's DALL-E 3.

The quality is based on a combined dataset of 72 million synthetic and real images used to train the model. At 7 billion parameters, Janus-Pro-7B is small enough to keep computing requirements moderate while remaining large enough to handle these tasks well. For tech-savvy professionals, the model is accessible on Hugging Face as well as on GitHub. The code is provided under the MIT license, while use of the model itself is governed by the DeepSeek Model License, which facilitates integration into professional demonstrations and commercial projects.
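As a rough back-of-the-envelope check on what 7 billion parameters means for hardware, the sketch below estimates the memory needed just to hold the weights at different numeric precisions. It assumes exactly 7,000,000,000 parameters (the nominal figure) and ignores activations, caches and framework overhead, so real usage will be higher. The function and table names are hypothetical.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str = "bfloat16") -> float:
    """Estimate the memory for the weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


NOMINAL_PARAMS = 7_000_000_000  # assumption: the rounded "7 billion" figure

for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gib(NOMINAL_PARAMS, dtype):.1f} GiB")
```

Under these assumptions, the weights come to roughly 13 GiB in 16-bit precision, which suggests why a model of this size can run on a single high-memory GPU.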

New perspectives for start-ups and the AI industry

The release of Janus-Pro-7B clearly shows that innovative progress is not just the preserve of a handful of large companies. Start-ups such as DeepSeek demonstrate how a strong focus on research, coupled with the targeted use of data and technology, can challenge the established players. The trend towards open licensing (here, the MIT license for the code and the DeepSeek Model License for the model) could prove to be a catalyst for future collaborations and multidisciplinary innovation.

Particularly important for the industry is the growing multimodal potential of such technologies, which creates a basis for expanding the design and analytical capabilities of AI in the real economy. The focus here is not only on technological enhancements, but also on the ethical, legal and usability issues that need to be taken into account during further development.

The most important facts about Janus-Pro-7B

- Model architecture: Autoregressive design with decoupled visual encoding and a unified transformer architecture.

- Performance: Outperforms market-leading solutions in image synthesis benchmarks and achieves excellent results in multiple multimodal tasks.

- Training: Trained on 72 million high-quality synthetic and real images; 7 billion parameters.

- Application areas: From Visual Question Answering to text-to-image generation.

- Accessibility: Available on Hugging Face and GitHub with detailed setup instructions. GitHub repository: Janus

- Market impact: Strengthens the position of start-ups and signals where future innovation in AI may come from.

Source: Hugging Face