Sakana AI’s new Continuous Thought Machine (CTM) makes neural synchronization the core mechanism for machine thinking, a marked departure from how conventional AI models are built.

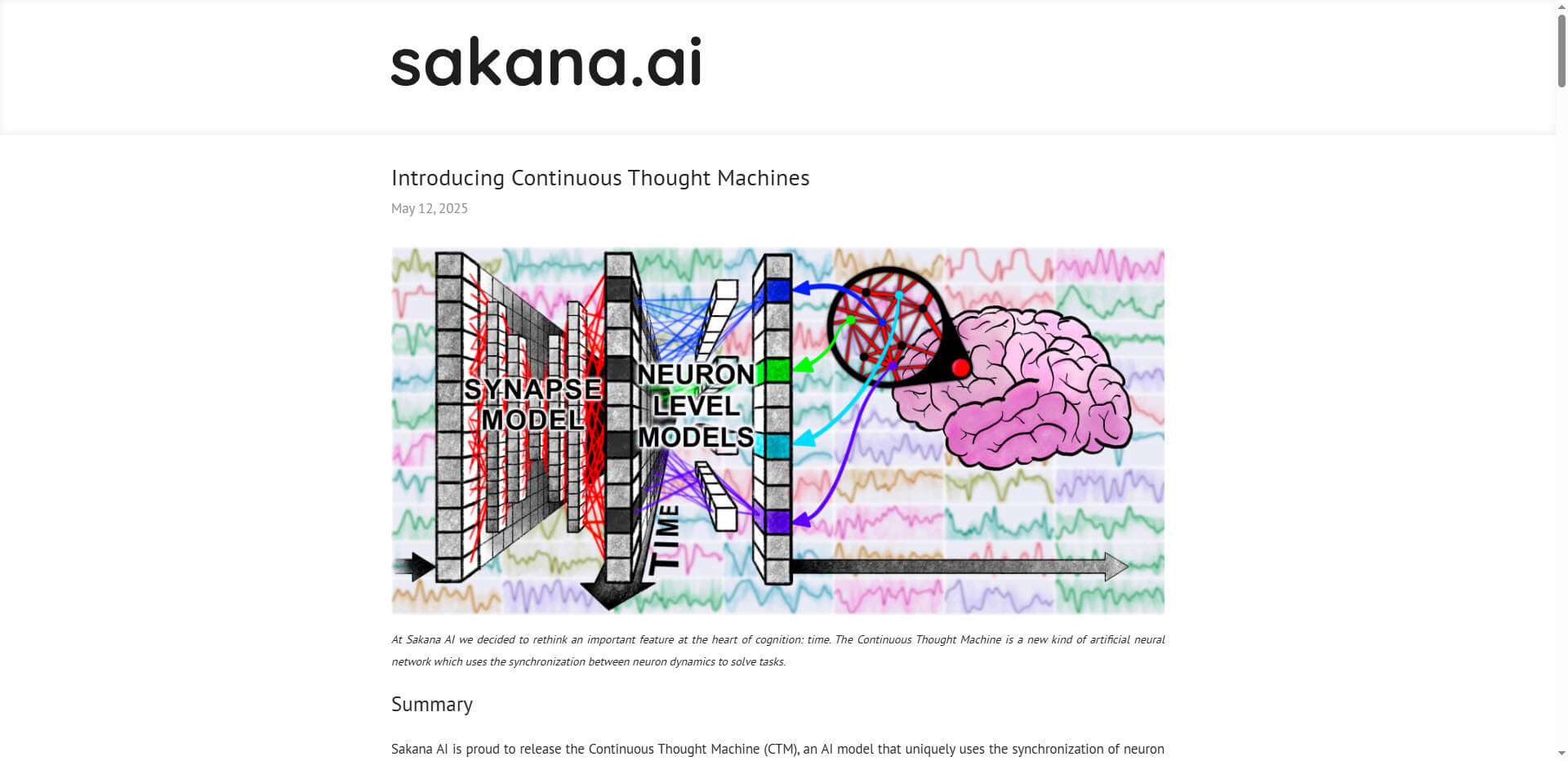

Biological brains use precise neural timing and synchronization for complex cognitive tasks. Traditional AI models have abstracted these temporal dynamics away in favor of computational efficiency. CTM challenges this approach by introducing neuron-specific temporal processing: each neuron processes its own history of incoming signals with its own weight parameters. According to Sakana AI, this enables a stepwise, human-like way of thinking and improves both performance and interpretability.
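
The idea of neuron-specific processing can be illustrated with a minimal sketch. It assumes that each neuron keeps a short sliding window of its incoming signals and applies a single private weight vector followed by `tanh`; the function names, the window length and the single-layer form are illustrative assumptions, not Sakana AI's implementation.

```python
import math
import random

def make_neuron_weights(num_neurons, history_len, seed=0):
    """Give every neuron its own private weight vector over its input history."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(history_len)]
            for _ in range(num_neurons)]

def neuron_level_step(histories, weights):
    """Compute one activation per neuron from that neuron's own history.

    histories[i] holds the most recent incoming signals of neuron i,
    weights[i] holds the weights that belong to neuron i alone.
    """
    activations = []
    for history, w in zip(histories, weights):
        pre_activation = sum(h * wi for h, wi in zip(history, w))
        activations.append(math.tanh(pre_activation))
    return activations

def push_signal(histories, new_signals):
    """Slide each neuron's history window forward by one incoming signal."""
    return [history[1:] + [signal]
            for history, signal in zip(histories, new_signals)]

# Two neurons, each remembering its three most recent incoming signals.
histories = [[0.0, 0.5, 1.0], [1.0, 0.5, 0.0]]
weights = make_neuron_weights(num_neurons=2, history_len=3)
activations = neuron_level_step(histories, weights)
histories = push_signal(histories, new_signals=[0.2, -0.2])
```

Because the weights are not shared, two neurons that see the same history can still respond differently, which is the property the paragraph above describes.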

Unlike conventional neural networks, CTM operates along a self-generated timeline of internal reasoning steps that are decoupled from the input sequence length. This enables the processing of static data through iterative refinement and adaptive computational depth – the model dynamically adapts its computational intensity to the complexity of the task.
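
A toy loop can make the decoupling of internal steps from input length concrete. The sketch below assumes a simple confidence threshold as the stopping rule and a hypothetical per-class `evidence` vector that stands in for whatever the model extracts from a static input; neither is taken from the CTM itself.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def think(evidence, max_ticks=50, confidence_threshold=0.9):
    """Refine a prediction for one static input over internal steps.

    Each step adds the (hypothetical) evidence to the running logits, so
    inputs with a large margin cross the threshold sooner than ambiguous
    ones. Returns (predicted class, steps used, final confidence).
    """
    logits = [0.0] * len(evidence)
    for tick in range(1, max_ticks + 1):
        logits = [l + e for l, e in zip(logits, evidence)]
        probs = softmax(logits)
        if max(probs) >= confidence_threshold:
            break  # confident enough: stop spending compute on this input
    return probs.index(max(probs)), tick, max(probs)

easy = think([2.0, 0.0, 0.0])   # clear-cut input
hard = think([0.3, 0.2, 0.0])   # ambiguous input
```

Both inputs have the same size, yet the ambiguous one consumes many more internal steps before the loop stops, which is the sense in which computational depth adapts to difficulty.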

Impressive performance in complex problems

In comparative experiments, the CTM shows clear advantages over conventional architectures. On parity tasks with sequences of length 64, the CTM reaches 100% accuracy when it uses more than 75 internal clock steps, while LSTMs plateau below 60% accuracy and make effective use of at most 10 steps. In maze navigation, the CTM achieves an 80% success rate, compared to 45% for LSTMs and only 20% for feed-forward networks.
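
For readers unfamiliar with the parity benchmark, here is a minimal sketch of what one example might look like. It assumes binary inputs and a single label for the whole sequence; the exact encoding used in Sakana AI's experiments may differ.

```python
import random

def parity(bits):
    """Return 1 if the sequence contains an odd number of ones, else 0."""
    return sum(bits) % 2

def make_parity_example(length=64, seed=0):
    """Draw a random binary sequence and compute its parity label."""
    rng = random.Random(seed)
    bits = [rng.randint(0, 1) for _ in range(length)]
    return bits, parity(bits)

bits, label = make_parity_example()

# Flipping any single element flips the label, so no element can be ignored.
flipped = bits[:]
flipped[0] ^= 1
flipped_label = parity(flipped)
```

Since every element influences the answer, the task rewards models that can keep working through the sequence over many internal steps.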

Image classification is particularly noteworthy: on ImageNet-1K, the CTM achieves 75.2% top-1 accuracy with 512 internal clock steps, which puts it in the range of ResNet-18 (69.8%) while being significantly better calibrated. Its neuronal activity shows task-dependent oscillations and traveling waves similar to those seen in biological visual processing.

The internal dynamics of the CTM make its reasoning unusually transparent. During image processing, its attention heads systematically scan relevant features. On CIFAR-10H, the CTM achieves a calibration error of only 0.15 (lower is better), ahead of both humans (0.22) and LSTMs (0.28).
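
The article does not say which calibration metric is behind these figures. As an illustration only, the sketch below computes the expected calibration error (ECE), a widely used measure that bins predictions by confidence and averages the gap between confidence and accuracy; the choice of ten bins is an assumption.

```python
def expected_calibration_error(confidences, correct, num_bins=10):
    """Weighted mean gap between confidence and accuracy across bins.

    confidences[k] is the model's confidence in its k-th prediction (0..1),
    correct[k] says whether that prediction was right.
    """
    bins = [[] for _ in range(num_bins)]
    for conf, hit in zip(confidences, correct):
        # A confidence of exactly 1.0 falls into the last bin.
        index = min(int(conf * num_bins), num_bins - 1)
        bins[index].append((conf, hit))

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, h in members if h) / len(members)
        ece += (len(members) / total) * abs(mean_conf - accuracy)
    return ece

# An overconfident model: 90% confidence, but only half the answers are right.
overconfident = expected_calibration_error([0.9] * 10, [True] * 5 + [False] * 5)
```

A well-calibrated model scores close to 0, because its stated confidence matches how often it is actually right.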

Bridging the gap between neuroscience and machine learning

CTM demonstrates that biologically inspired temporal processing can lead to practical AI advances. It implements memory without recurrence – synchronized neural states store information beyond immediate activations and enable memory-like behavior without explicit memory cells. It also offers natural adaptive computation, where the model intrinsically modulates the depth of computation based on problem complexity.
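
One simple way to quantify synchronization is the inner product between the activation traces of two neurons over the internal steps. The sketch below assumes that definition and toy traces; it is meant to show why such a quantity carries information from earlier steps, not to reproduce the CTM's exact formulation.

```python
def synchronization_matrix(traces):
    """Pairwise inner products of neuron activation traces.

    traces[i][t] is the activation of neuron i at internal step t.
    """
    n = len(traces)
    return [[sum(a * b for a, b in zip(traces[i], traces[j]))
             for j in range(n)]
            for i in range(n)]

def update_synchronization(sync, new_activations):
    """Fold one more step into the matrix without replaying earlier steps."""
    n = len(new_activations)
    return [[sync[i][j] + new_activations[i] * new_activations[j]
             for j in range(n)]
            for i in range(n)]

traces = [
    [1.0, -1.0, 1.0, -1.0],   # neuron 0 oscillates
    [1.0, -1.0, 1.0, -1.0],   # neuron 1 oscillates in phase with neuron 0
    [-1.0, 1.0, -1.0, 1.0],   # neuron 2 oscillates in anti-phase
]
sync = synchronization_matrix(traces)
sync_next = update_synchronization(sync, [1.0, 1.0, 1.0])
```

Each entry accumulates over all steps so far, so the matrix reflects the whole activity history rather than only the latest activations, which is what gives it a memory-like character.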

However, challenges remain: each internal clock cycle requires a full forward pass, which roughly triples training costs compared to LSTMs. Current implementations handle at most 1,000 neurons, and scaling to transformer size (≥1 billion parameters) has not yet been demonstrated.

Summary

- The Continuous Thought Machine uses neural synchronization as a fundamental mechanism for machine thinking

- CTM enables step-by-step problem solving similar to human thinking and increases performance and interpretability

- In benchmark tests, the system outperforms traditional architectures on parity tasks, maze navigation and image classification

- Neural activity shows biologically plausible patterns such as task-dependent oscillations and traveling waves

- The system provides exceptional transparency through interpretable attention traces and precise confidence estimates

- Despite higher computational costs, the model shows a promising path for next-generation biologically inspired AI systems

Source: Sakana