Groundbreaking AI technology gives people with severe paralysis their voice back, with a delay of just 50 to 100 milliseconds.

A research team from the University of California, Berkeley and UC San Francisco (UCSF) has developed a neuroprosthesis that converts brain signals into natural-sounding speech. The innovation is a significant advance in the field of brain-computer interfaces: it enables people with severe paralysis to speak in near real time by transforming their neural activity directly into audible speech.

Unlike previous systems, which first generated text and only then converted it into speech, this neuroprosthesis uses AI algorithms that process brain signals continuously and turn them into speech with a delay of just 50-100 milliseconds. That is comparable to the response speed of commercial voice assistants such as Alexa or Siri.
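The difference between the two approaches can be illustrated with a minimal Python sketch. It is not the researchers' implementation: the 80 ms frame length, the function names and the toy decoder are all assumptions made for illustration, and compute time is ignored. The point is only that a streaming decoder emits audio after each short frame, while a text-first pipeline has to wait for the whole utterance.

```python
from typing import Callable, Iterable, Iterator, List

# Hypothetical frame length, picked from inside the reported 50-100 ms range.
FRAME_MS = 80


def stream_decode(frames: Iterable[list], decode_frame: Callable[[list], float]) -> Iterator[float]:
    """Yield one output chunk per incoming frame, as soon as that frame arrives."""
    for frame in frames:
        yield decode_frame(frame)


def batch_decode(frames: Iterable[list], decode_frame: Callable[[list], float]) -> List[float]:
    """Buffer the whole utterance first, then decode it (text-first style)."""
    buffered = list(frames)
    return [decode_frame(frame) for frame in buffered]


def first_output_delay_ms(n_frames: int, streaming: bool) -> int:
    """Time until the first output: one frame when streaming, all frames otherwise."""
    return FRAME_MS if streaming else n_frames * FRAME_MS


if __name__ == "__main__":
    # Toy stand-in for a real decoder: each "frame" is a list of numbers, summed.
    frames = [[1, 2], [3], [4, 5, 6]]
    print(list(stream_decode(frames, sum)))
    print(first_output_delay_ms(25, streaming=True))
    print(first_output_delay_ms(25, streaming=False))
```

Under these assumptions, a two-second utterance split into 25 frames produces its first sound after one frame when streamed, but only after the full two seconds when buffered.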

The technology can be personalized and can even reproduce the voice the user had before their illness. One participant with amyotrophic lateral sclerosis (ALS) reported that he was “happy” to hear his synthesized voice. Particularly impressive: the system also decodes paralinguistic features such as pitch, intonation and volume, enabling users to emphasize words, ask questions and even sing melodies.

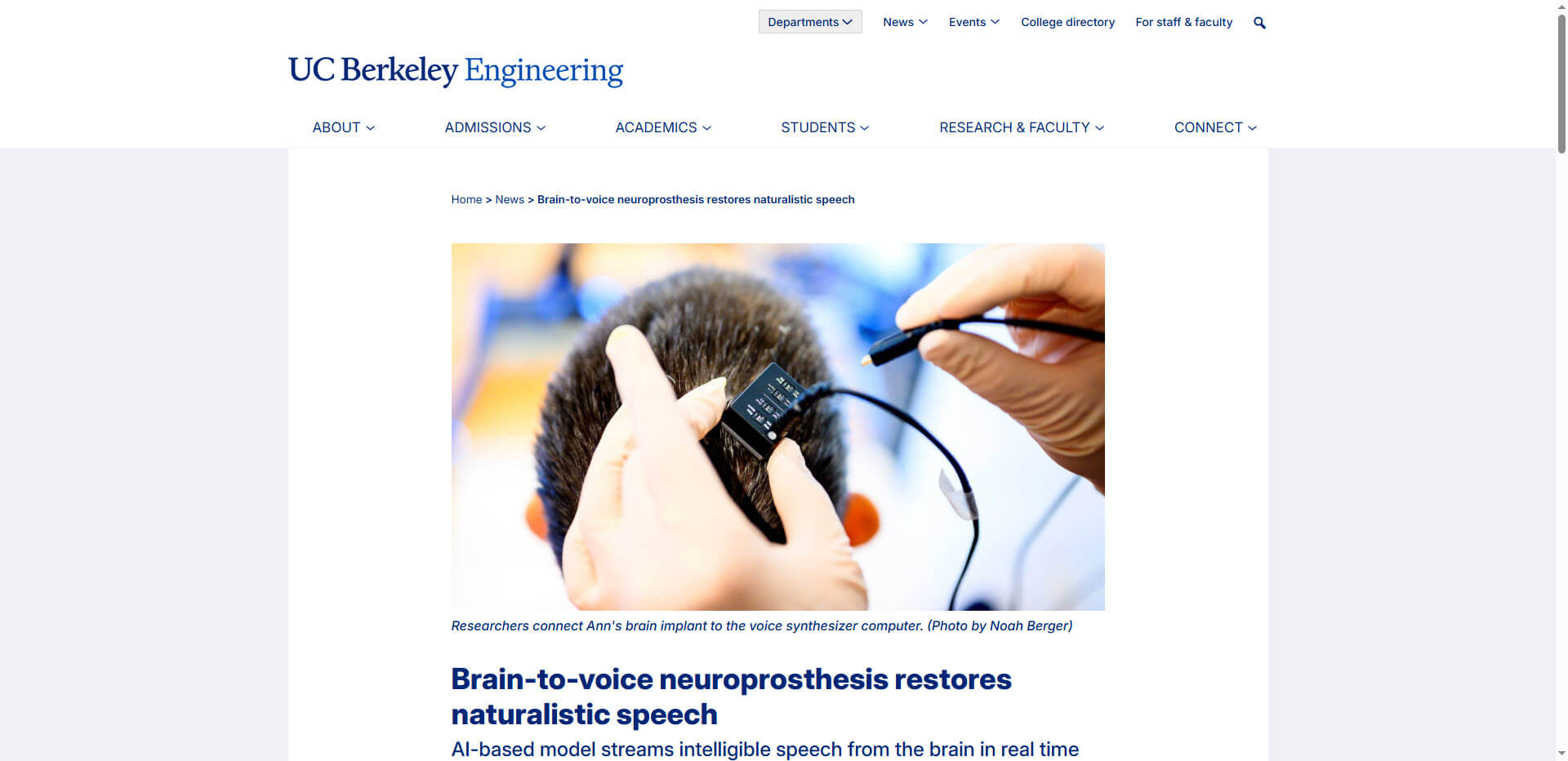

The clinical results are promising. One participant named Ann was able to increase her communication speed from 2.6 words per minute with eye-tracking devices to near-natural speech rates. Another participant, T15, achieved a 0.90 correlation in synthesized speech accuracy and was able to spell words in real time.

The most important facts about the neuroprosthesis:

- Minimal latency: 50-100 milliseconds from speech attempt to audible output

- Multimodal compatibility: Works with different types of electrodes (ECoG, MEAs, sEMG)

- Personalization: Can mimic the user’s original voice

- Expressiveness: Enables intonation, questions and even singing

- Significant improvement: From a few words per minute to almost natural speaking speeds

- Future developments: Improvement of emotional expressiveness, expansion of vocabulary, miniaturization of hardware

Source: Engineering Berkeley