With its concept of the “Open Agentic Web”, Microsoft is redefining the future of digital interaction and placing autonomous AI systems at the center of technological development.

AI Technology

Microsoft’s ADeLe framework revolutionizes AI evaluation with 88% prediction accuracy

Microsoft’s new ADeLe framework changes how AI models are evaluated: it predicts a model’s performance on new tasks with 88% accuracy and also explains why models fail or succeed.

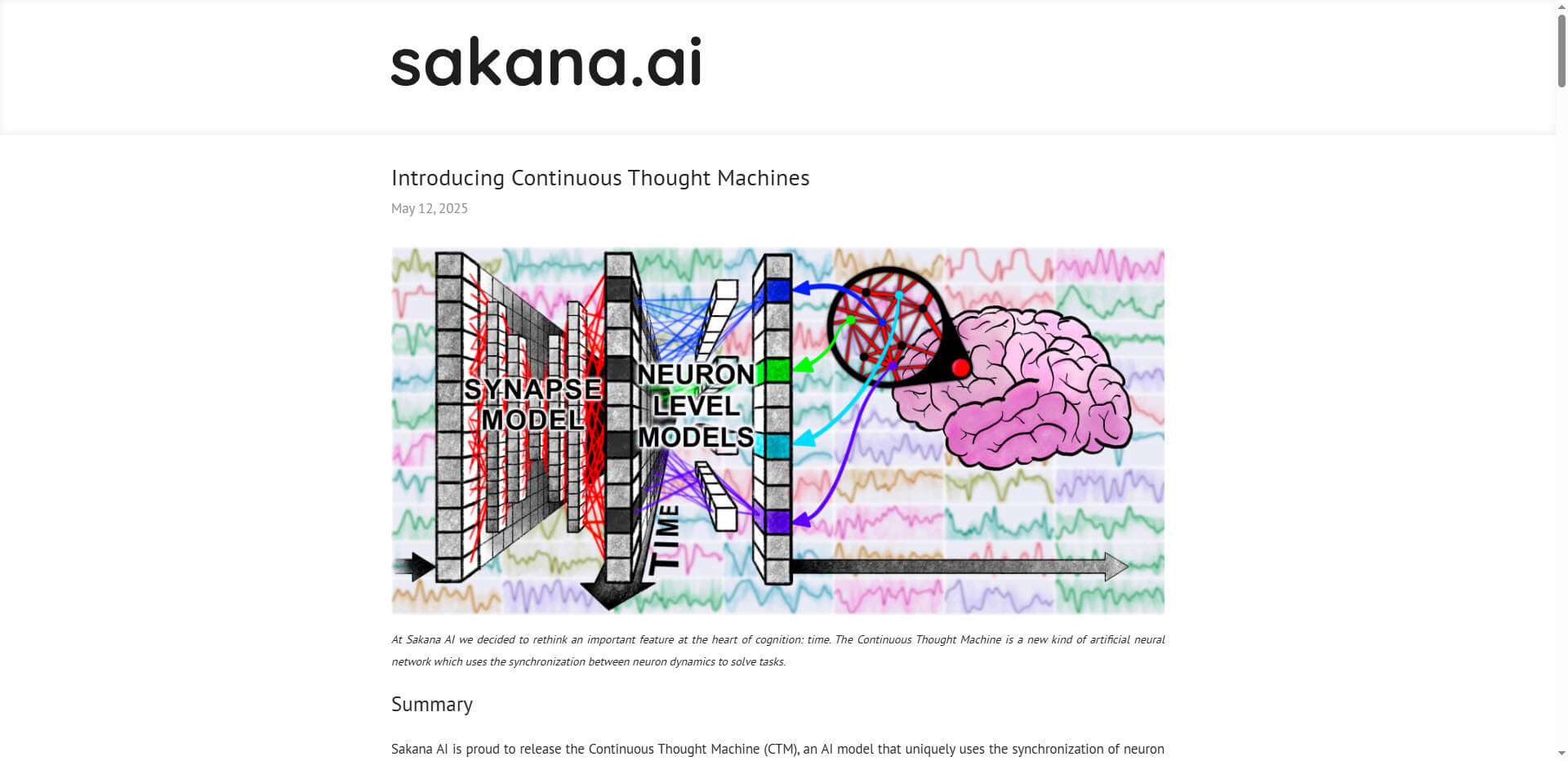

Continuous Thought Machine: How Sakana AI integrates biological thinking into AI systems

Sakana AI’s new Continuous Thought Machine (CTM) represents a radical paradigm shift in AI development by establishing neural synchronization as the core mechanism for machine thinking.

Microsoft implements Google’s A2A protocol: AI agents are networked in a standardized way for the first time

Microsoft has announced that it is implementing Google’s Agent2Agent (A2A) protocol. The move enables Azure AI Foundry and Copilot Studio to integrate with external AI agents, including those built on non-Microsoft platforms.

Phare benchmark revealed: Leading AI models deliver wrong information up to 30% of the time

The latest study by Giskard in collaboration with Google DeepMind shows that leading language models such as GPT-4, Claude and Llama invent facts that sound convincing but are false up to 30% of the time. These AI hallucinations pose a growing risk to businesses and end users, and they become more frequent when the models are instructed to give short, concise answers.

ZeroSearch from Alibaba: 88% cost reduction in AI training with superior performance

With ZeroSearch, Alibaba has developed an AI training method that reduces training costs by 88% and, in tests, even outperforms Google Search.