The new Ironwood TPU (7th generation) from Google revolutionizes AI infrastructure with exceptional performance and efficiency. This hardware innovation addresses the growing requirements of complex AI models and offers significant advantages for your development and inference processes.

- Drastically improved energy efficiency not only enables a lower carbon footprint, but also leads to significant cost savings in training and inferencing large models.

- Faster training cycles reduce the time from weeks to days, allowing you to experiment more frequently and drastically reduce your time-to-market on AI projects.

- Specialized architecture optimizes matrix multiplications and fixes the memory bandwidth bottle neck, which significantly speeds up the processing of multimodal data (text, image, video).

- Exclusively availablein the Google Cloud Platform, Ironwood offers excellent integration with JAX and TensorFlow, but requires consideration of a potential vendor lock-in.

- Democratization of training through lower financial barriers to entry also enables smaller companies to train their own foundation models, which was previously reserved for tech giants.

This technology forms the foundation for the next generation of AI applications – from autonomous agents to real-time video generation and complex AI systems that can be seamlessly integrated into business processes.

Would you like to finally know how to scale AI projects not only faster but also more sustainably? Then stay tuned – because with Ironwood, Google is launching a new generation of Tensor Processing Units (TPU v7) that aims to do just that.

In practice, it looks like this: Your model training sometimes takes forever, cloud costs shoot through the roof – and you know this is not a scalable state. Ironwood promises a real change of direction. No more: “Upgrade after upgrade, but the bottleneck remains.”

This is where real efficiency comes into play, without you having to wade through pages of white papers.

What can you expect in the article?

We’ll show you:

- Why Ironwood noticeably speeds up your workflow in training and inference

- How energy efficiency saves your costs (and nerves!)

- When and for whom the switch to Ironwood really makes sense – without concealing vendor lock-in traps

We don’t stick to buzzwords, but give you practical insights, calculation examples and mini FAQs. Whether you’re a CTO, product owner or data scientist – here you’ll get plain language that has a direct impact on your everyday tech life.

Copy & paste prompt? Coming later!

For now, we’ll give you the overview you need to get off to a flying start with Ironwood.

Ready to experience the end of computing brakes? Then let’s find out together how Ironwood can take your next AI project to the next level!

Ironwood TPU: The new generation of AI infrastructure is here

Google has just reshuffled the deck. The Ironwood TPU, the seventh generation of its Tensor Processing Units, sets the bar for hardware performance extremely high. This is not just a minor version update – this is the fuel we desperately need for the next wave of Generative AI.

You probably know the pain point from your own projects: Our models are becoming exponentially more complex, parameter numbers are exploding and computing capacity is reaching its physical limits.

The end of the line? Not with this hardware.

We are facing a massive infrastructure problem. The hunger for computing power for training and inference not only eats up budgets, but also drives energy consumption to unsustainable levels. If your latency times go up, the user experience suffers. If electricity costs explode, your business case dies.

This is exactly where Google comes in with Ironwood. This chip was not developed in a vacuum, but as a direct response to the scalable workloads you run in the cloud today.

What Ironwood means for you

Ironwood is more than just silicon; it’s a promise for the efficiency of your pipeline. According to Google, the focus is massively on better energy efficiency with higher performance per watt.

For you as a developer or tech lead, this means:

- Faster iterations: When training drops from days to hours, you can experiment more often and get your models to market faster.

- Sustainable scaling: You can deploy larger models without your carbon footprint (and cloud bill) going through the roof.

- Optimized inference: Your applications respond faster. In a world where real-time interaction with LLMs is becoming the standard, latency is the new currency.

We are moving away from “brute force” to intelligent, specialized hardware. Ironwood is the foundation on which you build the AI tools of tomorrow. Get ready to adapt your workflows – the hardware is now ready for the next step.

Ironwood TPU in detail: Technical specifications and innovations

No more waiting for training. Google is stepping up and launching the 7th generation of its Tensor Processing Units (TPU) with Ironwood. If you thought Trillium (6th generation) was the end of the line, think again. Ironwood isn’t just an iterative update – it’s a massive leap for your infrastructure performance.

The foundation of this chip is Google’s consistent custom silicon approach. Unlike generic GPUs, which are used for both graphics and AI, Ironwood is tailor-made for the matrix multiplications of modern Transformer models. Hardware and software interlock perfectly here.

What makes Ironwood better than Trillium

A direct comparison with the previous generation shows where Google has made the leverage. For you as an ML engineer, this means in concrete terms:

- Massive memory bandwidth: The most common bottleneck with large LLMs is not computing power, but data transfer. Ironwood offers significantly faster High Bandwidth Memory (HBM) so that your GPUs don’t have to wait for data (“memory wall”).

- Optimized interconnects: The latency between the chips in the pod has been reduced. This allows you to scale Distributed Training even more linearly across thousands of chips.

- Specialized sparsity cores: The architecture has been adapted to calculate sparse models more efficiently. This means you can skip unnecessary parameters in the network without losing performance.

Efficiency: More power, less overhead

This is where it gets economically interesting. Today, hardly any company can afford performance at any price. Ironwood sets new standards in energy efficiency (performance per watt).

Why is this important to you?

- Reduction of operating costs (OpEx): If you run inference on a large scale, every watt directly impacts your cloud bill. Ironwood delivers more throughput with the same amount of energy.

- Sustainability: AI training is regularly criticized for its carbon footprint. With the 7th generation, you can train more complex models without exploding your ecological footprint.

Ironwood is the answer to the question of how we can compute the next wave of multimodal models. Get ready to adapt your pipelines to this new power.

Ironwood vs. the competition: how Google stacks up against NVIDIA and AMD

The market for AI accelerators is no longer a pure NVIDIA monopoly. With Ironwood (TPU v7), Google is throwing a real heavyweight into the ring that challenges the dominance of the H100 and H200 GPUs not through brute force, but through intelligent architecture. For you as a developer or architect, this means that you finally have valid alternatives to optimize your training and inference stack.

Specialization vs. all-rounder

While NVIDIA’s Hopper architecture (H100/H200) is the undisputed generalist for practically any CUDA-based application, Ironwood plays to its strengths in a specific niche: Massive Matrix operations in Google’s own ecosystem.

- Training: Ironwood was developed to scale huge Transformer models more efficiently. Thanks to optical interconnects (OCI), thousands of chips can be networked to form a super-pod. This drastically reduces latencies and accelerates convergence for large LLMs.

- Inference: This is where the chip shines with its enormous energy efficiency. Google has designed Ironwood in such a way that it generates more tokens per watt than comparable GPUs. This massively reduces your ongoing operating costs as soon as your model goes live.

Cost efficiency and ecosystem

Possibly the biggest lever for your budget: price-performance. NVIDIA instances are regularly extremely expensive and scarce. Google is positioning Ironwood in the Google Cloud Platform (GCP) as a more cost-effective alternative for workloads that run on frameworks such as JAX or TensorFlow.

But beware: you are tied to the Google ecosystem here. Unlike AMD’s MI300X, which is increasingly positioning itself as an open drop-in replacement for NVIDIA, Ironwood is an exclusive cloud product. In return, however, you get a vertically integrated solution in which hardware and compiler(XLA) are perfectly matched.

The direct comparison

Here you can see at a glance where Ironwood stands in comparison to the top dogs:

| Feature | Google Ironwood (TPU v7) | NVIDIA H100 / H200 | AMD Instinct MI300X |

| Primary focus | Efficiency & scaling (training/inference) | Maximum performance & flexibility | Memory bandwidth & price-performance |

| Software stack | Optimized for JAX / TensorFlow (PyTorch via XLA) | CUDA (industry standard, maximum compatibility) | ROCm (open source, catching up fast) |

| Networking | Proprietary optical circuit switches (extremely scalable) | NVLink / InfiniBand | Infinity Fabric |

| Availability | Exclusively via Google Cloud (GCP) | Everywhere (cloud, on-prem), but regular delivery bottlenecks | Cloud (Azure/Oracle) & on-prem |

| Rock star factor | 🚀 Best choice for pure cloud-native & JAX users | 👑 The standard for maximum flexibility | 🛠 The price-performance challenger |

Conclusion for you: If your stack is already on Google Cloud and you’re ready to move to JAX or optimized PyTorch, Ironwood is regularly the more economical and scalable choice over expensive NVIDIA hardware.

Practical application: Use the Ironwood TPU in Google Cloud

Enough theory, let’s take a look at how you can put this computing power on the road. Ironwood is not just a technical upgrade, but a real game changer for your development workflow. If you’re ready to massively reduce your training times, here’s your roadmap.

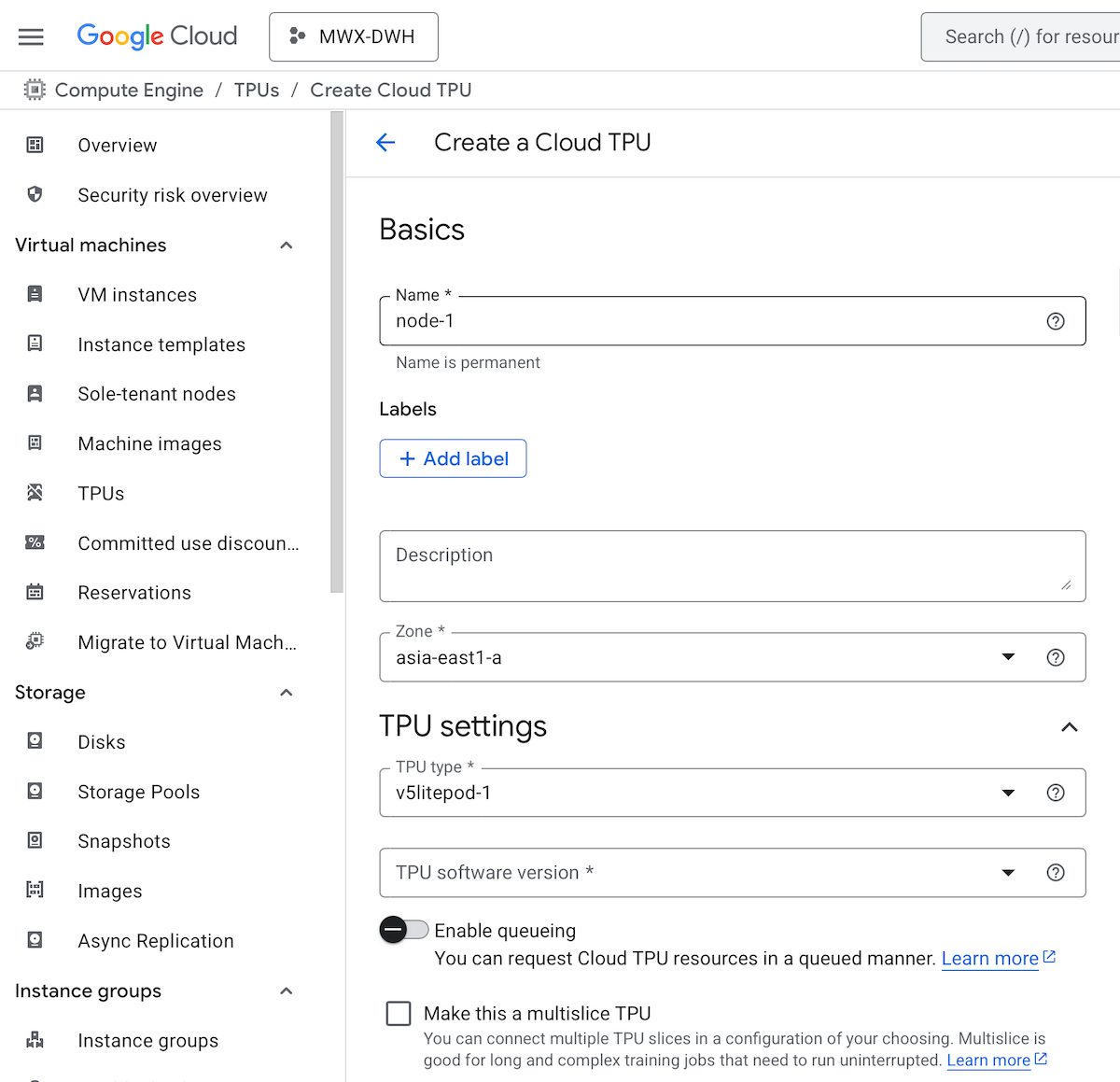

The access: Into the Google Cloud

No need to buy hardware or cool server rooms. Ironwood is available to you directly via the Google Cloud Platform (GCP). Access is typically via TPU nodes or TPU VM architectures.

- Setup: Start your instance via the

gcloud CLIor the console. Make sure to directly select the new tpu-v7-ironwood (or corresponding codename flags) zones.- Starte deine Instanz über die

gcloud-CLI oder die Konsole. - Use a supported zone for the tpu-v7-ironwood : https://docs.cloud.google.com/tpu/docs/regions-zones

- Activate the Cloud-TPU-API: https://console.cloud.google.com/apis/library/tpu.googleapis.com

- Go to Compute Engine > TPU and create a new TPU engine: https://console.cloud.google.com/compute/tpus/

- Starte deine Instanz über die

- First steps: Use preconfigured deep learning images to avoid driver conflicts. You want to code, not debug.

How to create a TPU in Google Cloud (HuggingFace): https://huggingface.co/docs/optimum-tpu/en/tutorials/tpu_setup

Workloads: When is Ironwood your tool?

Not every task needs a sledgehammer. Ironwood is particularly useful for massive parallel computing.

- Training LLMs: When you train transformer models with billions of parameters.

- Large-Scale Inference: When latency is your nemesis and you need real-time answers for complex GenAI models.

- Scientific simulations: Wherever matrix multiplications dominate.

Migration and code: JAX & TensorFlow

The days when TPU code had to be completely rewritten are over. Google has massively optimized the software stacks.

- JAX is king: Ironwood and JAX are made for each other. Performance scaling across multiple pods is most seamless here.

- TensorFlow & PyTorch: Thanks to the XLA (Accelerated Linear Algebra) compiler, your existing graphs are efficiently mapped to the TPU architecture.

- Best practice: Use high-level libraries such as Flax or Keras. They abstract the hardware distribution so that you can concentrate on the model architecture.

The ROI check: Is the change worth it?

Here you need to think strategically. The hourly price for Ironwood may seem higher than standard GPUs, but the metric that matters is “time-to-convergence”.

- If you finish training your model in 2 days instead of 2 weeks, you not only save on compute costs, but gain valuable time-to-market.

- For small experimental projects, stick to smaller instances. But as soon as you go into production or fine-tuning large models, the efficiency of Ironwood pays for itself almost immediately.

Impact on AI development: training and inference rethought

Let’s talk turkey: Hardware isn’t just “infrastructure”, it’s the limiter of your creativity. If your model training takes weeks, you not only lose time, you also lose your innovative edge. Ironwood is a massive game changer, especially when you’re working with huge amounts of parameters.

The most obvious advantage is the massive speed boost for Large Language Models (LLMs) and complex multimodal systems. We are not talking about incremental improvements here. Ironwood has been specifically optimized to more efficiently process the matrix multiplications that are at the heart of any neural network.

This has direct consequences for your workflow:

- Faster iteration cycles: you can validate (or discard) experimental architectures faster.

- Multimodal power: The simultaneous processing of text, image and video finally becomes fluid thanks to the increased memory throughput.

- Scalability: You can run larger batches without a drop in performance.

Of course, Google uses this power itself first. Ironwood is the engine behind the latest iterations of Gemini. What this means for you: If you use Google models via the API, you indirectly benefit from decreasing latencies and more precise outputs, as Google enables its models to be retrained faster and more often on this hardware.

But perhaps the most exciting point is the democratization of training. The extreme increase in energy efficiency per computing operation reduces the effective cost of training your own foundation models. A few years ago, training a viable LLM was the preserve of tech giants. With more efficient TPUs like Ironwood, the financial barrier to entry for start-ups and medium-sized companies is falling dramatically.

Finally, this opens up new horizons for inference. While training runs regularly in batch mode, inference must happen in real time. Ironwood makes it possible to integrate significantly more complex models into real-time applications without the end user experiencing lag. This is key for the next wave of AI apps, which will not only function in the data center, but as a backend for edge cases and decentralized services. So your next AI agent isn’t just getting smarter, it’s getting damn fast.

Strategic classification: opportunities, limits and market positioning

Ironwood is a technical beast, no question. But as Lead Tech Editor, I’ll tell you: hardware is only as good as the strategy behind it. Before you throw your entire training budget into the new TPU generation, we need to talk about vendor lock-in.

TPUs are highly specialized. If you align your models and pipelines – regularly using JAX or highly optimized TensorFlow – to the Ironwood architecture, you lock yourself exclusively to the Google Cloud Platform (GCP). A later switch to AWS or Azure? That will be painful and expensive, as you will have to refactor your code to run efficiently on NVIDIA GPUs again.

The chip war and availability

We are in the middle of a massive arms race. Ironwood is Google’s frontal assault on NVIDIA’s H100 and the upcoming Blackwell chip. For you as a user, this “chip war” has one huge advantage: competition drives down the price of inference and training in the long run.

But beware: high-end hardware is rare.

- Availability: Expect initial waiting times. The largest clusters are regularly reserved for strategic Google partners or internal projects (such as Gemini updates).

- Roadmap: Ironwood is not the end of the road. The cycles are getting shorter. Google is already optimizing the successors to address the architecture of Large Language Models (LLMs) even more specifically. Your infrastructure must remain agile.

Investment recommendation: For whom is it worthwhile?

Not everyone needs the power (and shackles) of Ironwood. Here is my assessment for your company:

- Startups & Scale-ups: if flexibility is your currency, stick with GPU-based clusters for now or go with containers that are portable. Vendor lock-in can be deadly for young companies if Google raises prices. Only use Ironwood selectively for massive training jobs if the cost efficiency beats the migration effort.

- Enterprises: Is your data already in the Google Data Lake anyway? Do you train your own foundation models? Then Ironwood is a no-brainer. Vertical integration into the GCP services (Vertex AI, GKE) saves you a huge amount of time and engineering resources. This is where performance beats flexibility.

Don’t opt for the hype, but for the workflow that suits your scaling.

The infrastructure of the AI future is taking shape

Forget incremental updates. With the 7th generation of its TPUs – codenamed Ironwood – Google is clearly showing where the hammer hangs. We’re no longer just talking about a little more speed. We’re talking about the fundamental hardware architecture needed to make the wildest AI dreams of the next few years computable in the first place.

Ironwood is the engine that hums under the hood while we play with ChatGPT or Gemini on the surface. The key learnings you need to take away:

- Massive efficiency: more processing power per watt means not only a greener bottom line, but tangible cost savings when training huge models.

- Memory bandwidth: The bottleneck with modern LLMs is regularly not the computing power, but how fast data can be moved. Ironwood releases this brake.

- Scaling: These chips are built to work in clusters (“pods”) that act like a single supercomputer.

What does that mean for you in concrete terms?

This is where the wheat is separated from the chaff. Depending on your role, you have to act now:

1. For ML Engineers & Data Scientists: if you’ve been fixated solely on GPUs, now is the time to make the switch. The integration of TPUs into frameworks like JAX and PyTorch (via XLA) is smoother than ever. Test your workflows on the Google Cloud. You could push training times from weeks to days.

2.For CTOs & Product Managers: Hardware is no longer a boring infrastructure topic, but a real competitive advantage. With the more efficient inference that Ironwood enables, you can bring more complex models (e.g. multimodal agents) live without blowing up your unit economics. Do the math!

The outlook: The next wave of innovation

Ironwood is the fuel for what’s to come. We are moving away from pure chatbots towards autonomous agents and video generation, which are real computing monsters. This hardware makes it possible to apply AI not just as an isolated tool, but as a real-time layer over entire business processes.

The infrastructure is ready. Now it’s up to you to build the software that puts this power on the road. Let’s rock this.

Ironwood makes your AI projects realistically scalable

The hardware revolution is here, and Ironwood TPU v7 is the proof: AI training and inference have never been so efficient and cost-effective at the same time. Google hasn’t just built a new chip – they’ve rewritten the rules of the game for your next generation of AI applications.

These are three game-changers you need to have on your radar:

- Energy efficiency becomes your budget lever: Ironwood delivers more performance per watt. This means lower cloud costs for more complex models

- Memory bandwidth solves the memory wall: Your LLMs are no longer waiting for data, but are continuously computing

- Scaling across thousands of chips: Multi-pod training makes Foundation Models affordable even for medium-sized companies

Your next steps – ready to implement today:

Start small, but start now. Test your critical workloads on Google Cloud with TPU v7 instances. Compare your current GPU-based training times with Ironwood performance. You’ll be surprised.

Revise your ML pipeline for JAX or optimized PyTorch. The migration is less complex than expected, but the performance boost is massive.

Recalculate your TCO (Total Cost of Ownership). Ironwood may seem more expensive per hour, but shorter training times and more efficient inference fundamentally change your economics.

The AI infrastructure of tomorrow is ready today. While others are still philosophizing about hardware, you are already building the applications that will be possible with it.