This article provides a detailed insight into the cost structure of leading AI models and shows how you can save significant costs through strategic model selection and prompt optimization without sacrificing quality.

- Tokens are the basis for calculating all AI costs. As a rule of thumb, one token corresponds to around 0.75 words. This ratio comes from English text; German, with its long compound words, usually needs more tokens per word. Complex or rare terms consume more tokens than everyday language.

- Output tokens cost significantly more than input tokens for all providers, for example five times as much for Claude Sonnet (3 euros input vs. 15 euros output per million tokens), which significantly increases the overall costs for text-generating tasks.

- Gemini 2.5 Pro uses dynamic pricing: the input price doubles as soon as the prompt exceeds 200,000 tokens. This cost trap can be avoided by prompt chunking (splitting long inputs into smaller text blocks).

- The price differences between models are enormous: for 150,000 monthly product descriptions, you pay just 9 euros with Gemini Flash, while Claude Sonnet costs 427.50 euros for the same task – a factor of 47.

- Hybrid model strategies maximize cost efficiency: Use inexpensive models such as Gemini Flash for simple tasks and premium models such as Claude Opus only for complex requirements to save up to 80 percent of the costs.

- Legal aspects cause hidden costs due to GDPR compliance, lack of copyright protection for AI-generated content and liability issues, which must be taken into account in the overall cost calculation.

The right AI strategy starts with an understanding of tokenomics – those who optimize here can save hundreds of euros per month and still deliver quality work.

One million tokens sounds like a lot – but how much AI do you really get for that? The answer will determine your marketing budget in 2025.

Did you know that Claude Sonnet can cost up to 47 times as much as Gemini Flash for the same task? What may seem like a trivial calculation factor at first glance can quickly eat into your monthly budget – or save you hundreds of euros.

Tokens = money: If you don’t understand how GPT-4, Claude and Gemini calculate their prices, you are guaranteed to pay too much. Tokens set the pace of your AI costs, and they are the biggest lever for your profit.

In this article, we finally crack the black box in a practical way:

- How much will AI tokens really cost in 2025 – in euros, not abstract figures?

- Why do providers charge different prices per token for your questions and answers?

- Which model suits which use case – and when is it worth switching?

- Where are the hidden costs, the budget traps for marketers & product owners?

You will find out how you can use a few targeted tricks to

- reduce your token costs by up to 90 percent

- always make the smartest model choice for each task

- get a complete handle on the risks of price jumps and limit overruns

💡Tip: Don’t be fooled by list prices – targeted prompt design and the right batch process will save you money. You can find a concise table and mini FAQ below!

Whether you’re a CEO, growth hacker or techie: after this deep dive, you’ll be able to calculate your costs on the fly, readjust your AI strategy – and finally make time for real creativity again.

Let’s jump into the world of AI tokenomics and decode what really matters in 2025.

What are tokens and why do they determine AI costs?

Tokens are the currency of the AI world – they directly determine how much you pay for each ChatGPT, Claude or Gemini call. If you don’t understand tokens, you’re giving away money.

Token basics in 60 seconds

A token is the smallest unit of text that an AI model processes. A token does not always correspond to a word or a character, and the exact counts depend on each model’s tokenizer:

- “Hallo” (hello) = 1 token (common German word)

- “Zusammengehörigkeitsgefühl” = 4 tokens (rare, long compound word)

- “KI” = 1 token (well-known abbreviation)

- “Artificial intelligence” = 3 tokens

Rule of thumb: one token corresponds to approximately 0.75 words. This ratio comes from English text; German, with its long compounds, usually needs more tokens per word. Complex technical terms, foreign words or rare terms “cost” more tokens than everyday language.
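If you want a quick estimate before reaching for a tokenizer, the rule of thumb translates directly into code. This Python sketch is only as good as the 0.75 ratio it assumes; `estimate_tokens` is a hypothetical helper name, and for binding figures you should use your provider’s tokenizer or token-counting endpoint.

```python
import math

# Rule-of-thumb ratio from the text (derived from English text).
# For German, pass a lower value, because compounds split into more tokens.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Roughly estimate the token count of `text` from its word count."""
    word_count = len(text.split())
    # Round up so that budget estimates err on the expensive side.
    return math.ceil(word_count / words_per_token)


if __name__ == "__main__":
    briefing = " ".join(["word"] * 150)  # a 150-word product briefing
    print(estimate_tokens(briefing))     # 150 / 0.75 = 200 tokens
```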

Input vs. output tokens: the cost lever

This is where the decisive price difference lies: answers cost significantly more than questions for all providers. Claude Sonnet 4 costs 3 euros per million input tokens, but 15 euros per million output tokens – a factor of five.

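Why this asymmetrical pricing? The model can process all input tokens in parallel in a single pass, but it has to generate output tokens one at a time, with a separate computation step for each new token. Generation therefore ties up considerably more computing power per token than reading the input.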
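The asymmetry shows up as soon as you price a single call. This Python sketch uses the Claude Sonnet 4 list prices quoted above (3 and 15 per million tokens) and a hypothetical request with 2,000 input and 500 output tokens: the output makes up only a fifth of the tokens but about 56 percent of the cost.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost of one API call, given list prices per million tokens."""
    input_cost = input_tokens / 1_000_000 * input_price_per_m
    output_cost = output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost


if __name__ == "__main__":
    # Claude Sonnet 4 list prices from the text: 3 (input) and 15 (output) per million tokens.
    total = request_cost(2_000, 500, 3.0, 15.0)
    output_share = request_cost(0, 500, 3.0, 15.0) / total
    print(f"cost per call: {total:.4f}, output share: {output_share:.0%}")
```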

💡Tip: Use token-counting tools such as OpenAI’s online Tokenizer (or its tiktoken library) or Anthropic’s token-counting API for precise cost estimates before larger projects.

Context length as a price driver

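Particularly treacherous: the dynamic pricing of Gemini 2.5 Pro. For prompts of up to 200,000 tokens, you pay 1.25 euros per million input tokens. Above that, the price doubles to 2.50 euros, and the higher rate applies to the entire prompt, not just to the tokens beyond the threshold.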
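The jump is easy to see in code. This Python sketch assumes the rates from the text and that the rate is chosen by the size of the whole prompt; `gemini_pro_input_cost` is a hypothetical helper, not part of any SDK.

```python
def gemini_pro_input_cost(prompt_tokens: int,
                          base_rate: float = 1.25,
                          long_context_rate: float = 2.50,
                          threshold: int = 200_000) -> float:
    """Input cost of a single prompt under threshold-based pricing.

    Assumption: the rate is picked by the size of the whole prompt, so crossing
    the threshold reprices every token, not just the excess.
    """
    rate = base_rate if prompt_tokens <= threshold else long_context_rate
    return prompt_tokens / 1_000_000 * rate


if __name__ == "__main__":
    for tokens in (200_000, 200_001, 300_000):
        print(tokens, round(gemini_pro_input_cost(tokens), 4))
```

One extra token beyond the threshold roughly doubles the input bill for that prompt, from 0.25 to about 0.50 euros.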

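Prompt caching lets you save up to 90 percent on repeated input if your requests share an identical prompt prefix, such as the same system prompt or reference document. Batch processing, where requests are submitted together and processed asynchronously, reduces costs by a further 50 percent or so compared with individual real-time requests.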
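How the two discounts combine can be estimated with a small function. This Python sketch is built on stated assumptions rather than any provider’s exact billing rules: cached input is billed at 10 percent of the normal price (matching the “up to 90 percent” figure), one-off cache write surcharges are ignored, and the batch discount stacks on top. `input_cost_with_discounts` is a hypothetical name.

```python
def input_cost_with_discounts(input_tokens: int,
                              price_per_m: float,
                              cached_share: float = 0.0,
                              cache_read_factor: float = 0.10,
                              batched: bool = False,
                              batch_discount: float = 0.50) -> float:
    """Estimate input cost after prompt caching and batch discounts.

    Assumptions (check your provider's price list):
    - cached tokens cost `cache_read_factor` times the normal input price;
    - cache write surcharges are ignored;
    - the batch discount applies on top of the caching discount.
    """
    if not 0.0 <= cached_share <= 1.0:
        raise ValueError("cached_share must be between 0 and 1")
    full_price = input_tokens / 1_000_000 * price_per_m
    uncached_cost = full_price * (1 - cached_share)
    cached_cost = full_price * cached_share * cache_read_factor
    cost = uncached_cost + cached_cost
    if batched:
        cost *= 1 - batch_discount
    return cost


if __name__ == "__main__":
    # 10 million input tokens at 3 per million; 80 percent are a shared, cached prefix.
    print(round(input_cost_with_discounts(10_000_000, 3.0), 2))
    print(round(input_cost_with_discounts(10_000_000, 3.0, cached_share=0.8), 2))
    print(round(input_cost_with_discounts(10_000_000, 3.0, cached_share=0.8, batched=True), 2))
```

Under these assumptions, 30 euros of input drop to 8.40 euros with caching and to 4.20 euros with caching plus batching.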

The token mechanics ultimately determine your AI budget – if you understand them, you can optimize in a targeted manner and save hundreds of euros per month.

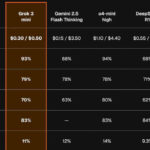

AI price comparison 2025: the hard figures

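The AI market has changed dramatically in 2025. OpenAI cut prices by 26 percent, Google positioned itself as a discount provider, while Anthropic stuck with premium prices. The list prices below are in US dollars per million tokens; the euro calculations in this article apply the same figures one-to-one as an approximation.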

Claude family: Premium at a premium price

Anthropic’s Claude models are among the most expensive on the market but are widely regarded as especially strong at code generation:

- Claude Opus 4: 15 dollars input / 75 dollars output per million tokens

- Claude Sonnet 4: 3 dollars input / 15 dollars output

- Claude Haiku 4: 1 dollar input / 5 dollars output

Claude Opus justifies its price, roughly 7.5 times GPT-4.1’s input price and about 9 times its output price, through superior performance on complex coding tasks and output limits of up to 32,000 tokens per response.

OpenAI GPT portfolio: Aggressive pricing policy

OpenAI counters with drastic price reductions:

- GPT-4.1: 2 dollars input / 8 dollars output

- GPT-4o: 2.50 dollars input / 10 dollars output

- GPT-4o Mini: 0.15 dollars input / 0.60 dollars output

The new GPT-4.1 Mini costs only 0.40 dollars input and 1.60 dollars output – a direct attack on Google’s Flash model. Batch processing additionally reduces costs by up to 50 percent.

Google Gemini: Context-dependent costs

Google uses dynamic pricing as differentiation:

- Gemini 2.5 Pro: 1.25 dollars input / 10 dollars output for prompts up to 200,000 tokens

- Gemini 2.5 Flash: 0.075 dollars input / 0.30 dollars output – lowest input price on the market

- Gemini 2.5 Ultra: approx. 10 dollars input / 30 dollars output (estimated)

The cost trap: If your prompt exceeds 200,000 tokens, the Gemini 2.5 Pro input price doubles to 2.50 dollars and the output price rises to 15 dollars per million tokens. For long document analyses, this erodes much of Gemini’s price advantage over Claude Sonnet.

Gemini Flash dominates in high-volume scenarios with the lowest input price of 0.075 dollars per million tokens – ideal for content moderation or simple categorization.


Practical example: Optimizing 150,000 marketing texts

Imagine this: Your content team has to create 5,000 product descriptions every day. A typical e-commerce scenario that can quickly become a cost trap.

Scenario structure: The numbers behind it

Our calculation example is based on a medium-sized online store with ambitious content targets:

- Daily production: 5,000 product descriptions

- Input per text: 200 tokens (product data, briefing, examples)

- Output per text: 150 tokens (optimized description)

- Monthly volume: 30 million input tokens plus 22.5 million output tokens

At 200 input tokens per text, these volumes correspond to around 150,000 product descriptions per month – a realistic scenario for growing e-commerce companies.

Cost comparison: the shocking differences

Monthly costs vary dramatically depending on the model you choose:

- Gemini Flash: 2.25 euros input + 6.75 euros output = 9 euros per month

- GPT-4.1: 60 euros input + 180 euros output = 240 euros per month

- Gemini 2.5 Pro: 37.50 euros input + 225 euros output = 262.50 euros per month

- Claude Sonnet 4: 90 euros input + 337.50 euros output = 427.50 euros per month

The price difference between the cheapest and most expensive model is 47 times – a margin that can make or break your AI budget.
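The comparison can be reproduced in a few lines for three of the models. This Python sketch assumes the monthly volumes from the scenario (30 million input and 22.5 million output tokens) and the list prices quoted in this article, treated as euros; swap in your own volumes and current prices.

```python
# Monthly token volumes from the scenario.
INPUT_TOKENS = 30_000_000
OUTPUT_TOKENS = 22_500_000

# (input, output) list prices per million tokens, as quoted in this article.
PRICES = {
    "Gemini Flash": (0.075, 0.30),
    "GPT-4.1": (2.00, 8.00),
    "Claude Sonnet 4": (3.00, 15.00),
}


def monthly_cost(input_price: float, output_price: float) -> float:
    """Monthly cost for the scenario's token volumes at the given prices."""
    return (INPUT_TOKENS * input_price + OUTPUT_TOKENS * output_price) / 1_000_000


costs = {model: monthly_cost(*prices) for model, prices in PRICES.items()}

if __name__ == "__main__":
    for model, cost in sorted(costs.items(), key=lambda item: item[1]):
        print(f"{model:16} {cost:8.2f}")
    print(f"factor: {max(costs.values()) / min(costs.values()):.1f}")
```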

Quality check: When are 427.50 euros justified by better texts?

The key question is: Does Claude Sonnet really generate 47 times better product descriptions than Gemini Flash?

In practice, A/B tests often show that the cheapest model is sufficient for standardized product descriptions. Complex explanatory texts or creative descriptions, however, benefit considerably from premium models.

💡Tip: Use a hybrid approach – Gemini Flash for basic texts, Claude Sonnet for premium products. This will reduce costs by up to 80 percent while maintaining quality where it counts.

The key lies in targeted use: not every text needs premium quality, but every text needs the right AI for its purpose.
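The hybrid approach boils down to a routing rule plus a blended cost. This Python sketch assumes the scenario’s 200 input and 150 output tokens per text, 150,000 texts per month (the volume implied by 30 million input tokens at 200 tokens each) and a hypothetical 20 percent share of premium products; `route` and the model labels are illustrative, not official API model identifiers.

```python
from collections import Counter

# (input, output) list prices per million tokens, as quoted in this article.
# The model labels are illustrative, not official API model identifiers.
PRICES_PER_M = {
    "gemini-flash": (0.075, 0.30),
    "claude-sonnet": (3.00, 15.00),
}


def route(is_premium: bool) -> str:
    """Hypothetical routing rule: only premium products get the premium model."""
    return "claude-sonnet" if is_premium else "gemini-flash"


def cost_per_text(model: str, input_tokens: int = 200, output_tokens: int = 150) -> float:
    """Cost of one product description with the scenario's token sizes."""
    input_price, output_price = PRICES_PER_M[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


def monthly_cost(premium_flags: list[bool]) -> float:
    """Total cost when each text is routed according to its premium flag."""
    texts_per_model = Counter(route(flag) for flag in premium_flags)
    return sum(count * cost_per_text(model) for model, count in texts_per_model.items())


if __name__ == "__main__":
    # 150,000 texts per month, of which 20 percent are premium products.
    flags = [True] * 30_000 + [False] * 120_000
    hybrid = monthly_cost(flags)
    sonnet_only = monthly_cost([True] * len(flags))
    print(f"hybrid: {hybrid:.2f}, Sonnet only: {sonnet_only:.2f}, "
          f"saving: {1 - hybrid / sonnet_only:.0%}")
```

Under these assumptions, the hybrid setup costs about 92.70 euros instead of 427.50 euros, a saving of roughly 78 percent. A real routing rule could use price tier, category or text length instead of a simple flag.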


Avoid hidden costs and price traps

The transparent token prices are just the tip of the iceberg. Real AI costs arise from hidden fees and unexpected price jumps that can quickly blow your budget.

Context window cost traps

Gemini’s dynamic pricing comes as an expensive surprise: with Gemini 2.5 Pro, the cost doubles from 1.25 to 2.50 euros per million input tokens as soon as your prompt exceeds 200,000 tokens.

Some Gemini Flash versions have followed the same pattern, with higher prices for prompts above 128,000 tokens. So even a cheap 0.075 euro rate is not guaranteed for very long prompts.

Prompt chunking as a solution: divide long documents into smaller sections. Instead of one 300,000-token prompt, you use three separate 100,000-token requests and stay in the low price segment.
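Chunking pays off because the rate is chosen per prompt: with the Gemini 2.5 Pro input rates above, one 300,000-token prompt costs 0.75 euros (at 2.50 euros per million), while three 100,000-token requests cost 0.375 euros in total (at 1.25 euros per million). The Python sketch below packs paragraphs greedily into chunks under a token budget; `chunk_paragraphs` and `estimate_tokens` are hypothetical helpers, and the word-based estimate should be replaced by a real tokenizer. Keep the budget well below the threshold, since each request also carries its instructions, and keep in mind that the model no longer sees the whole document at once.

```python
import math


def estimate_tokens(text: str, words_per_token: float = 0.75) -> int:
    """Rule-of-thumb token estimate; swap in a real tokenizer for production use."""
    return math.ceil(len(text.split()) / words_per_token)


def chunk_paragraphs(paragraphs: list[str], max_tokens: int) -> list[list[str]]:
    """Greedily pack consecutive paragraphs into chunks of at most `max_tokens`.

    A single paragraph larger than `max_tokens` ends up in a chunk of its own
    and would need to be split further.
    """
    chunks: list[list[str]] = []
    current: list[str] = []
    current_tokens = 0
    for paragraph in paragraphs:
        tokens = estimate_tokens(paragraph)
        # Close the current chunk if this paragraph would push it over the budget.
        if current and current_tokens + tokens > max_tokens:
            chunks.append(current)
            current, current_tokens = [], 0
        current.append(paragraph)
        current_tokens += tokens
    if current:
        chunks.append(current)
    return chunks
```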

API limits and overrun fees

Rate limits vary dramatically between providers:

- Claude: 40,000 tokens per minute (Opus), depending on usage tier; requests above the limit are rejected with a rate-limit error rather than queued

- GPT-4: 10,000 to 2 million tokens per minute, depending on usage tier

- Gemini: 1,000 to 4 million tokens per minute, depending on model
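When a request does exceed a limit, the API typically answers with a rate-limit error (HTTP 429). A common pattern is to retry with exponential backoff and a hard cap on attempts, so a throttled job neither hammers the API nor retries forever. This Python sketch is generic: `RateLimitError` is a hypothetical placeholder for whatever exception your SDK raises, and `call` is any zero-argument function that performs the request.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for your SDK's rate-limit (HTTP 429) exception."""


def call_with_backoff(call, max_retries: int = 5, base_delay: float = 1.0,
                      max_delay: float = 60.0, sleep=time.sleep):
    """Run `call()`, retrying with exponential backoff on rate-limit errors."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimitError:
            if attempt == max_retries:
                raise  # give up and surface the error instead of retrying forever
            # 1 s, 2 s, 4 s, ... capped at max_delay, with random jitter so that
            # parallel workers do not retry in lockstep.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))
```

Wrap each request, for example `call_with_backoff(lambda: send_request(prompt))`, where `send_request` stands for your own client code.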

Whether usage scales automatically or is capped by a fixed budget determines how well you control costs. Without limits, faulty loops in your code can burn thousands of euros in minutes.

Subscription versus pay-per-use

Monthly plans are worthwhile from around 50 million tokens per month. Below that, pay-per-use is cheaper; above that, subscriptions offer savings of up to 40 percent.

Enterprise discounts typically start from an annual volume of 100,000 euros and can reduce costs by 20 to 50 percent.

💡Tip: Implement monitoring tools such as OpenAI’s Usage Dashboard or custom alerts to detect cost explosions in real time and activate automatic stops.
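The automatic stop can also be enforced in your own code as a second line of defense next to provider-side limits. This is a minimal in-process sketch; `BudgetGuard` and `BudgetExceeded` are hypothetical names, each request’s cost is assumed to be estimated before the call (for example from token counts and list prices), and a multi-process deployment would need a shared counter instead of an instance attribute.

```python
class BudgetExceeded(RuntimeError):
    """Raised when a request would push spending past the hard limit."""


class BudgetGuard:
    """Hypothetical in-process spending guard with a soft alert and a hard stop."""

    def __init__(self, limit_eur: float, alert_ratio: float = 0.8):
        self.limit_eur = limit_eur
        self.alert_ratio = alert_ratio
        self.spent_eur = 0.0
        self.alert_sent = False

    def charge(self, cost_eur: float) -> bool:
        """Book `cost_eur`; return True the first time the alert threshold is reached.

        Raises BudgetExceeded, without booking, if the hard limit would be exceeded.
        """
        if self.spent_eur + cost_eur > self.limit_eur:
            raise BudgetExceeded(
                f"limit {self.limit_eur:.2f} EUR would be exceeded "
                f"(spent {self.spent_eur:.2f}, requested {cost_eur:.2f})"
            )
        self.spent_eur += cost_eur
        if not self.alert_sent and self.spent_eur >= self.alert_ratio * self.limit_eur:
            self.alert_sent = True
            return True
        return False
```

Call `guard.charge(estimated_cost)` before each request, send an alert when it returns `True`, and let `BudgetExceeded` stop the job.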

The smartest strategy combines multiple models depending on the task: Gemini Flash for simple tasks, Claude Sonnet for complex analysis.

Tokenomics strategies for different use cases

The right choice of model can change your AI costs by a factor of up to 50. Here are the most proven use-case-specific strategies that you can apply immediately.

High-volume, low-complexity tasks

For high-volume, low-complexity tasks, Gemini Flash dominates with 0.075 euros per million input tokens. That is 99.5 percent less than Claude Opus at 15 euros.

Optimal model selection:

- Content moderation: Gemini Flash analyzes 1 million posts for 7.50 euros (assuming around 100 input tokens per post and ignoring output)

- Simple translations: GPT-4o Mini translates at 0.15 euros per million input tokens (0.60 euros per million output tokens)

- Data categorization: Batch processing reduces costs by a further 50 percent or so

💡Tip: Combine batch APIs with cache functions for recurring prompts – this reduces your costs by up to 90 percent.

Creative and complex tasks

Here, quality justifies the premium prices. Although Claude Opus costs 15 euros per million input tokens, it delivers measurably better results in code generation than cheaper alternatives.

Strategic model distribution:

- Code generation: Claude Opus for critical functions, Sonnet for standard code

- Long document analyses: Gemini Pro under 200,000 tokens (1.25 euros), above that Claude Sonnet

- Multi-step reasoning: GPT-4.1 combines quality with a fair price (2 euros per million input tokens)

SME vs. enterprise scenarios

Startup budget: The hybrid approach of Gemini Flash (volume tasks) and GPT-4o Mini (more complex requests) keeps monthly costs below 50 euros for typical SME volumes.

SME: Claude Sonnet establishes itself as the all-round champion: at 3 euros input and 15 euros output per million tokens, it offers the best price-performance ratio for mixed workloads.

Enterprise environments: Negotiated rates can undercut standard prices by 30 to 60 percent. Batch processing and dedicated instances become critical for multi-million token volumes.

The right tokenomics strategy starts with your specific use case and volume – not with the “best” model.


Legal aspects of AI use in Germany

When using Claude, GPT-4 and Gemini via APIs, specific legal risks arise that German companies need to be aware of. Tokenomics are only part of the overall costs – legal compliance can be significantly more expensive than the actual API fees.

GDPR compliance with AI APIs

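Data processing outside the EU is the norm for all three providers: with standard API access, OpenAI, Anthropic and Google process your input outside the EU, typically on US servers, which constitutes a third-country transfer. EU data residency options exist for some offerings, but they generally have to be selected and configured explicitly.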

Legally required:

- Data processing agreements (DPAs) with the AI providers

- Documentation of the legal basis for the data transfer

- Duty to inform data subjects about the use of AI

💡Tip: OpenAI and Anthropic now offer GDPR-compliant DPA templates – Google Gemini is still lagging behind here.

Copyright and generated content

Purely AI-generated content has no copyright protection in Germany, because it does not originate from a human creator. Texts created with Claude or GPT-4 without substantial human creative input can therefore in principle be used freely by others.

Critical industries with special labeling requirements:

- Journalism: Transparency requirement for AI-generated articles

- Marketing: Risk of misleading advertising if AI content is not labeled

- Financial services: Compliance risks with automated advice

Drafting contracts and liability

The providers’ API terms of use contain disclaimers that are only partly effective under German law. OpenAI’s terms, for example, exclude liability for incorrect GPT-4 outputs that lead to business losses.

Service Level Agreements (SLA) vary considerably:

- Claude: 99.5 percent availability on Enterprise plans

- GPT-4: No SLA guarantees in standard API access

- Gemini: Staggered SLAs depending on price level

According to ARAG, legal expenses insurance policies as a rule do not cover AI-related disputes, which creates new insurance gaps for companies.

The ancillary legal costs of AI use are increasingly exceeding the pure tokenomics expenses. A proactive compliance strategy protects against expensive rectifications and fines.

Conclusion

You now have the tools to reduce your AI costs by up to 90 percent. Token mechanics not only determine your expenses, but also the quality of your results – those who master them will gain a decisive cost advantage.

The most important insights that can be implemented immediately:

- Use a hybrid approach: Gemini Flash for volume tasks, Claude Sonnet for complex tasks – save up to 80% while maintaining quality

- Monitor the context window: Staying under 200,000 tokens with Gemini 2.5 Pro halves the input price

- Activate batch processing: Around 50% discount on large, non-urgent jobs through intelligent request bundling

- Implement prompt caching: Recurring prompt prefixes cost up to 90% less through clever cache usage

- Set up usage monitoring: Automatic limits prevent cost explosions due to faulty loops

Your next steps today:

Test the token counter of your preferred provider with a typical project from your everyday work. Calculate the monthly costs for different models. Use batch APIs for larger projects right away – the savings can quickly amortize the setup effort.

The AI revolution won’t happen someday – it’s happening now. Those who master tokenomics will not only use AI smarter, but also cheaper than the competition. Time to secure your competitive edge.