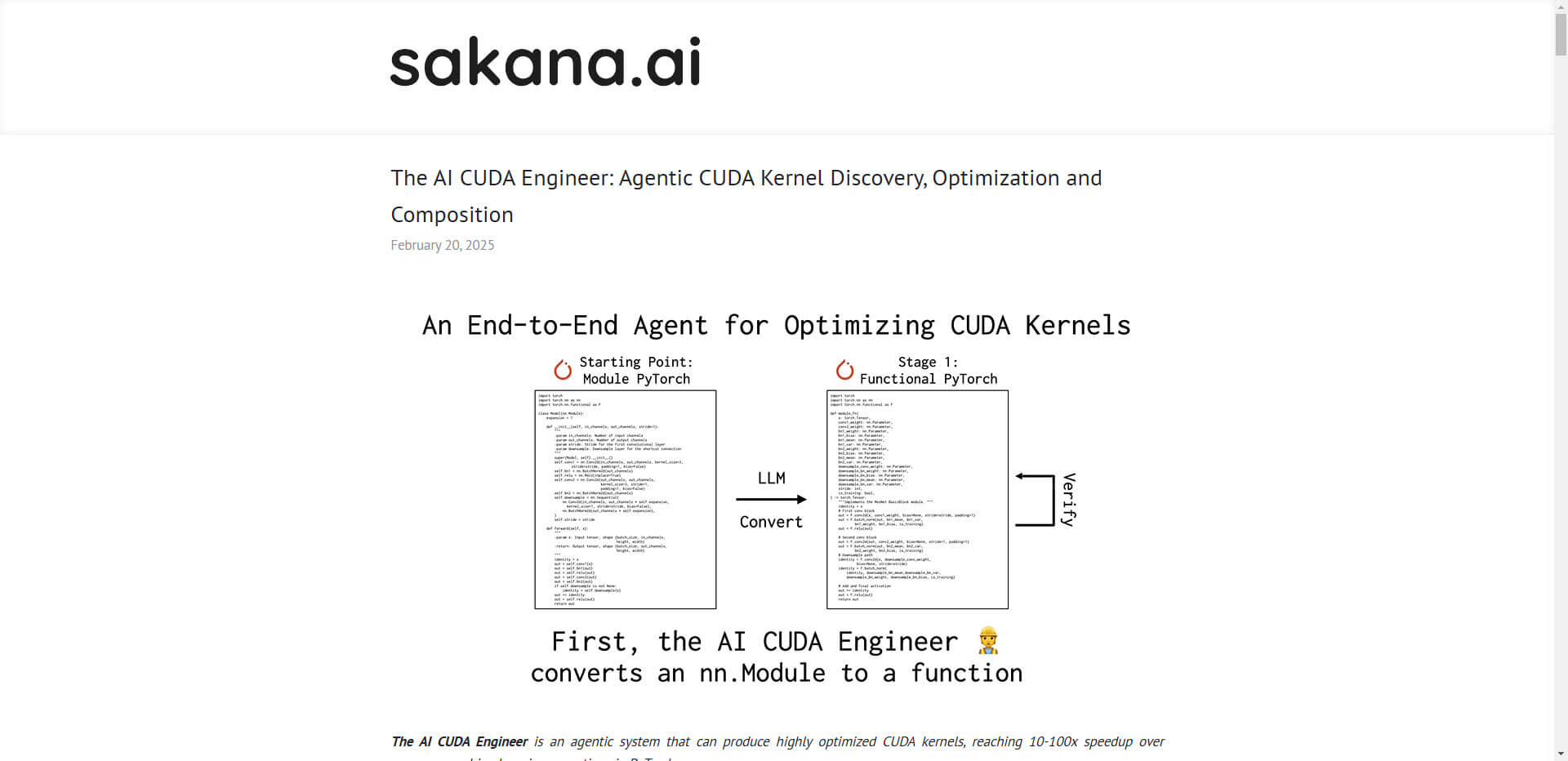

Sakana AI has launched the AI CUDA Engineer, a system that automatically generates highly optimized CUDA kernels for machine learning operations. According to Sakana AI, this can substantially increase the efficiency of models and is a step toward more sustainable AI systems.

A paradigm shift in AI development

The AI CUDA Engineer automates the traditionally manual work of writing CUDA kernels: it translates PyTorch code into optimized CUDA kernels with a success rate of over 90%. For individual operations, the reported speedups are very large. In tests, instance normalization ran up to 381 times faster and matrix multiplication 147 times faster. Across 250 tasks, the system achieved a median speedup of 1.34x over native PyTorch implementations, meaning that the typical gain is far smaller than the headline figures.
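To make the difference between a headline speedup and a median speedup concrete, here is a minimal sketch in Python. The task names and timings are hypothetical values chosen for illustration, not measurements from Sakana AI; the only assumption about the metric is that speedup means baseline runtime divided by kernel runtime.

```python
from statistics import median


def speedup(baseline_ms: float, kernel_ms: float) -> float:
    """Return how many times faster the kernel is than the baseline."""
    if baseline_ms <= 0 or kernel_ms <= 0:
        raise ValueError("timings must be positive")
    return baseline_ms / kernel_ms


# Hypothetical (baseline, kernel) runtimes in milliseconds, not measured data.
timings_ms = {
    "task_a": (4.0, 2.0),    # 2.0x faster
    "task_b": (3.0, 3.0),    # 1.0x, no change
    "task_c": (10.0, 8.0),   # 1.25x faster
    "task_d": (6.0, 1.5),    # 4.0x faster
    "task_e": (5.0, 10.0),   # 0.5x, slower than the baseline
}

speedups = {name: speedup(b, k) for name, (b, k) in timings_ms.items()}

# The median is the middle value once the speedups are sorted,
# so one very large outlier barely moves it.
median_speedup = median(speedups.values())
best_task = max(speedups, key=speedups.get)

print(f"median speedup: {median_speedup:.2f}x")
print(f"largest speedup: {speedups[best_task]:.2f}x ({best_task})")
```

In this toy data the best task is 4x faster while the median is only 1.25x, which mirrors how a figure such as 381x for one operation can coexist with a median of 1.34x over many tasks.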

This progress rests on an evolutionary optimization methodology: techniques such as “crossover” operations and an “innovation archive” continuously drive performance upward. The approach not only translates PyTorch code but also improves the efficiency of the resulting kernels, which matters as data volumes and the computational demands of GPU-based AI workloads keep growing.
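The general idea of an evolutionary search with crossover and an archive can be sketched as follows. This is a toy illustration under stated assumptions, not Sakana AI's implementation: a "kernel variant" is reduced to two hypothetical tuning knobs, `simulated_runtime` is a made-up cost model standing in for a real benchmark, and the archive is simply a dictionary of every variant evaluated so far.

```python
import random

# Hypothetical search space: a "kernel variant" is just two tuning knobs.
BLOCK_SIZES = [32, 64, 128, 256, 512]
UNROLL_FACTORS = [1, 2, 4, 8]


def simulated_runtime(variant):
    """Made-up cost model standing in for a real benchmark; lower is better."""
    block, unroll = variant
    return 1.0 + abs(block - 256) / 256 + abs(unroll - 4) / 4


def crossover(parent_a, parent_b, rng):
    """Build a child by taking each knob from one of the two parents."""
    return (rng.choice([parent_a[0], parent_b[0]]),
            rng.choice([parent_a[1], parent_b[1]]))


def mutate(variant, rng, rate=0.3):
    """Occasionally replace a knob with a random value to keep exploring."""
    block, unroll = variant
    if rng.random() < rate:
        block = rng.choice(BLOCK_SIZES)
    if rng.random() < rate:
        unroll = rng.choice(UNROLL_FACTORS)
    return (block, unroll)


def evolve(generations=30, population_size=8, elite_size=4, seed=0):
    rng = random.Random(seed)
    population = [(rng.choice(BLOCK_SIZES), rng.choice(UNROLL_FACTORS))
                  for _ in range(population_size)]
    archive = {}  # variant -> runtime for every distinct variant seen so far

    for _ in range(generations):
        for variant in population:
            archive.setdefault(variant, simulated_runtime(variant))
        # Parents are drawn from the best archived variants, so good ideas
        # from earlier generations are never lost.
        elite = sorted(archive, key=archive.get)[:elite_size]
        population = [
            mutate(crossover(rng.choice(elite), rng.choice(elite), rng), rng)
            for _ in range(population_size)
        ]

    # Evaluate the final generation before picking the winner.
    for variant in population:
        archive.setdefault(variant, simulated_runtime(variant))
    best = min(archive, key=archive.get)
    return best, archive


best_variant, archive = evolve()
print("best variant:", best_variant, "runtime:", archive[best_variant])
```

Selecting parents from the archive rather than only from the latest generation is one simple design choice for preserving earlier discoveries; a real system would replace the cost model with compilation, correctness checks, and timing on a GPU.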

Implications for the industry and current trends

The release of the AI CUDA Engineer Archive, a dataset of over 30,000 CUDA kernels, including more than 17,000 verified implementations, is a valuable resource for research and development within the AI industry. The dataset is available on Hugging Face under the CC-BY-4.0 license, a positive signal for collaboration and further development in the community.

Such developments reflect the growing trend of using AI to optimize AI systems. This “meta-learning” approach has the potential to make complex models more efficient, reduce the costs of training processes and use resources more sustainably. At the same time, consideration must be given to how these technologies can be integrated and evaluated in scientific and industrial environments in the future.

Opportunities and challenges of AI optimization systems

Although tools such as the AI CUDA Engineer show remarkable progress, parallel developments, such as The AI Scientist from Sakana AI, are a reminder of existing challenges. Despite automation and increased efficiency, questions remain about the quality of research results and the autonomy of AI systems. The scientific community faces the task of defining benchmarks for AI-based research tools so that their reliability and benefits can be verified in the long term.

For companies and developers, this wave of AI innovations means new opportunities to increase operational efficiency. At the same time, it signals the need for strategic investment in training and technology to remain competitive.

The most important facts about the update

- Automation potential: Over 90% of PyTorch operations can be automatically translated into CUDA kernels.

- Enormous speedups: Reported speedups of 10x to 100x for machine learning operations, and up to 381x for specific operations such as instance normalization.

- Saving resources: Optimization systems such as the AI CUDA Engineer make computing processes more sustainable and faster.

- Open research: Publication of the AI CUDA Engineer Archive as an openly licensed (CC-BY-4.0) dataset on Hugging Face.

- Meta-learning trend: The use of AI systems to optimize other AI brings the industry closer to the goal of sustainably efficient systems.

Source: Sakana.ai