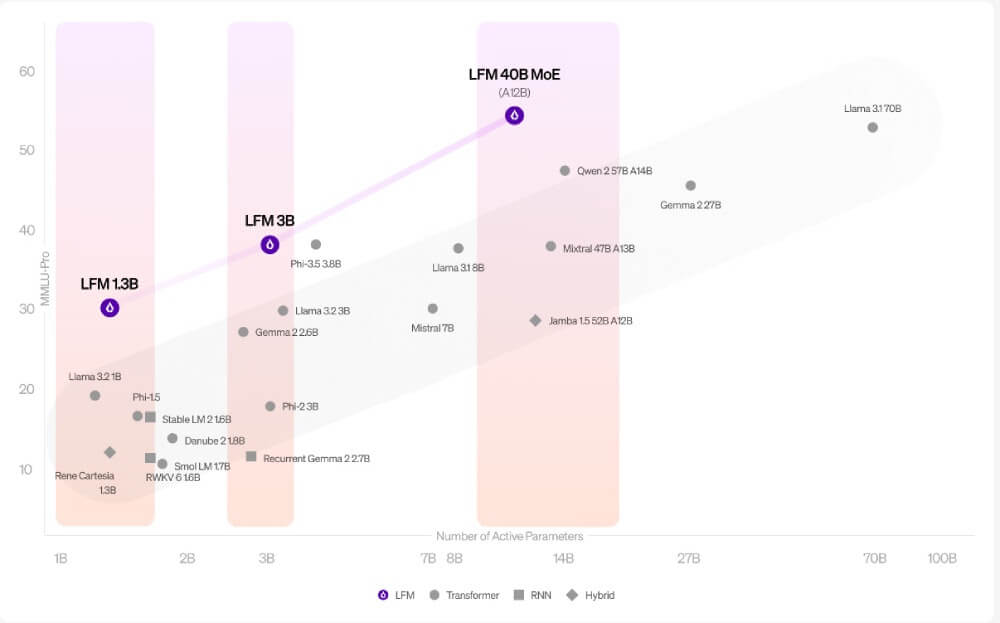

A notable shift is under way in artificial intelligence. Liquid AI's Liquid Foundation Models (LFMs) break with the traditional transformer design and offer a new architecture for scalable, efficient, multi-modal generative AI.

Liquid AI’s LFMs natively support different data modalities such as text, audio, images and video. This is achieved through a robust featurization architecture that allows different data types to be processed in a unified framework. Handling all modalities in one model sets LFMs apart from their predecessors and speeds up the execution of complex tasks.

A key aspect of LFMs is that they can be configured and customized efficiently for a variety of hardware platforms. Targeted optimization for edge devices, cloud environments and specialized hardware such as GPUs and AI accelerators allows them to be integrated into many deployment environments. This versatility makes LFMs well suited to real-world applications where efficiency matters.

At the technical core of LFMs are specialized units for token mixing and channel mixing that keep information flowing efficiently through the model. This design significantly reduces the number of computations required per task, which shortens both training and inference times. The result is faster model iteration, quicker rollout of new features and a tighter feedback loop for continuous improvement.
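To make the two terms concrete, here is a minimal sketch of what token mixing and channel mixing mean in the generic sense used by mixer-style networks. It is not Liquid AI's implementation: the function names, the purely linear mixing and the toy weights are all assumptions chosen for illustration. The input is a small matrix with one row per token and one column per channel (feature).

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [
        [sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def token_mix(x: Matrix, w_tokens: Matrix) -> Matrix:
    """Mix across positions: each output token is a weighted sum of input tokens."""
    return matmul(w_tokens, x)  # (T x T) @ (T x C) -> (T x C)


def channel_mix(x: Matrix, w_channels: Matrix) -> Matrix:
    """Mix across features: each token's channels are recombined independently."""
    return matmul(x, w_channels)  # (T x C) @ (C x C) -> (T x C)


def mixing_block(x: Matrix, w_tokens: Matrix, w_channels: Matrix) -> Matrix:
    """Hypothetical block: token mixing followed by channel mixing."""
    return channel_mix(token_mix(x, w_tokens), w_channels)


# Toy input: 3 tokens, 2 channels (illustrative values only).
x = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

# Assumed token weights: each token is averaged with the one before it.
w_tokens = [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0], [0.0, 0.5, 0.5]]

# Assumed channel weights: swap the two channels.
w_channels = [[0.0, 1.0], [1.0, 0.0]]

mixed = mixing_block(x, w_tokens, w_channels)
print(mixed)
```

In this sketch the token-mixing weights act along the sequence axis and the channel-mixing weights act along the feature axis, so the two steps can be sized and tuned separately. Real models would use learned weights and nonlinearities, and may make the weights depend on the input.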

LFMs are built for scalability and flexibility, which helps organizations expand their AI workloads efficiently. For tasks that involve several types of data, they offer a cost-effective alternative to traditional language models. Reduced energy consumption could also lower operating costs, which is particularly important for companies looking to minimize their environmental impact.

The most important facts about the update:

- LFMs provide native support for multiple data modalities through a unified architecture.

- Optimizations for different hardware environments ensure a wide range of applications.

- The architecture reduces computational cost, which leads to faster training and inference times.

- LFMs impress with their scalability and flexibility, especially for real-world applications.

- Although the models are not currently open source, Liquid AI follows an open science philosophy and plans to make relevant data accessible in the future.

The development of the Liquid Foundation Models represents a significant step forward for the AI industry. Their enhanced capabilities and efficient use of resources offer promising opportunities for companies, but they also raise questions about broader adoption and compatibility with existing technology stacks.

Sources: Liquid Foundation Models